As AI screening becomes regulated, recruiters need decisions they can explain, document, and audit. This guide covers the EU AI Act’s high-risk expectations, NYC Local Law 144 bias-audit and notice requirements, and the practical controls HR should demand from vendors. You will also learn how audit trails, real human oversight, and ongoing fairness monitoring make explainable AI in hiring defensible at scale.


AI has become a practical necessity in recruiting. Resume volume keeps growing, hiring teams stay lean, and candidates expect quick responses. At the same time, regulators are pushing organizations away from “black box” screening toward documented, explainable decisions—especially when AI influences who gets interviewed or hired.


That shift is happening for a simple reason: hiring is a high-stakes decision. When an algorithm amplifies bias or can’t explain outcomes, the risk is no longer just operational. It becomes legal, reputational, and brand-damaging.

One stat puts the urgency in perspective: SHRM reported that 92% of people who click “Apply” never finish an online job application. That drop-off is a business problem, but it’s also a compliance issue when rushed fixes introduce opaque automation.

(Quick note: This article is informational, not legal advice. For specific compliance decisions, involve legal counsel.)

The new compliance reality: AI in hiring is getting regulated like a high-risk system

EU AI Act: employment AI is treated as “high-risk,” with phased deadlines

Under the EU AI Act’s risk-based approach, AI used in employment and worker management, including systems that filter job applications or evaluate candidates, is classified as “high-risk” (Annex III). That classification triggers stronger obligations around risk management, technical documentation, human oversight, and record-keeping.

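Importantly, the EU AI Act applies in phases, not all at once. The European Commission’s own timeline indicates progressive application, with full roll-out foreseen by 2 August 2027; under the adopted text, the obligations for Annex III high-risk systems, which include recruitment tools, apply from 2 August 2026.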

There’s also live policy movement: Reuters reported in November 2025 that the European Commission proposed delaying the stricter “high-risk” AI rules to December 2027 (from August 2026) as part of a broader “Digital Omnibus” simplification package. The proposal remains subject to debate and approval.

What this means for HR leaders: even if dates shift, the direction doesn’t. “Explainability,” documented controls, and auditability are becoming baseline expectations.

New York City Local Law 144: bias audits and disclosure are already required

NYC’s Local Law 144 regulates “Automated Employment Decision Tools” (AEDTs). The city states that employers can’t use an AEDT unless the tool has had a bias audit within the year before its use, a summary of the audit results is publicly available, and the required notices are given to candidates and employees. Enforcement began July 5, 2023.

Researchers have also described NYC’s law as the first major mandate requiring bias audits for hiring tools, signaling where other jurisdictions may go next.
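To see what a bias audit actually measures, it helps to look at the arithmetic. Under the NYC Department of Consumer and Worker Protection (DCWP) rules, a category’s selection rate is the share of its candidates who were selected or advanced, and its impact ratio is that rate divided by the selection rate of the most-selected category. The Python sketch below computes both from simple (category, selected) pairs. It is a minimal illustration, not an audit. The category labels are hypothetical, a real LL144 audit must be performed by an independent auditor and report sex, race/ethnicity, and intersectional categories, and tools that only produce scores use a median-based “scoring rate” instead.

```python
from collections import Counter

def selection_rates(outcomes):
    """Selection rate per category from (category, was_selected) pairs."""
    totals, selected = Counter(), Counter()
    for category, was_selected in outcomes:
        totals[category] += 1
        if was_selected:
            selected[category] += 1
    return {cat: selected[cat] / totals[cat] for cat in totals}

def impact_ratios(outcomes):
    """Each category's selection rate divided by the highest selection rate."""
    rates = selection_rates(outcomes)
    best = max(rates.values())
    if best == 0:
        raise ValueError("no candidates were selected; impact ratios are undefined")
    return {cat: rate / best for cat, rate in rates.items()}

# Illustrative data only: 50 of 100 group_a candidates advanced, 25 of 100 group_b.
outcomes = [("group_a", i < 50) for i in range(100)] + [("group_b", i < 25) for i in range(100)]
print(impact_ratios(outcomes))  # {'group_a': 1.0, 'group_b': 0.5}
```

Here group_b advances at half the rate of group_a, so its impact ratio is 0.5. The law requires these figures to be published but does not set a pass/fail cutoff, which is why employers still need their own review process.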

U.S. enforcement pressure isn’t only “local”

Even where there isn’t a single federal AI hiring law, U.S. risk is real. The EEOC has repeatedly emphasized that AI tools can still violate anti-discrimination laws when used in employment decisions.

And Reuters has highlighted a broader trend: state and local oversight is expanding, litigation is testing vendor and employer liability, and employers are expected to proactively monitor AI outcomes—not just rely on vendor claims.

“Explainable AI” in recruiting: what regulators and candidates actually want

Explainable AI in hiring isn’t about showing the source code of a complex model. In practice, organizations are being pushed to answer these questions clearly:

- What inputs drive the recommendation? (skills, experience, responses, job criteria)

- How are candidates scored and ranked? (defined criteria, consistent application)

- What evidence is logged? (audit trails, recruiter actions, overrides, changes)

- How do you monitor fairness over time? (ongoing measurement, not one-time testing)

This is why the EU AI Act emphasizes controls like record-keeping (automatic event logging) and technical documentation for high-risk systems. It’s also why NYC requires an independent bias audit and public posting of the results summary.

And it aligns with what candidates already feel: HireVue reported that 45% of workers see racial bias as a significant issue in hiring, while 57% believe AI could reduce racial/ethnic bias—meaning trust is possible, but only if systems are governed responsibly.
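The first two questions in that list, what drives a recommendation and how candidates are scored, are easiest to answer when scoring is an explicit, weighted sum of job criteria that can be broken down per candidate. The sketch below assumes that design. The criterion names and weights are hypothetical, each criterion score is assumed to be normalized to [0, 1] upstream, and real screening products may score very differently. The point is the shape of the output: a total, a per-criterion breakdown, and a deterministic ranking a recruiter can explain.

```python
from dataclasses import dataclass

# Hypothetical job criteria and weights set by the hiring team (weights sum to 1.0).
CRITERIA_WEIGHTS = {
    "required_skills": 0.5,
    "years_experience": 0.3,
    "screening_answers": 0.2,
}

@dataclass
class ScoredCandidate:
    candidate_id: str
    total: float
    contributions: dict  # criterion -> weighted points

def score_candidate(candidate_id, criterion_scores, weights=CRITERIA_WEIGHTS):
    """criterion_scores maps each criterion to a normalized score in [0, 1]."""
    missing = set(weights) - set(criterion_scores)
    if missing:
        raise ValueError(f"missing criteria for {candidate_id}: {sorted(missing)}")
    contributions = {c: weights[c] * criterion_scores[c] for c in weights}
    return ScoredCandidate(candidate_id, sum(contributions.values()), contributions)

def rank(candidates):
    # Highest total first; ties broken by candidate_id so the order is reproducible.
    return sorted(candidates, key=lambda s: (-s.total, s.candidate_id))

def explain(scored):
    parts = ", ".join(f"{c}: {p:.2f}" for c, p in scored.contributions.items())
    return f"{scored.candidate_id} scored {scored.total:.2f} ({parts})"

a = score_candidate("c-001", {"required_skills": 1.0, "years_experience": 0.5, "screening_answers": 0.5})
b = score_candidate("c-002", {"required_skills": 0.5, "years_experience": 0.5, "screening_answers": 0.5})
for s in rank([b, a]):
    print(explain(s))
# c-001 scored 0.75 (required_skills: 0.50, years_experience: 0.15, screening_answers: 0.10)
# c-002 scored 0.50 (required_skills: 0.25, years_experience: 0.15, screening_answers: 0.10)
```

Refusing to score a candidate with missing criteria is a deliberate choice: silently treating a gap as zero would produce a ranking nobody can explain later.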

The “vendor promise” era is ending: a practical checklist HR should demand

If your talent acquisition (TA) stack includes AI screening, ranking, chatbots, assessments, or automated scheduling, treat it like a controlled system. Here’s what strong HR teams are increasingly asking for.

1) Independent bias audit readiness (not just internal testing)

If a law requires an independent audit (NYC does), you need the documentation and historical data an independent auditor will ask for, not a marketing slide.

2) Clear transparency artifacts (your “transparency report”)

Ask vendors (and internal teams) for a repeatable set of artifacts:

- What the tool is used for (screening, ranking, scheduling, etc.)

- What data it uses and does not use

- How scoring works at a high level

- How humans oversee and override outcomes

- What gets logged and retained
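One way to keep those artifacts repeatable is to treat the transparency report as a structured record with required fields, so an incomplete report is caught before it is published or filed. The sketch below is a hypothetical schema that mirrors the five items above. The field names and sample values are illustrative, not a regulatory template or a vendor format.

```python
import json
from dataclasses import dataclass, asdict

@dataclass
class TransparencyReport:
    # Hypothetical fields mirroring the checklist; adapt to your own governance template.
    tool_name: str
    used_for: list          # e.g. ["screening", "ranking", "scheduling"]
    data_used: list
    data_not_used: list
    scoring_summary: str    # plain-language, high-level description
    human_oversight: str    # who reviews, who can override, how overrides are recorded
    logged_events: list
    retention_days: int

    def missing_fields(self):
        """Names of fields that were left empty."""
        return [name for name, value in asdict(self).items() if value in ("", [], None)]

    def to_json(self):
        gaps = self.missing_fields()
        if gaps:
            raise ValueError(f"transparency report is incomplete: {gaps}")
        return json.dumps(asdict(self), indent=2)

report = TransparencyReport(
    tool_name="Example screening assistant",
    used_for=["screening", "ranking"],
    data_used=["resume text", "screening question answers"],
    data_not_used=["photos", "age", "home address"],
    scoring_summary="Weighted match against job-specific criteria set by the hiring team.",
    human_oversight="",
    logged_events=["score generated", "recruiter override"],
    retention_days=730,  # illustrative value, not a legal retention requirement
)
print(report.missing_fields())  # ['human_oversight']
```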

3) Ongoing fairness monitoring dashboards (not a once-a-year event)

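Audits are a point-in-time view. Real risk comes from drift: new job families, changing labor markets, and shifting applicant pools. Your TA suite should support ongoing monitoring, such as recalculating selection rates by demographic category and job family on a regular schedule.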
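Monitoring drift can reuse the same selection-rate arithmetic a bias audit uses, recomputed per period instead of once a year. The sketch below groups outcomes by period (for example, a month) and flags categories whose impact ratio (selection rate divided by the highest category’s rate) falls below a review threshold. Two assumptions to note. The 0.8 default borrows the “four-fifths” rule of thumb from the federal Uniform Guidelines on Employee Selection Procedures, which is a screening heuristic rather than a legal safe harbor. The minimum group size of 30 is an arbitrary illustrative value that skips groups too small to interpret. Both should be set with your legal and analytics teams.

```python
from collections import defaultdict

def flag_drift(records, threshold=0.8, min_group_size=30):
    """records: iterable of (period, category, was_selected) tuples.
    Returns {period: {category: impact_ratio}} for every ratio below threshold."""
    # period -> category -> [selected_count, total_count]
    counts = defaultdict(lambda: defaultdict(lambda: [0, 0]))
    for period, category, was_selected in records:
        tally = counts[period][category]
        tally[1] += 1
        if was_selected:
            tally[0] += 1

    flags = {}
    for period in sorted(counts):
        # Skip groups too small for a stable rate (illustrative cutoff).
        rates = {cat: sel / tot for cat, (sel, tot) in counts[period].items()
                 if tot >= min_group_size}
        best = max(rates.values(), default=0)
        if best == 0:
            continue  # nothing to compare in this period
        low = {cat: rate / best for cat, rate in rates.items() if rate / best < threshold}
        if low:
            flags[period] = low
    return flags

# Illustrative data: group_b's selection rate drops sharply in the second period.
records = []
for period, a_selected, b_selected in [("2025-01", 50, 45), ("2025-02", 50, 25)]:
    records += [(period, "group_a", i < a_selected) for i in range(100)]
    records += [(period, "group_b", i < b_selected) for i in range(100)]
print(flag_drift(records))  # {'2025-02': {'group_b': 0.5}}
```

A flag here is a prompt for human review of that job family and period, not a finding of discrimination on its own.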

4) Human oversight that is real—and recorded

“Human-in-the-loop” must mean recruiters can review and override AI recommendations, with each decision, and the reason for any override, documented.
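A simple way to make oversight “real and recorded” is to refuse to save an override that has no named reviewer or no reason. The sketch below assumes a hypothetical record_review helper and a simple advance/reject recommendation. A production system would write these records to its audit trail rather than just returning them.

```python
from dataclasses import dataclass
from datetime import datetime, timezone

@dataclass(frozen=True)
class ReviewDecision:
    candidate_id: str
    ai_recommendation: str   # e.g. "advance" or "reject"
    final_decision: str
    reviewer_id: str
    reason: str
    decided_at: str          # UTC timestamp in ISO 8601 format

    @property
    def is_override(self):
        return self.ai_recommendation != self.final_decision

def record_review(candidate_id, ai_recommendation, final_decision, reviewer_id, reason=""):
    """Hypothetical helper: a named human confirms or overrides the AI recommendation."""
    if not reviewer_id:
        raise ValueError("every decision needs an identified human reviewer")
    if ai_recommendation != final_decision and not reason.strip():
        raise ValueError("overrides must include a documented reason")
    return ReviewDecision(candidate_id, ai_recommendation, final_decision,
                          reviewer_id, reason.strip(),
                          datetime.now(timezone.utc).isoformat())

kept = record_review("c-001", "advance", "advance", reviewer_id="recruiter-17")
changed = record_review("c-002", "reject", "advance", reviewer_id="recruiter-17",
                        reason="Relevant certification listed under a nonstandard title.")
print(kept.is_override, changed.is_override)  # False True
```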

5) Record-keeping and audit trails that stand up under scrutiny

This is where many teams break down: decisions happen across emails, spreadsheets, ATS notes, and disconnected tools. A single, time-stamped audit trail of AI outputs and human actions reduces that risk.
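Part of what makes a trail “stand up under scrutiny” is that it is append-only and tamper-evident. One common technique, shown here as a minimal in-memory sketch rather than a production design, is hash chaining: each entry stores the SHA-256 hash of the previous entry, so editing or deleting an earlier record breaks verification. The AuditTrail class and its event fields are hypothetical. Hash chaining only detects changes if the latest hash is also kept somewhere the editor cannot rewrite (for example, write-once storage or a separate system), because anyone who can rewrite the whole log can recompute every hash.

```python
import hashlib
import json
from datetime import datetime, timezone

class AuditTrail:
    """Append-only, in-memory log; each entry carries the hash of the entry before it."""

    GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

    def __init__(self):
        self.entries = []

    def append(self, actor, action, details):
        prev_hash = self.entries[-1]["hash"] if self.entries else self.GENESIS
        entry = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "actor": actor,
            "action": action,
            "details": details,
            "prev_hash": prev_hash,
        }
        entry["hash"] = self._digest(entry)
        self.entries.append(entry)
        return entry

    @staticmethod
    def _digest(entry):
        # Hash a canonical JSON form of every field except the hash itself.
        payload = {k: v for k, v in entry.items() if k != "hash"}
        canonical = json.dumps(payload, sort_keys=True, separators=(",", ":"))
        return hashlib.sha256(canonical.encode("utf-8")).hexdigest()

    def verify(self):
        """True if every entry is unmodified and correctly linked to its predecessor."""
        prev = self.GENESIS
        for entry in self.entries:
            if entry["prev_hash"] != prev or entry["hash"] != self._digest(entry):
                return False
            prev = entry["hash"]
        return True

trail = AuditTrail()
trail.append("ai-screener", "score_generated", {"candidate_id": "c-002", "score": 0.48})
trail.append("recruiter-17", "override", {"candidate_id": "c-002", "to": "advance"})
print(trail.verify())  # True
trail.entries[0]["details"]["score"] = 0.9  # simulate an after-the-fact edit
print(trail.verify())  # False
```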

6) Align to a governance framework (so this isn’t reinvented every quarter)

A useful starting point is the NIST AI Risk Management Framework, which is built around governing, mapping, measuring, and managing AI risk across the lifecycle.
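In practice, aligning to the framework can start as a simple control register that tags each hiring-AI control with one of the four AI RMF core functions (Govern, Map, Measure, Manage) and checks that none is left uncovered. The controls, owners, and structure below are hypothetical examples, not NIST-provided content.

```python
# The four core functions named in the NIST AI RMF.
RMF_FUNCTIONS = ("Govern", "Map", "Measure", "Manage")

# Hypothetical control register for an AI screening tool; entries are illustrative.
CONTROLS = [
    {"id": "C1", "function": "Govern", "control": "Named owner and approval policy for AI screening", "owner": "HR Ops"},
    {"id": "C2", "function": "Map", "control": "Documented use cases, inputs, and excluded data", "owner": "TA"},
    {"id": "C3", "function": "Measure", "control": "Monthly selection-rate review by job family", "owner": "People Analytics"},
    {"id": "C4", "function": "Manage", "control": "Override and escalation procedure for flagged results", "owner": "TA"},
]

def coverage_gaps(controls, functions=RMF_FUNCTIONS):
    """Return the RMF functions that no control in the register covers."""
    covered = {c["function"] for c in controls}
    unknown = covered - set(functions)
    if unknown:
        raise ValueError(f"unknown RMF function(s): {sorted(unknown)}")
    return [f for f in functions if f not in covered]

print(coverage_gaps(CONTROLS))      # []
print(coverage_gaps(CONTROLS[:2]))  # ['Measure', 'Manage']
```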

Where CloudApper AI Recruiter fits in this new “explainable hiring” standard

CloudApper AI Recruiter is positioned as a multi-agent recruitment solution that screens, scores, and ranks candidates, while also supporting scheduling and candidate engagement.

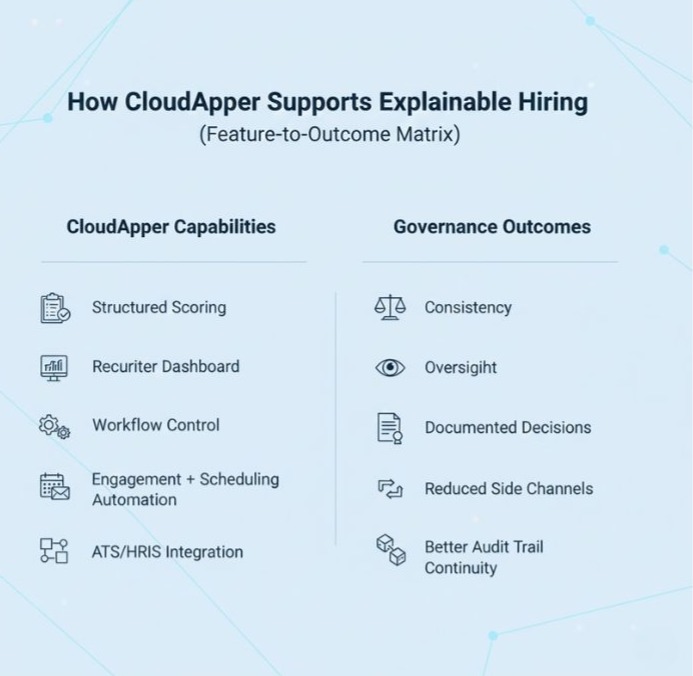

From an “explainable AI” and audit-readiness perspective, a few elements matter:

- Structured scoring + ranking with recruiter dashboards: CloudApper highlights candidate comparisons with scores and notes, plus dashboards that track hiring metrics—useful building blocks for transparent decision-making and internal reviews.

- Workflow control: Customizable workflows (via no-code configuration) help HR leaders standardize how AI is used across roles and locations—critical when regulators expect consistency and oversight.

- Candidate drop-off prevention: If 92% application drop-off is the reality many teams face, simplifying apply flows (QR code/text shortcode + conversational application) supports faster throughput without forcing recruiters into opaque automation.

- Engagement and scheduling automation: Coordinating calendars, invites, and reschedules inside a defined system reduces the “side channel” decision-making that often breaks audit trails.

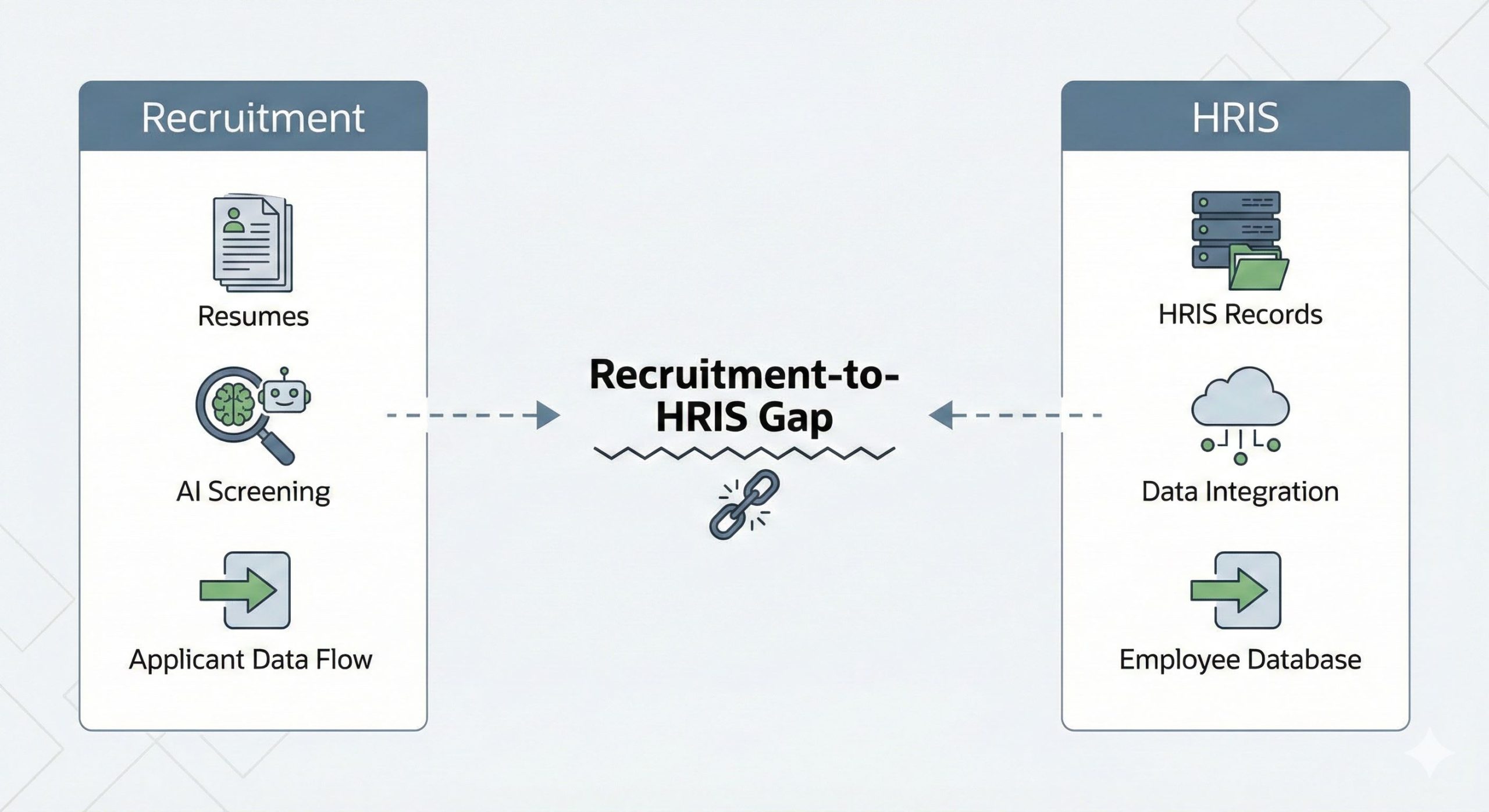

- Integration without disruption: CloudApper emphasizes working with existing ATS/HRIS systems without messy migrations, which helps teams consolidate documentation rather than creating new shadow processes.

The takeaway: as oversight tightens, the goal isn’t to avoid AI. It’s to use AI in a way you can explain, monitor, and defend—with evidence, not promises.

What is the CloudApper AI Platform?

CloudApper AI is an advanced platform that enables organizations to integrate AI into their existing enterprise systems effortlessly, without the need for technical expertise, costly development, or upgrading the underlying infrastructure. By transforming legacy systems into AI-capable solutions, CloudApper allows companies to harness the power of Generative AI quickly and efficiently. This approach has been successfully implemented with leading systems like UKG, Workday, Oracle, Paradox, Amazon AWS Bedrock and can be applied across various industries, helping businesses enhance productivity, automate processes, and gain deeper insights without the usual complexities. With CloudApper AI, you can start experiencing the transformative benefits of AI today.
