CloudApper AI streamlines enterprise AI adoption with its user-friendly platform, versatile integration, and strong data security, empowering businesses to navigate AI complexities and stay ahead in the evolving tech landscape.

According to recent studies, 52 percent of companies have accelerated their AI adoption, a rapid shift toward advanced technological solutions. In the fast-moving world of corporate technology, integrating Large Language Models (LLMs) and generative AI has presented a complex mix of obstacles and opportunities. As businesses explore the nuances of these technologies, they face a bewildering array of new concepts and infrastructure options that shape the trajectory of their AI adoption.

Understanding the Intricacies of AI Development

The lexicon of AI keeps growing, with terms such as prompts, priming, embedding, tuning, chains, agents, extraction, summarization, alignment, and evaluation, all of which describe core functionality of LLMs and generative AI. Understanding these concepts, however, is only the first step. AI development also requires teams to continuously adopt and integrate a growing set of tools and infrastructure components, which demands ongoing learning and adaptability.

The Criticality of Technology Choices

The choice of operational infrastructure model, whether on-premises, cloud-based, or AI-as-a-service, is equally significant. Each model carries its own challenges and trade-offs that affect the efficiency, cost-effectiveness, and overall success of an AI implementation. Self-managed solutions offer greater control, while cloud-based and AI-as-a-service models provide greater scalability and flexibility, which makes this a strategic decision for every organization.

Unveiling the Realities of Enterprise-Grade AI Solutions

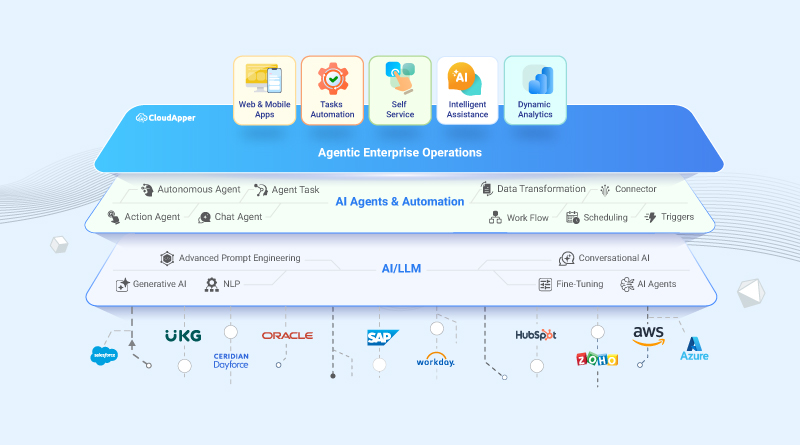

Despite the buzz surrounding AI, enterprise-grade LLM and generative AI solutions remain relatively scarce, which underscores how difficult they are to develop and integrate. Building dependable, secure, and regulatory-compliant AI products requires a deep understanding of the technology, careful integration with existing systems, and innovative ways to use natural language as an interface between humans and computers. The CloudApper AI platform is designed to deliver optimal performance by fine-tuning the model while safeguarding the privacy of your data. Corporate data is loaded automatically to train the AI/LLM, and a simple drag-and-drop designer makes defining a tailored user experience intuitive and efficient. Our AI platform also instantly enriches existing enterprise solutions with advanced AI capabilities, removing the development burden and the need for in-house AI expertise.

CloudApper AI: Paving the Way for Seamless Enterprise AI Adoption

Amidst these challenges, CloudApper AI emerges as a transformative solution, offering a comprehensive platform that simplifies enterprise AI solutions. With its user-friendly interface, versatile integration capabilities, and commitment to data security, CloudApper AI empowers organizations to navigate the complexities of AI adoption effortlessly.

Preparing for the AI Revolution

As the AI ecosystem evolves at an unprecedented rate, businesses must stay informed and be ready to adopt these disruptive technologies. By cultivating a culture of continuous learning, adaptation, and judicious risk-taking, with CloudApper AI as their partner, enterprises can place themselves at the vanguard of the AI revolution.

In the fast-developing field of AI, CloudApper AI is the catalyst for smooth, painless corporate AI adoption.

What is CloudApper AI Platform?

CloudApper AI is an advanced platform that enables organizations to integrate AI into their existing enterprise systems effortlessly, without the need for technical expertise, costly development, or infrastructure upgrades. By transforming legacy systems into AI-capable solutions, CloudApper allows companies to harness the power of generative AI quickly and efficiently. This approach has been successfully implemented with leading systems such as UKG, Workday, Oracle, Paradox, and Amazon AWS Bedrock, and it can be applied across various industries, helping businesses enhance productivity, automate processes, and gain deeper insights without the usual complexities. With CloudApper AI, you can start experiencing the transformative benefits of AI today.
