
AI hiring tools are transforming talent acquisition at breakneck speed, but with that power comes serious legal liability. As AI recruitment platforms process millions of applications, a growing number of companies are discovering that their automated hiring systems have become discrimination lawsuits waiting to happen.

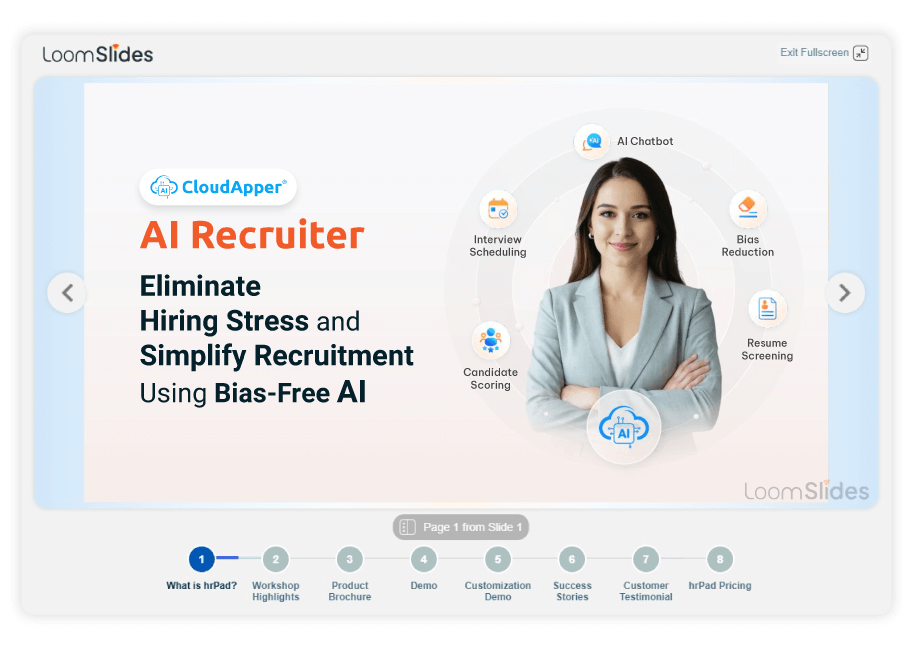


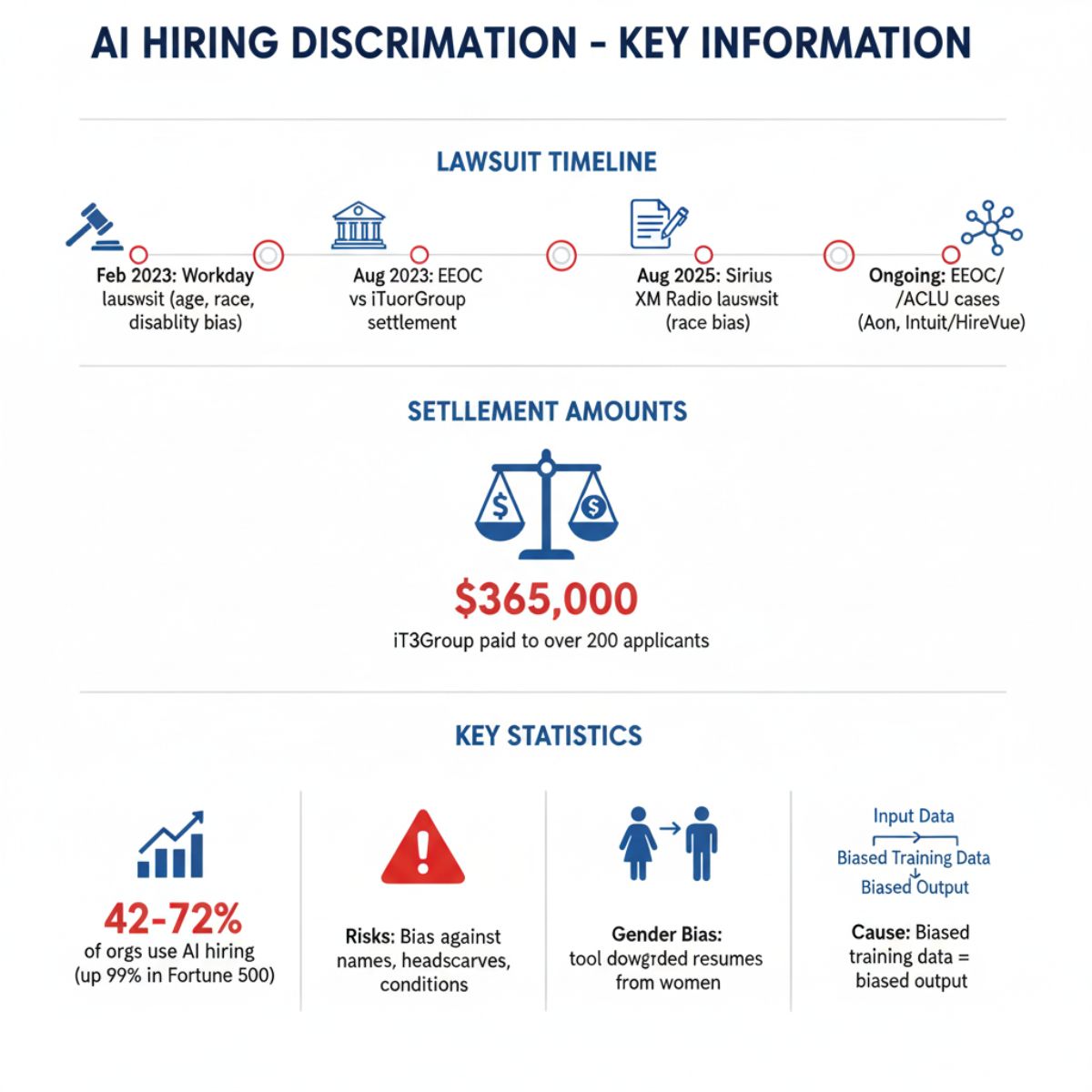

The statistics are alarming: AI bias in hiring has already resulted in the first-ever EEOC settlement of $365,000, with more cases emerging monthly. Machine learning recruitment tools that promised efficiency gains are now delivering legal nightmares to unprepared organizations.

The Monday Morning Nightmare That’s Becoming All Too Real

Sarah Martinez thought she had it all figured out. As VP of Talent at a fast-growing fintech company, she’d successfully implemented an AI-powered recruitment platform that cut screening time by 70% and processed 15,000 applications monthly. The executive team loved the efficiency gains. The recruiting team celebrated faster time-to-hire.

Then came the call from legal.

“Sarah, we need to talk. The EEOC just filed charges. They’re alleging our AI hiring system discriminated against candidates over 40. We’re looking at potential damages in the hundreds of thousands.”

Sarah’s story isn’t unique. It’s happening across boardrooms nationwide as AI hiring tools—deployed without proper safeguards—become legal liabilities that can cost companies their reputation, talent, and massive financial settlements.

When Algorithms Become Courtroom Evidence: Real Cases That Changed Everything

The $365,000 Settlement That Shook the Industry

In 2023, the Equal Employment Opportunity Commission reached its first-ever settlement in an AI hiring discrimination case. A tutoring company’s application software automatically rejected women applicants aged 55 or older and men aged 60 or older, resulting in a $365,000 settlement.

The devastating detail? According to the EEOC, the software was programmed to apply these age cutoffs, and it applied them automatically to every application it screened. What would have taken human recruiters years to accomplish in terms of discriminatory impact, the software achieved in months.

The Workday Watershed: When Vendors Become Co-Defendants

Derek Mobley’s lawsuit against Workday sent shockwaves through the HR tech industry. The suit alleges that Workday’s AI-based applicant recommendation system violated federal anti-discrimination laws through disparate impact based on race, age, and disability, and a federal judge allowed the age discrimination claims to proceed as a nationwide collective action.

The game-changing aspect? The court allowed the claims to go forward on the theory that the AI vendor, not just the employer using the tool, could be held liable for discriminatory outcomes as an agent of the employers it serves. If that reasoning holds, AI hiring companies can no longer hide behind “we just provide the technology” defenses.

The HireVue Accessibility Crisis

In March 2025, complaints were filed against Intuit and HireVue over biased AI hiring technology that allegedly works worse for deaf and non-white applicants. The case exposes a critical blind spot in AI development: equal performance across diverse populations.

These cases share a common thread—companies that viewed AI as a “set it and forget it” solution discovered they’d inherited algorithmic bias at unprecedented scale.

The Hidden Epidemic: How AI Amplifies Hiring Bias at Scale

The Multiplication Effect

Traditional hiring bias affected one candidate at a time. AI bias affects thousands simultaneously. Consider TechCorp’s experience:

- Pre-AI Era: Human bias might unfairly reject 10-15 qualified candidates monthly

- AI Implementation: Biased algorithm processed 8,000 applications monthly, systematically filtering out candidates with names suggesting certain ethnic backgrounds

- Six-Month Impact: 2,400 potentially qualified candidates excluded due to algorithmic bias

The math is sobering. AI doesn’t just perpetuate bias—it industrializes it.
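The arithmetic behind the TechCorp figures can be sketched in a few lines of Python. The inputs are the numbers quoted above; the exclusion rate and the scale multiplier are derived here for illustration and are not stated in the example itself.

```python
# Figures quoted in the TechCorp example above.
applications_per_month = 8_000
months = 6
excluded_by_ai = 2_400

# Derived values (not stated in the example; computed for illustration).
total_applications = applications_per_month * months            # 48,000
implied_exclusion_rate = excluded_by_ai / total_applications    # 0.05

# Pre-AI baseline from the same example: 10 to 15 unfair rejections a month.
human_low, human_high = 10 * months, 15 * months                # 60 to 90

# How many times larger the AI-driven impact is than the human baseline.
scale_low = excluded_by_ai / human_high
scale_high = excluded_by_ai / human_low

print(f"Applications screened: {total_applications:,}")
print(f"Implied exclusion rate: {implied_exclusion_rate:.1%}")
print(f"Scale vs. human baseline: {scale_low:.0f}x to {scale_high:.0f}x")
```

In other words, a filter that wrongly excludes only about one application in twenty still produces an impact roughly 27 to 40 times larger than the human baseline over the same six months.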

The Perfect Storm of 2026

Multiple factors have created an unprecedented risk environment:

Regulatory Acceleration: New York City’s Local Law 144 triggered a domino effect. California’s pending AI hiring regulations and the EU’s AI Act are creating a complex compliance landscape that changes monthly.

Legal Precedent Expansion: Courts are increasingly willing to hold both employers and AI vendors liable for discriminatory outcomes, expanding the scope of potential liability.

Public Awareness Surge: Job seekers now understand AI’s role in hiring decisions and are more likely to challenge unfair outcomes through legal channels.

The True Cost of AI Hiring Discrimination: Beyond Legal Fees

The Financial Avalanche

The visible costs are just the tip of the iceberg:

- Legal Defense: $75,000 average per employment discrimination case

- Settlements: $365,000 (and growing) for AI-specific cases

- Bias Audits: $50,000-$200,000 per AI system evaluation

- Remediation: $100,000+ to fix biased algorithms and retrain systems

The Invisible Business Impact

Talent Pipeline Destruction: When AI systems exclude qualified diverse candidates, companies lose access to 40-60% of available talent pools.

Innovation Deficit: Research consistently shows that diverse teams outperform homogeneous ones by 35% in profitability and 87% in decision-making effectiveness.

Employer Brand Damage: AI discrimination cases generate negative media coverage that can persist for years, deterring top candidates from applying.

Employee Morale Crisis: Current employees question the fairness of their own hiring process when discrimination cases emerge, leading to increased turnover.

The Compliance Wake-Up Call: What Legal Experts Are Telling HR Leaders

The New Legal Reality

Employment attorneys report a surge in AI hiring consultations. The message is consistent: “The legal landscape has fundamentally changed. AI tools are no longer neutral technologies—they’re decision-making systems that must comply with employment law.”

Key legal developments HR leaders must understand:

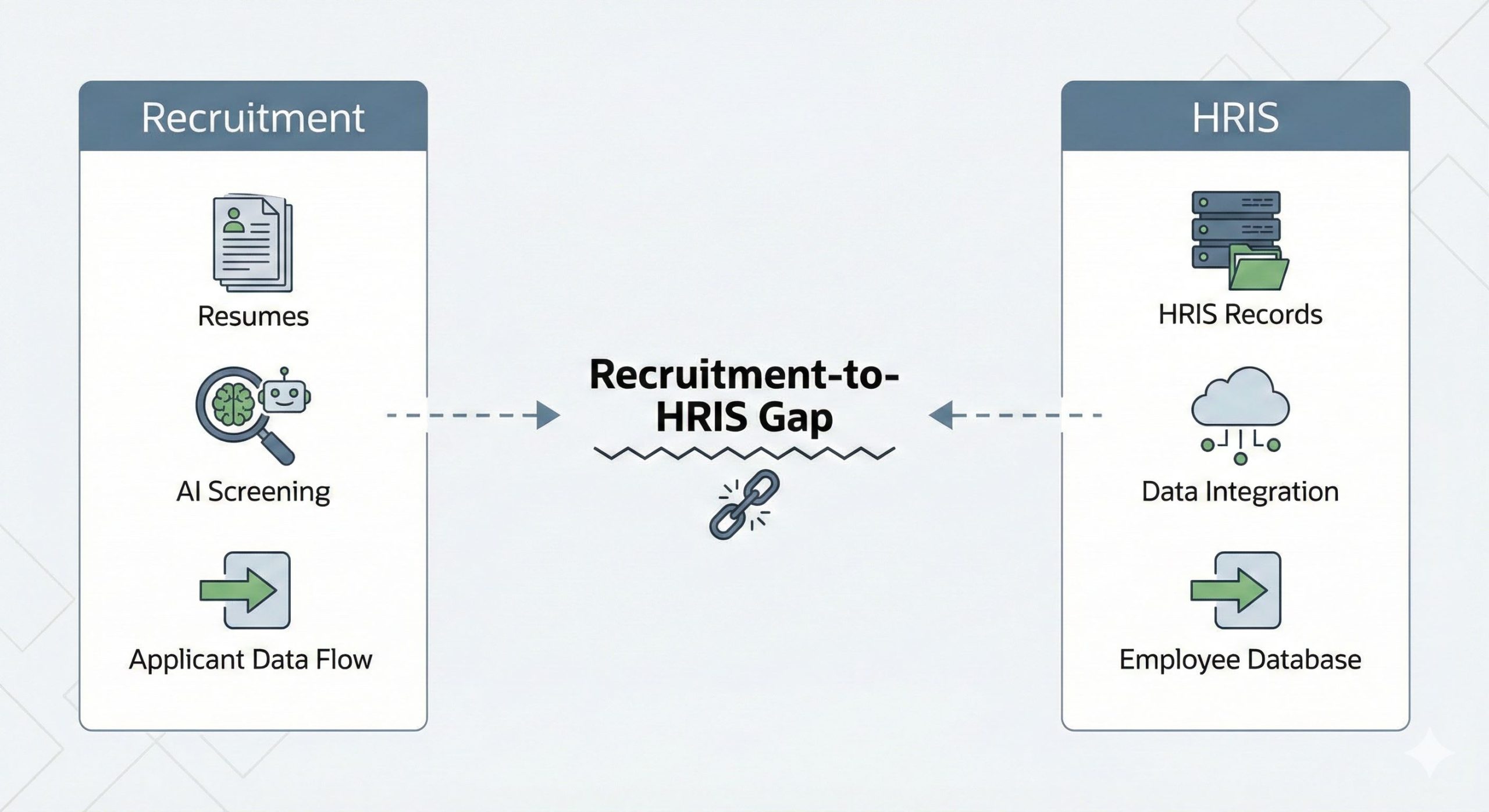

- Vendor Liability: AI companies can now be sued directly for discriminatory outcomes

- Audit Requirements: Bias audits are becoming legally mandatory in multiple jurisdictions

- Transparency Mandates: Candidates have growing legal rights to understand how AI affects their applications

- Reasonable Accommodation: AI systems must provide equal access for candidates with disabilities

The Compliance Framework That’s Emerging

Forward-thinking legal teams are implementing five-pillar compliance strategies:

- Pre-Implementation Auditing: Testing AI tools for bias before deployment

- Ongoing Monitoring: Continuous tracking of demographic outcomes

- Documentation Standards: Maintaining audit trails for all AI hiring decisions

- Human Oversight: Ensuring meaningful human involvement in AI-driven processes

- Transparency Protocols: Providing candidates clear information about AI use
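As a rough illustration of what the auditing and monitoring pillars can look like in practice, the sketch below computes selection rates per group and compares each to the highest rate. The 0.8 cutoff is an assumption borrowed from the "four-fifths" rule of thumb in the EEOC’s Uniform Guidelines, which this article does not otherwise prescribe; the group labels and counts are hypothetical, and a real audit would be scoped with counsel.

```python
from typing import Dict, List, Tuple

# Assumed screening threshold: the "four-fifths" rule of thumb.
# It is a heuristic for spotting possible adverse impact, not a safe harbor.
FOUR_FIFTHS = 0.8


def selection_rates(outcomes: Dict[str, Tuple[int, int]]) -> Dict[str, float]:
    """Map each group to selected / applicants, skipping empty groups."""
    return {g: sel / total for g, (sel, total) in outcomes.items() if total > 0}


def impact_ratios(outcomes: Dict[str, Tuple[int, int]]) -> Dict[str, float]:
    """Divide each group's selection rate by the highest group's rate."""
    rates = selection_rates(outcomes)
    best = max(rates.values(), default=0.0)
    if best == 0:
        return {}  # nobody was selected, so there is nothing to compare
    return {g: rate / best for g, rate in rates.items()}


def flagged_groups(outcomes: Dict[str, Tuple[int, int]],
                   threshold: float = FOUR_FIFTHS) -> List[str]:
    """Return groups whose impact ratio falls below the threshold."""
    return sorted(g for g, r in impact_ratios(outcomes).items() if r < threshold)


# Hypothetical audit data: group -> (candidates advanced, candidates screened).
audit = {"under_40": (120, 400), "40_and_over": (45, 300)}
print(impact_ratios(audit))
print(flagged_groups(audit))
```

Running the same check before deployment on test data and then on live outcomes at a regular cadence covers the first two pillars; storing each run’s inputs and results supports the documentation pillar.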

The Winning Strategy: Why Compliance-First AI Is Competitive Advantage

The Paradox of Restriction

Companies initially fear that compliance requirements will slow down AI adoption and reduce efficiency gains. The opposite is proving true. Organizations with robust AI governance frameworks are actually achieving better hiring outcomes.

Here’s why:

Bias-Free AI Finds Better Candidates: When AI systems focus purely on job-relevant criteria without demographic bias, they identify qualified candidates that traditional screening misses.

Transparency Builds Trust: Candidates prefer employers who are open about AI use over those who operate in secrecy.

Audit-Ready Systems Run Smoother: AI tools designed for compliance tend to be more reliable and produce more consistent results.

The CloudApper AI Recruiter Advantage: Compliance Built In, Not Bolted On

CloudApper AI Recruiter was architected specifically to address the compliance challenges that are destroying other AI hiring implementations:

Anonymous Evaluation Engine: The system automatically strips identifying information (names, addresses, graduation years, photos) before candidate assessment, focusing purely on qualifications and job-relevant criteria.

Explainable AI Decisions: Every candidate ranking includes clear documentation of the factors that influenced the score, making bias audits straightforward and defensible.
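To make the two ideas above concrete, here is a minimal Python sketch of blind evaluation followed by a score that carries its own explanation. It is not CloudApper’s implementation: the field names, criteria, and weights are all hypothetical and chosen only to mirror the description.

```python
from dataclasses import dataclass
from typing import Any, Dict

# Hypothetical field names mirroring the identifiers listed above.
IDENTIFYING_FIELDS = {"name", "address", "graduation_year", "photo"}

# Hypothetical job-relevant criteria and weights, for illustration only.
WEIGHTS = {"years_experience": 2.0, "skills_matched": 5.0, "certifications": 3.0}


def anonymize(candidate: Dict[str, Any]) -> Dict[str, Any]:
    """Return a copy of the record without the identifying fields."""
    return {k: v for k, v in candidate.items() if k not in IDENTIFYING_FIELDS}


@dataclass
class ScoredCandidate:
    score: float
    factors: Dict[str, float]  # contribution of each criterion to the score


def score(candidate: Dict[str, Any]) -> ScoredCandidate:
    """Score only the anonymized record and keep the per-factor breakdown."""
    blind = anonymize(candidate)
    factors = {c: w * float(blind.get(c, 0)) for c, w in WEIGHTS.items()}
    return ScoredCandidate(score=sum(factors.values()), factors=factors)


applicant = {
    "name": "Jane Doe",
    "graduation_year": 1989,
    "years_experience": 12,
    "skills_matched": 4,
    "certifications": 1,
}
result = score(applicant)
print(result.score)    # total score
print(result.factors)  # what drove it, criterion by criterion
```

Removing fields does not remove proxies. In this sketch, `years_experience` still correlates with age, which is why anonymization is usually paired with outcome monitoring rather than treated as sufficient on its own.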

Multi-Jurisdictional Compliance: Built-in flexibility to adapt to New York City’s Local Law 144, California’s pending regulations, EU requirements, and emerging federal standards.

Human-AI Collaboration Interface: Recruiters maintain meaningful control over hiring decisions while leveraging AI insights, ensuring compliance with human oversight requirements.

Real-Time Bias Monitoring: Continuous tracking of demographic outcomes with automated alerts when patterns suggest potential bias issues.
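One way to picture continuous monitoring with alerts is a sliding window over recent screening decisions. The sketch below is an assumption-laden illustration, not the product’s actual mechanism: the class name, window size, minimum sample size, and 0.8 threshold are all hypothetical.

```python
from collections import Counter, deque
from typing import Deque, List, Tuple


class BiasMonitor:
    """Track recent decisions and report groups with a low relative pass rate."""

    def __init__(self, window: int = 1000, threshold: float = 0.8,
                 min_samples: int = 30) -> None:
        # Only the most recent `window` decisions are kept.
        self.events: Deque[Tuple[str, bool]] = deque(maxlen=window)
        self.threshold = threshold
        self.min_samples = min_samples

    def record(self, group: str, selected: bool) -> List[str]:
        """Add one decision; return groups currently below the threshold."""
        self.events.append((group, selected))
        totals = Counter(g for g, _ in self.events)
        passed = Counter(g for g, s in self.events if s)
        # Ignore groups with too few decisions to give a stable rate.
        rates = {g: passed[g] / n for g, n in totals.items()
                 if n >= self.min_samples}
        if len(rates) < 2:
            return []
        best = max(rates.values())
        if best == 0:
            return []
        return sorted(g for g, r in rates.items() if r / best < self.threshold)


# Hypothetical stream of decisions for two groups.
monitor = BiasMonitor(window=200, min_samples=10)
for i in range(20):
    monitor.record("group_a", i % 2 == 0)           # half advance
alerts: List[str] = []
for i in range(20):
    alerts = monitor.record("group_b", i % 10 == 0)  # one in ten advance
print(alerts)
```

Recomputing rates on every decision is simple but costs time proportional to the window size; a production system would more likely keep running counts and route alerts to a reviewer instead of returning them to the caller.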

The ROI of Getting Responsible AI Right

Quantified Benefits of Compliance-First AI

Organizations implementing responsible AI hiring practices report measurable improvements:

- 25% improvement in candidate quality through bias-free evaluation

- 40% increase in diverse hiring without sacrificing qualifications

- 60% reduction in time-to-hire while maintaining compliance standards

- Zero legal challenges related to AI hiring discrimination

The Competitive Moat

As bias audits become standard practice in vendor selection, AI solutions that can pass rigorous compliance evaluations are capturing disproportionate market share. Industry analysts predict that by Q4 2026, compliance capabilities will be the primary differentiator in AI hiring tool selection.

Don’t Wait for Your $365,000 Wake-Up Call

Every day you operate AI hiring tools without proper safeguards is another day of potential liability. The legal precedents are set. The regulations are expanding. The question isn’t whether compliance requirements will reach your organization—it’s whether you’ll be prepared when they do.

Take action now: Implement AI hiring solutions designed from the ground up for transparency, fairness, and regulatory compliance. Your legal team, your candidates, and your bottom line will thank you.

Ready to Transform Your Hiring with Responsible AI?

CloudApper AI Recruiter provides the power of advanced AI recruitment with built-in compliance safeguards that protect your organization from legal risks while improving hiring outcomes.

Schedule your compliance-focused demo today and discover why industry leaders choose AI solutions designed for the regulated world we live in.

What is CloudApper AI Platform?

CloudApper AI is an advanced platform that enables organizations to integrate AI into their existing enterprise systems without technical expertise, costly development, or upgrades to the underlying infrastructure. By transforming legacy systems into AI-capable solutions, CloudApper allows companies to harness the power of Generative AI quickly and efficiently. This approach has been successfully implemented with leading systems like UKG, Workday, Oracle, Paradox, and Amazon AWS Bedrock, and it can be applied across various industries, helping businesses enhance productivity, automate processes, and gain deeper insights without the usual complexities. With CloudApper AI, you can start experiencing the transformative benefits of AI today.