AI hiring transparency is now a legal requirement in a growing number of U.S. states. This guide explains Illinois HB 3773 and Texas TRAIGA, what employers must disclose, how bias audits work, and how HR teams can stay compliant without slowing hiring.


AI is no longer a background tool in hiring. In 2026, it is a regulated decision-support system with legal consequences. Employers in a growing number of U.S. states must now understand how AI influences hiring decisions, disclose its use, and ensure it does not produce discriminatory outcomes. This shift is sometimes called the AI compliance cliff, and it is driven by new state-level mandates focused on AI hiring transparency.

Two laws are shaping this new reality: Illinois House Bill 3773 and the Texas Responsible AI Governance Act (TRAIGA). While they differ in scope, both signal a clear direction for employers. If AI is used in hiring, it must be explainable, auditable, and defensible.

This article explains what AI hiring transparency means in practice, how these laws affect employers, and how HR teams can comply without slowing down hiring operations.

What is AI hiring transparency?

AI hiring transparency means employers must clearly disclose when AI is used in hiring decisions and be able to explain how that AI influences outcomes. This includes tools that screen resumes, rank candidates, ask automated screening questions, or assist recruiters in decision-making.

Transparency does not require employers to reveal proprietary algorithms. It requires them to understand and document:

- What the AI does
- What data it uses
- Where it influences decisions
- How bias risks are monitored and controlled

What does Illinois HB 3773 require from employers using AI?

Illinois HB 3773 amends the Illinois Human Rights Act and takes effect January 1, 2026. It focuses on AI hiring disclosure and discriminatory impact, not just intent.

Under this law:

- Employers must notify candidates when AI is used in employment-related decisions
- Employers may not use AI that results in discriminatory outcomes against protected classes
- Liability can exist even if discrimination is unintentional

This is why phrases like “black box AI ban” are appearing in Illinois hiring compliance discussions. The law does not use that phrase, but its practical effect is clear: if an AI system cannot be audited or explained, it becomes difficult to defend.

For HR teams, Illinois HB 3773 shifts compliance from policy language to operational reality. It is no longer enough to trust that a vendor’s AI is fair. Employers must be able to show it.

Does Texas TRAIGA apply to private-sector hiring?

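Texas TRAIGA takes a different approach. It establishes statewide rules for responsible AI use, enforced by the Texas Attorney General, but the enacted version is narrower than early proposals suggested. For private employers, its core restriction targets AI deployed with intent to unlawfully discriminate, a higher bar than Illinois’s focus on discriminatory effect.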

For HR teams, the key question is not whether TRAIGA mirrors Illinois exactly. The real question is whether AI systems used in hiring could materially affect a person’s access to opportunity. If they do, transparency and documentation become essential.

This is why TRAIGA compliance is now part of HR vendor evaluation conversations. Even where laws are narrower, enforcement trends are moving toward accountability.

Are black box AI hiring tools allowed?

In practice, black box AI hiring tools are becoming a liability. Even when not explicitly banned, systems that cannot explain their outputs create compliance risk.

Employers are increasingly expected to demonstrate:

- What factors influenced AI recommendations
- Whether protected characteristics were excluded
- How outcomes are monitored for bias

This is where explainable AI hiring tools become critical. Explainability allows HR teams to respond to audits, candidate inquiries, and internal reviews without relying on vague assurances.

What bias audit requirements apply to AI recruitment in 2026?

Bias audits are central to AI recruitment compliance in 2026. Illinois in particular focuses on discriminatory effects, which means outcomes matter more than intent.

A practical bias audit approach includes:

- Reviewing selection rates across protected groups
- Monitoring changes when screening criteria or models change
- Documenting remediation steps when disparities appear
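To make the selection-rate review concrete, here is a minimal Python sketch, assuming you can export, for each group, how many candidates an AI-supported step screened and how many it advanced. It computes each group's selection rate and compares it with the highest-rate group. The 0.8 cutoff is an assumption borrowed from the four-fifths rule of thumb in the federal Uniform Guidelines on Employee Selection Procedures; neither Illinois HB 3773 nor TRAIGA is claimed here to prescribe it. The group names and counts are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class GroupOutcome:
    """Screening counts for one group in one job family (hypothetical data)."""
    group: str
    screened: int  # candidates evaluated by the AI-supported step
    advanced: int  # candidates who moved forward after that step


def selection_rates(outcomes: list[GroupOutcome]) -> dict[str, float]:
    """Selection rate per group: advanced / screened."""
    return {
        o.group: o.advanced / o.screened
        for o in outcomes
        if o.screened > 0  # skip empty groups to avoid dividing by zero
    }


def impact_ratios(rates: dict[str, float]) -> dict[str, float]:
    """Each group's selection rate divided by the highest group's rate."""
    if not rates:
        return {}
    top = max(rates.values())
    if top == 0:
        return {group: 1.0 for group in rates}  # nobody advanced; no disparity to measure
    return {group: rate / top for group, rate in rates.items()}


def flag_disparities(outcomes: list[GroupOutcome], threshold: float = 0.8) -> list[str]:
    """Return groups whose impact ratio falls below the threshold.

    ASSUMPTION: 0.8 follows the four-fifths rule of thumb. It is a
    trigger for follow-up review, not a legal safe harbor.
    """
    ratios = impact_ratios(selection_rates(outcomes))
    return sorted(group for group, ratio in ratios.items() if ratio < threshold)


if __name__ == "__main__":
    # Hypothetical counts for one job family and one review period.
    data = [
        GroupOutcome("group_a", screened=200, advanced=60),
        GroupOutcome("group_b", screened=150, advanced=30),
        GroupOutcome("group_c", screened=100, advanced=28),
    ]
    print(selection_rates(data))
    print(flag_disparities(data))  # → ['group_b']
```

A flagged group is a signal to investigate and document remediation, not proof of discrimination. Ratios from small groups can swing widely, which is one reason to review them alongside the raw counts.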

Meeting these bias audit requirements does not take an advanced data science team. It takes consistency, documentation, and accountability.

How can employers avoid AI hiring fines in 2026?

Avoiding fines starts with understanding where AI touches your hiring process. Most compliance failures happen because AI is embedded quietly, not because employers acted recklessly.

Effective strategies for avoiding AI hiring fines in 2026 include:

- Mapping AI usage across the hiring workflow
- Implementing clear AI hiring disclosure notices
- Choosing vendors that support auditability
- Keeping human oversight in decision-making
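Mapping AI usage and checking disclosure coverage can start as a simple inventory. The Python sketch below records each hiring stage and flags stages where AI influences who moves forward but no candidate notice or human review is in place. The stage names, tools, and fields are hypothetical, and the scoping rule (any AI that affects advancement is in scope) follows this article's guidance rather than statutory text.

```python
from dataclasses import dataclass


@dataclass
class HiringStage:
    """One step in the hiring workflow (fields are hypothetical)."""
    name: str
    tool: str
    uses_ai: bool
    affects_advancement: bool  # does the tool influence who moves forward?
    disclosed_to_candidates: bool
    human_review: bool


def compliance_gaps(stages: list[HiringStage]) -> dict[str, list[str]]:
    """Map each in-scope stage to the controls it is missing."""
    gaps: dict[str, list[str]] = {}
    for stage in stages:
        # In scope: any AI that influences who moves forward.
        if not (stage.uses_ai and stage.affects_advancement):
            continue
        missing = []
        if not stage.disclosed_to_candidates:
            missing.append("candidate disclosure")
        if not stage.human_review:
            missing.append("human review")
        if missing:
            gaps[stage.name] = missing
    return gaps


if __name__ == "__main__":
    workflow = [
        HiringStage("job posting", "AI writing assistant", True, False, False, True),
        HiringStage("resume screen", "ATS ranking add-on", True, True, False, True),
        HiringStage("pre-screen chat", "screening chatbot", True, True, True, False),
        HiringStage("final interview", "hiring panel", False, True, False, True),
    ]
    for stage, missing in compliance_gaps(workflow).items():
        print(f"{stage}: missing {', '.join(missing)}")
```

With the sample workflow, the resume screen is flagged for a missing disclosure and the pre-screen chat for missing human review; the job-posting assistant is skipped because it does not filter candidates.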

Transparency reduces risk because it removes surprises. Regulators and courts respond more favorably to documented processes than to unexplained automation.

How should HR teams evaluate AI recruiting vendors for compliance?

Vendor selection is now a compliance decision. HR teams should evaluate whether vendors support AI hiring transparency, not just efficiency.

A strong vendor compliance checklist for 2026 should ask:

- Can the vendor explain how their AI evaluates candidates?
- Does the system log decisions and changes?
- Can outcomes be reviewed by job, location, or demographic group?
- Is human review part of the workflow?
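The logging and human-review questions are easier to assess when you know what a usable record might contain. The sketch below assumes a hypothetical schema, not any vendor's actual format: each screening event is appended as one JSON line with the AI recommendation, the model or rules version, the factors the tool reported, and the recruiter's final decision. The log is append-only by convention only; a production system would also need access controls and a retention policy.

```python
import json
import os
import tempfile
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class ScreeningDecision:
    """One auditable screening event (hypothetical schema, not a vendor's)."""
    candidate_id: str
    requisition_id: str
    model_version: str        # model or rule set that produced the recommendation
    ai_recommendation: str    # e.g. "advance" or "hold"
    factors: tuple[str, ...]  # criteria the tool reported as influential
    reviewer: str             # recruiter who made the final call
    final_decision: str
    timestamp: str            # ISO 8601, UTC


def record(log_path: str, decision: ScreeningDecision) -> None:
    """Append one JSON line; this function never rewrites earlier lines."""
    with open(log_path, "a", encoding="utf-8") as f:
        f.write(json.dumps(asdict(decision)) + "\n")


def override_rate(log_path: str) -> float:
    """Share of logged decisions where the reviewer departed from the AI."""
    if not os.path.exists(log_path):
        return 0.0
    with open(log_path, encoding="utf-8") as f:
        entries = [json.loads(line) for line in f if line.strip()]
    if not entries:
        return 0.0
    overrides = sum(e["final_decision"] != e["ai_recommendation"] for e in entries)
    return overrides / len(entries)


if __name__ == "__main__":
    path = os.path.join(tempfile.mkdtemp(), "screening_log.jsonl")
    now = datetime.now(timezone.utc).isoformat()
    record(path, ScreeningDecision("c-001", "req-42", "rules-v3", "hold",
                                   ("missing certification",), "recruiter_1", "advance", now))
    record(path, ScreeningDecision("c-002", "req-42", "rules-v3", "advance",
                                   ("availability match",), "recruiter_1", "advance", now))
    print(override_rate(path))  # → 0.5
```

Tracking how often recruiters override the AI is one way to show that human review is real rather than a rubber stamp.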

This is where compliance credibility is built.

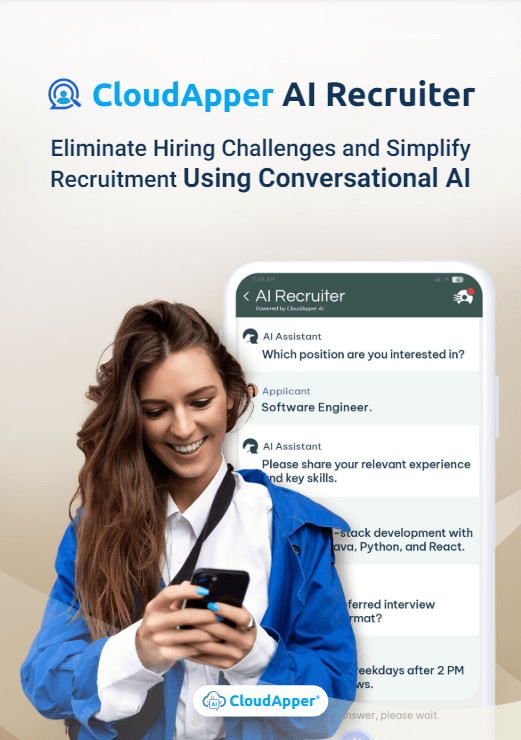

Where CloudApper AI Recruiter fits into AI hiring transparency

CloudApper AI Recruiter is best understood as a system that helps operationalize transparency rather than replace human judgment.

From a compliance standpoint, its value lies in:

- Standardized, conversational candidate screening that can be disclosed clearly
- Structured qualification assessment instead of opaque filtering
- Integration with existing ATS and HCM systems, preserving a single system of record
- Documented workflows that reduce ad-hoc decision-making

Because CloudApper AI Recruiter supports consistent screening and recruiter oversight, it aligns well with explainable AI hiring requirements. It does not rely on hidden scoring logic that recruiters cannot interpret, which is critical when responding to audits or candidate inquiries.

For employers navigating Illinois HB 3773 AI hiring disclosure requirements, this kind of structured transparency reduces operational risk without adding unnecessary steps.

What AI hiring transparency means for HR leaders going forward

AI hiring transparency is not a one-time compliance project. It is an ongoing operating model. Laws will continue to evolve, but the direction is set.

Employers that treat transparency as a foundation rather than a burden will be better positioned to:

- Maintain hiring speed
- Build candidate trust
- Respond confidently to audits
- Reduce long-term legal exposure

In 2026 and beyond, the riskiest hiring stack is not the one that uses AI. It is the one that cannot explain itself.

Frequently Asked Questions

What does Illinois HB 3773 require for AI hiring disclosure in 2026?

Illinois HB 3773 requires employers to provide clear AI hiring disclosure when AI is used to support hiring decisions. In practice, you should tell candidates when AI is involved in screening or evaluation, document where AI influences decisions, and be prepared to show that the process does not create discriminatory outcomes.

Does Texas TRAIGA apply to private employers using AI in hiring?

Texas TRAIGA focuses on responsible AI governance and enforcement across certain AI uses. If you are a private employer, the safest approach is to treat AI hiring transparency and documentation as required operational controls, especially when AI could materially influence hiring outcomes or candidate access to opportunity.

Is there a black box AI ban for Illinois hiring tools?

Illinois law does not contain an explicit “black box AI ban,” but the practical risk is similar. If you cannot explain or audit how an AI hiring tool influences decisions, it becomes difficult to defend under Illinois HB 3773, because you may not be able to show that the system is not driving discriminatory outcomes.

What counts as AI use in hiring decisions for employers?

AI use in hiring decisions can include resume screening algorithms, candidate ranking, automated interview scheduling decisions, chatbot-based pre-screening, and any tool that recommends, filters, or prioritizes candidates. If an AI system influences who moves forward in the process, you should treat it as in-scope for AI hiring transparency requirements.

What are the bias audit requirements for AI recruitment in 2026?

Bias audit requirements for AI recruitment in 2026 typically mean reviewing outcomes to ensure the AI-supported process does not disproportionately screen out protected groups. A practical bias audit includes checking selection rates by job family, monitoring changes after workflow updates, and documenting corrective actions when disparities appear.

How often should employers conduct AI hiring bias audits?

Many employers choose a quarterly review cadence for AI hiring bias audits, with additional reviews when they change screening criteria, update models, adjust scoring rules, or introduce new hiring workflows. The best schedule is one you can follow consistently and document clearly.
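The cadence described above, routine quarterly reviews plus off-cycle reviews after changes, can be written as a simple check. In this Python sketch the 92-day interval standing in for a quarter and the trigger-event labels are assumptions for illustration; substitute the schedule your organization actually documents.

```python
from datetime import date, timedelta

# ASSUMPTION: roughly one quarter between routine reviews.
ROUTINE_INTERVAL = timedelta(days=92)

# Changes that trigger an off-cycle review (hypothetical labels).
TRIGGER_EVENTS = {
    "screening_criteria_changed",
    "model_updated",
    "scoring_rules_adjusted",
    "new_workflow_introduced",
}


def review_due(last_review: date, today: date,
               events_since_review: list[str]) -> tuple[bool, str]:
    """Return (due, reason) for the next bias audit."""
    triggered = sorted(set(events_since_review) & TRIGGER_EVENTS)
    if triggered:
        return True, "change-triggered: " + ", ".join(triggered)
    if today - last_review >= ROUTINE_INTERVAL:
        return True, "routine quarterly review"
    return False, "not due"


if __name__ == "__main__":
    print(review_due(date(2026, 1, 5), date(2026, 2, 10), ["model_updated"]))
    # → (True, 'change-triggered: model_updated')
    print(review_due(date(2026, 1, 5), date(2026, 4, 10), []))
    # → (True, 'routine quarterly review')
    print(review_due(date(2026, 1, 5), date(2026, 2, 10), []))
    # → (False, 'not due')
```

Returning a reason string alongside the flag makes it easy to record why each audit was run, which supports the documentation this section recommends.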

How can employers comply with AI hiring transparency laws without slowing hiring?

You can comply with AI hiring transparency laws by mapping where AI touches your hiring workflow, adding plain-language AI hiring disclosure notices in the application flow, standardizing screening questions, and using vendor tools that provide auditable records. The goal is to make transparency part of the process rather than an extra step.

What should an AI recruiting vendor audit compliance checklist include in 2026?

A vendor AI audit compliance checklist in 2026 should include whether the vendor can explain how the AI evaluates candidates, what data fields it uses, how changes are logged, how outcomes can be reviewed for bias, and whether recruiters can override or review AI outputs. You should also confirm how records are retained for compliance requests.

What are the best 2026 AI hiring fine avoidance strategies for HR teams?

The most effective strategies for avoiding AI hiring fines in 2026 include documenting AI use across the hiring process, delivering consistent AI hiring disclosure notices, running regular outcome reviews, and selecting vendors whose explainable AI hiring tools support audit trails. The more of your process you can show, the lower your risk when questions arise.

How do explainable AI hiring tools help employers meet compliance requirements?

Explainable AI hiring tools help employers meet compliance requirements by making it possible to describe what factors influenced a recommendation, how the process is monitored, and how recruiters maintain oversight. This reduces reliance on vague vendor claims and improves your ability to respond to audits or candidate inquiries.

How does CloudApper AI Recruiter support auditable AI recruiting workflows?

CloudApper AI Recruiter can support auditable AI recruiting workflows by standardizing pre-screening interactions, keeping candidate communication consistent, and integrating with your ATS or HCM system so the hiring record remains centralized. This makes AI hiring transparency easier to operationalize because you can document what was asked, what was captured, and how recruiters guided next steps.

What is the difference between AI hiring transparency and AI hiring compliance?

AI hiring transparency focuses on disclosure and explainability, meaning you can clearly communicate when AI is used and how it influences decisions. AI hiring compliance includes transparency plus outcome monitoring, bias auditing practices, documentation, and vendor governance so the process can be defended if challenged.

What is the CloudApper AI Platform?

CloudApper AI is an advanced platform that enables organizations to integrate AI into their existing enterprise systems effortlessly, without the need for technical expertise, costly development, or upgrading the underlying infrastructure. By transforming legacy systems into AI-capable solutions, CloudApper allows companies to harness the power of Generative AI quickly and efficiently. This approach has been successfully implemented with leading systems like UKG, Workday, Oracle, Paradox, Amazon AWS Bedrock and can be applied across various industries, helping businesses enhance productivity, automate processes, and gain deeper insights without the usual complexities. With CloudApper AI, you can start experiencing the transformative benefits of AI today.

CloudApper AI Solutions for HR

- Works with

- and more.

Similar Posts

How cNPS Reflects Your Recruitment and Employer Brand

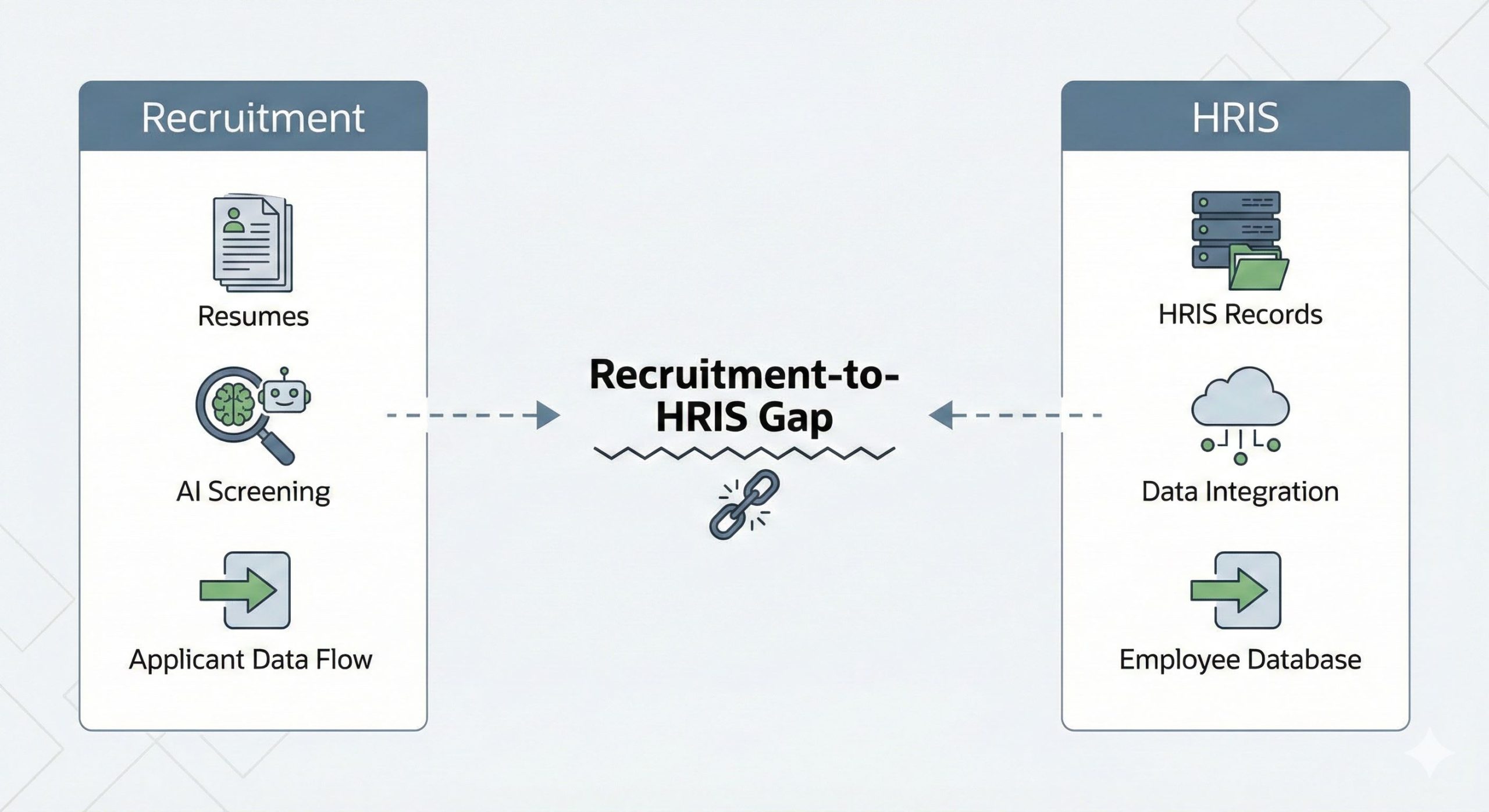

The Recruitment-to-HRIS Gap: Why Your “System of Record” Should Start…