Standard AI resume screeners often disadvantage frontline candidates who lack polished resumes or the expected keywords. CloudApper’s conversational AI reduces that bias by assessing skills through natural chat/SMS interviews, which supports fairer evaluation. Result: 76% faster hiring, 6x more applicants, less unconscious bias, and stronger, more diverse teams.

Your AI resume screening tool just rejected 200 qualified candidates. The nursing assistant with 15 years of experience didn’t list “Microsoft Office” on her resume. The retail associate who thrived at Target for five years had a two-month employment gap. The manufacturing operator with perfect safety records worked at a company your algorithm didn’t recognize. All excellent candidates. All filtered out before a human ever saw them.

This is automation bias in action, and it’s quietly undermining hiring quality across healthcare, retail, and manufacturing organizations that have embraced AI-powered resume screening. While these tools promise efficiency gains for high-volume hiring, they’re simultaneously creating a dangerous over-reliance on algorithmic decisions that don’t account for the nuanced reality of frontline work.

For talent acquisition professionals managing constant hiring needs across multiple locations, understanding and mitigating automation bias isn’t just an ethical consideration—it’s a competitive necessity that directly impacts your ability to build strong frontline teams.

TL;DR

Traditional AI resume screening tools rely on keywords and formatting, creating bias against hourly workers with non-traditional backgrounds. CloudApper AI Recruiter uses conversational screening via SMS/web chat to evaluate actual skills and experience fairly—reducing bias, cutting time-to-hire by 76%, expanding applicant pools 6x, and delivering better, more inclusive hires for frontline roles.

Understanding Automation Bias in Resume Screening

Automation bias occurs when humans place excessive trust in automated systems, accepting algorithmic recommendations without adequate scrutiny or critical evaluation. In recruitment, this manifests when recruiters and hiring managers defer to AI screening decisions without questioning whether the system’s logic aligns with actual job success factors.

The psychology is understandable. When you’re processing 500 applications per week for certified nursing assistant positions across ten facilities, AI screening feels like a lifeline. The algorithm works tirelessly, never complains, and produces neat lists of “qualified” and “unqualified” candidates. Over time, recruiters begin to trust these recommendations implicitly, rarely questioning why specific candidates were rejected or whether the criteria truly predict performance.

But here’s the problem: most AI resume screening tools were designed with corporate, white-collar hiring in mind. They prioritize factors like prestigious university degrees, linear career progression, specific keywords, and recognizable company names—criteria that have minimal correlation with success in frontline roles.

A retail associate doesn’t need a bachelor’s degree to provide exceptional customer service. A manufacturing operator’s ability to maintain safety protocols isn’t reflected in whether they worked at Fortune 500 companies. A nursing assistant’s compassion and work ethic won’t appear as searchable keywords on a resume. Yet traditional AI screening algorithms penalize candidates who don’t fit corporate-centric patterns, systematically filtering out people who would excel in frontline positions.

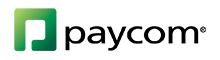

The Hidden Costs of Automation Bias in AI Resume Screening

The consequences of unchecked automation bias extend far beyond individual hiring mistakes. They compound into systemic problems that undermine organizational performance and competitive positioning.

Narrowed Talent Pools: When AI systems filter aggressively based on inappropriate criteria, you lose qualified candidates before ever engaging with them. In tight labor markets where frontline talent is already scarce, artificially narrowing your pool by 40-60% through biased screening is self-sabotage. You’re competing with one hand tied behind your back.

Perpetuated Inequity: AI algorithms trained on historical hiring data often encode and amplify existing biases. If your organization has historically hired from certain neighborhoods, schools, or demographic groups, the AI learns to favor candidates matching those patterns—even when those patterns don’t predict job performance. This perpetuates homogeneous workforces and excludes diverse talent who could bring fresh perspectives and skills.

Missed Non-Traditional Candidates: Career changers, workers returning after caregiving responsibilities, candidates without formal education but with relevant lived experience, and people from underrepresented communities often have non-linear resumes that confuse traditional screening algorithms. These candidates frequently become exceptional frontline workers precisely because of their diverse backgrounds and resilience, yet automation bias systematically excludes them.

Recruiter Skill Atrophy: Over-reliance on automated screening diminishes recruiters’ ability to evaluate candidates holistically. When the AI always makes the first cut, recruiters lose practice in recognizing potential, identifying transferable skills, and seeing beyond surface-level qualifications. This creates dangerous dependency—if the AI fails or makes poor recommendations, recruiters lack the skills to compensate.

Poor Quality of Hire: Perhaps most damaging, automation bias leads to hiring people who look good on paper but lack the practical skills, cultural fit, or personal attributes that drive success in frontline roles. You fill positions, but turnover remains high because the algorithm optimized for the wrong outcomes.

Legal and Compliance Risks: As regulatory scrutiny of AI hiring tools intensifies, organizations face increasing legal exposure when their screening systems produce discriminatory outcomes—even unintentionally. Automation bias compounds this risk because recruiters who blindly trust AI recommendations may not recognize when the system is making problematic decisions.

Why Frontline Roles Demand Different Approaches

Frontline hiring fundamentally differs from corporate recruiting in ways that expose the limitations of traditional AI screening:

Skills Over Credentials: Success in healthcare support roles, retail positions, and manufacturing jobs depends primarily on practical skills, work ethic, reliability, and interpersonal abilities—not educational pedigree or corporate brand names. Yet resume screening AI typically prioritizes credentials because they’re easy to parse and quantify.

High-Volume, Continuous Hiring: With annual turnover rates often running 50-60% or higher in frontline positions, healthcare, retail, and manufacturing organizations maintain perpetual hiring pipelines. This volume makes thorough human review of every application impractical, creating pressure to rely heavily on automation, which in turn amplifies the impact of automation bias.

Diverse Candidate Backgrounds: Frontline applicants often include career changers, recent immigrants, workers without college degrees, people re-entering the workforce, and candidates with non-traditional employment histories. Traditional AI screening tools weren’t designed to evaluate these profiles fairly, leading to systematic exclusion of viable candidates.

Local Labor Market Realities: Frontline hiring typically draws from local talent pools with specific characteristics, constraints, and opportunities. Generic AI models trained on national datasets miss these nuances, applying inappropriate filters that don’t reflect your actual candidate market.

Practical Assessment Needs: Determining whether someone will excel as a nursing assistant, retail associate, or production worker requires evaluating factors that rarely appear on resumes: customer service orientation, ability to follow procedures, physical stamina, schedule flexibility, and cultural alignment. Resume screening—whether human or AI-powered—provides limited signal on these critical dimensions.

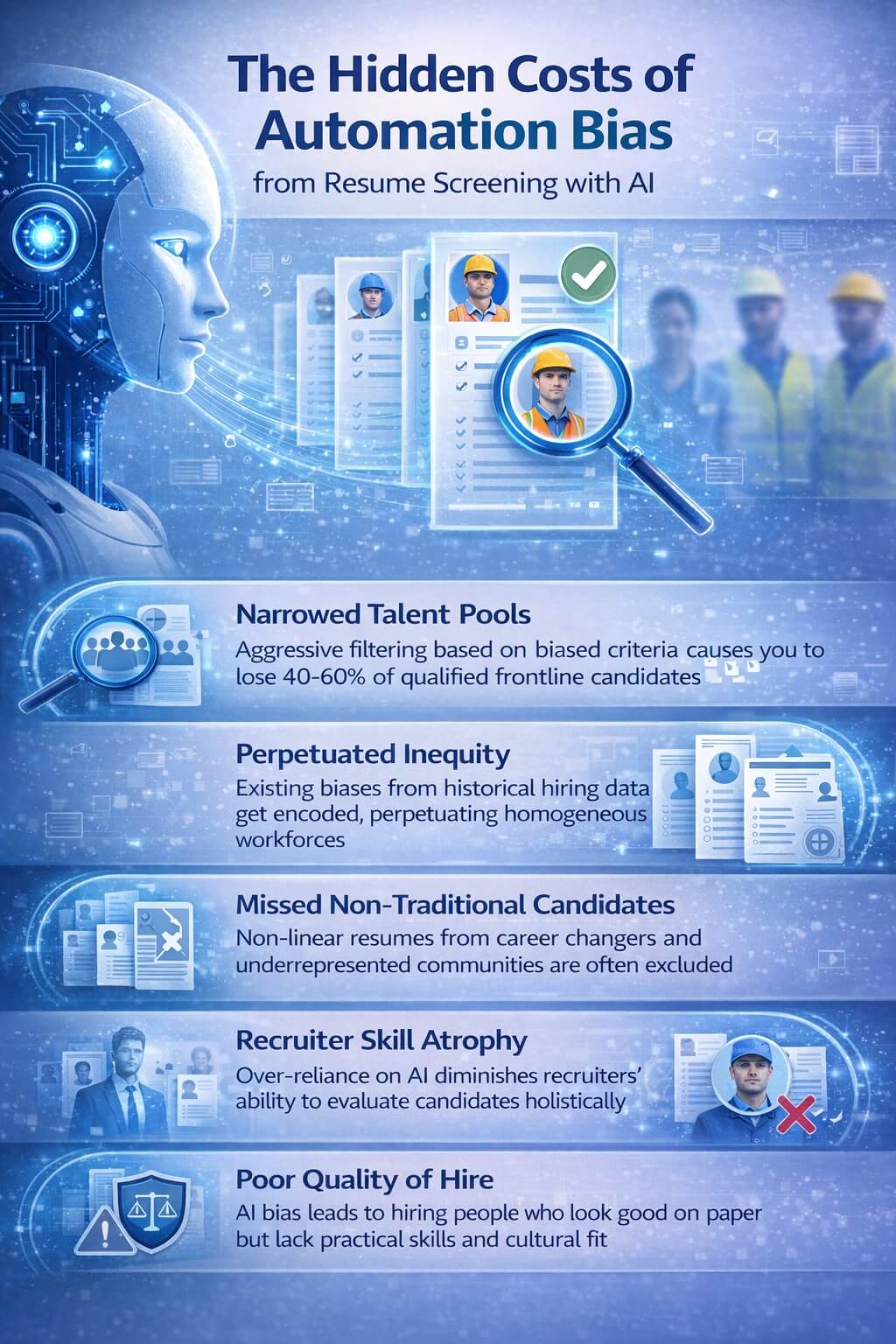

Strategies for Reducing Automation Bias

Recognizing automation bias is the first step. Actively mitigating it requires deliberate strategies and better technology choices.

Audit Your Screening Criteria: Regularly review what your AI system prioritizes. Are educational requirements truly necessary for the role? Do employment gaps actually predict poor performance in your context? Are you filtering for company name recognition when it’s irrelevant to job success? Challenge every criterion and eliminate those that don’t demonstrably correlate with performance.

Measure Disparate Impact: Analyze whether your AI screening produces different pass-through rates for different demographic groups. If certain populations are filtered out at significantly higher rates, investigate whether your criteria inadvertently encode bias. Many screening tools that seem neutral on the surface produce dramatically different outcomes across groups.

Maintain Human Oversight: Never fully automate screening decisions. Establish workflows where humans review AI recommendations, especially for edge cases and rejected candidates who meet most criteria. Train recruiters to question algorithmic decisions and trust their professional judgment.

Focus on Skills, Not Proxies: Rather than screening for credentials that supposedly indicate skills, assess skills directly. Use pre-employment assessments, practical simulations, or structured screening questions that evaluate actual job-relevant capabilities. This approach reduces reliance on resume parsing entirely.

Expand Your Definition of “Qualified”: Resist the temptation to set AI screening filters too narrowly. If you’re hiring retail associates, do you really need two years of retail experience, or would one year plus demonstrated customer service skills work? Overly restrictive filters, even if they reduce volume, eliminate strong candidates unnecessarily.

Choose Purpose-Built Solutions: Generic resume screening AI built for corporate hiring will inevitably struggle with frontline roles. Seek solutions specifically designed for high-volume hourly hiring that understand the unique characteristics and success factors of healthcare, retail, and manufacturing positions.
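The disparate impact measurement described above can be made concrete with a small calculation. The following is a minimal Python sketch, not part of any CloudApper product: it computes pass-through rates per group and compares each to the highest rate. The 0.8 threshold reflects the "four-fifths" rule of thumb from US EEOC guidance; the group labels, the sample data, and the function names are hypothetical, and a flagged ratio is a prompt to investigate rather than a legal conclusion.

```python
from collections import Counter


def pass_through_rates(outcomes):
    """Return the share of applicants in each group who passed screening.

    `outcomes` is an iterable of (group_label, passed) pairs.
    """
    totals, passed = Counter(), Counter()
    for group, did_pass in outcomes:
        totals[group] += 1
        if did_pass:
            passed[group] += 1
    return {group: passed[group] / totals[group] for group in totals}


def impact_ratios(rates):
    """Divide each group's rate by the highest group's rate."""
    best = max(rates.values())
    if best == 0:
        # Nobody passed screening, so the ratios are undefined.
        return {group: None for group in rates}
    return {group: rate / best for group, rate in rates.items()}


def flagged_groups(rates, threshold=0.8):
    """List groups whose impact ratio falls below the threshold (assumed 0.8)."""
    ratios = impact_ratios(rates)
    return sorted(g for g, r in ratios.items() if r is not None and r < threshold)


# Hypothetical screening log: 30 of 50 pass in group_a, 12 of 40 in group_b.
sample = (
    [("group_a", True)] * 30 + [("group_a", False)] * 20
    + [("group_b", True)] * 12 + [("group_b", False)] * 28
)
rates = pass_through_rates(sample)
print(rates)
print(flagged_groups(rates))
```

In this sample, group_b passes at half the rate of group_a, so it is flagged, and the next step is to review which criteria drove the difference.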

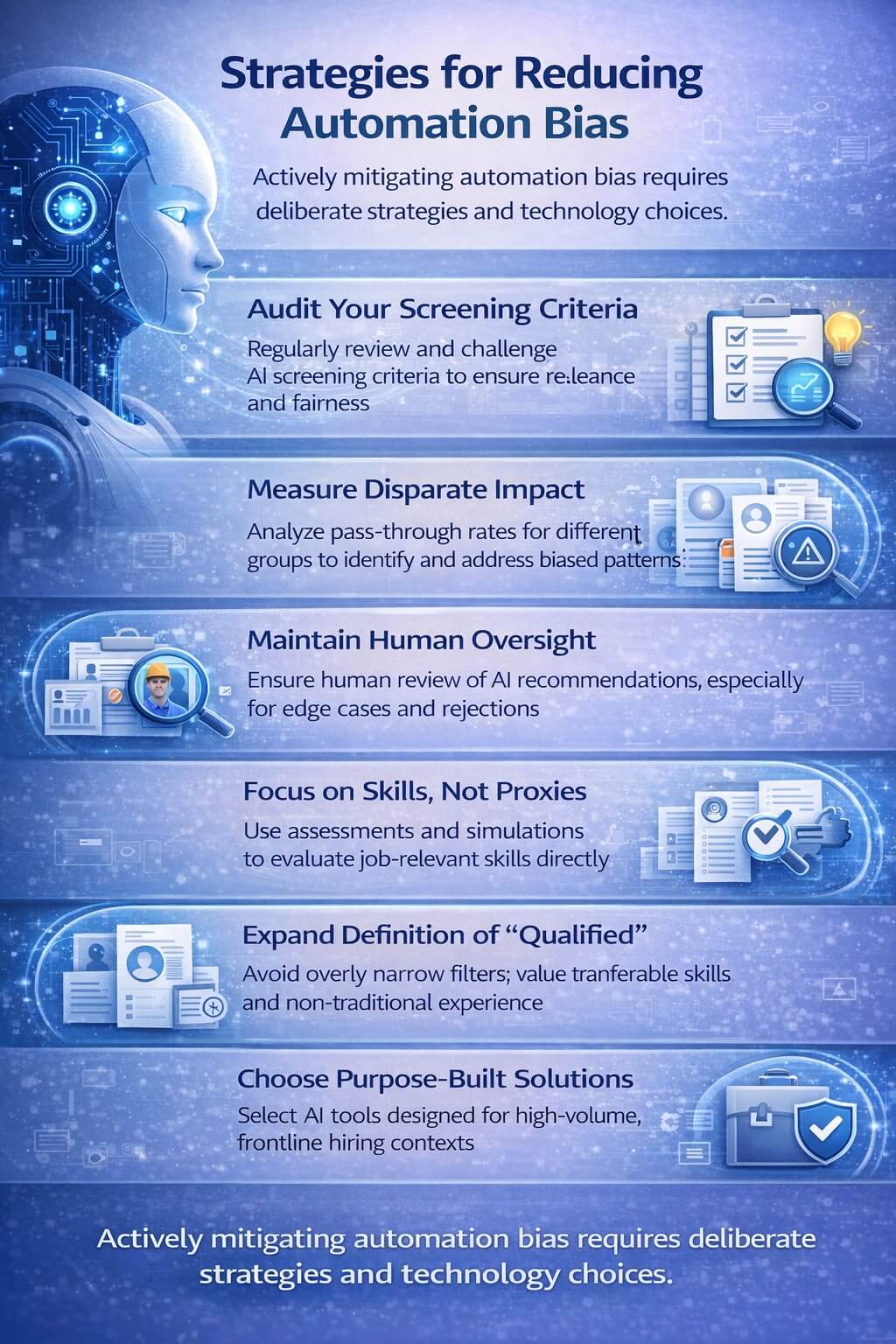

How CloudApper AI Recruiter Addresses Automation Bias

CloudApper AI Recruiter takes a fundamentally different approach to candidate evaluation that directly addresses the automation bias challenges plaguing traditional resume screening systems.

Rather than relying primarily on resume parsing and keyword matching, CloudApper uses conversational AI to engage candidates directly through text message or web chat interactions. This methodology shifts evaluation from static credentials to dynamic conversations that reveal practical qualifications, work preferences, and genuine fit.

When candidates interact with CloudApper AI Recruiter, they answer relevant screening questions through natural text exchanges. The system can assess availability, location, certifications, experience level, and other job-critical factors without the resume-centric bias that penalizes non-traditional candidates. A nursing assistant with 15 years of experience can demonstrate their qualifications through conversation, however polished or unpolished their resume is.

The platform allows organizations to design screening flows that prioritize what actually matters for frontline roles: relevant experience (not prestigious credentials), practical skills (not educational pedigree), schedule availability (not employment chronology), and genuine interest (not resume optimization). By evaluating candidates through job-relevant questions rather than credential proxies, CloudApper reduces the automation bias inherent in traditional screening.

Crucially, CloudApper maintains human judgment at the center of hiring decisions. The AI handles initial qualification assessment and administrative tasks, but recruiters review candidate responses, evaluate context, and make final determinations. This hybrid approach captures efficiency gains from automation while preserving the critical thinking that prevents bias from going unchecked.

The system also provides transparency into screening decisions. Recruiters can see exactly why candidates passed or failed screening, which responses triggered different outcomes, and how evaluation criteria are being applied across the candidate pool. This visibility enables continuous refinement of screening logic and helps identify when automation bias might be creeping into the process.
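One way to picture a screening flow that is both job-relevant and transparent is as a list of named criteria, each tied to a question and each leaving a record of how it was evaluated. The sketch below is purely illustrative: the `Criterion` and `screen` names, the answer fields, and the sample criteria are assumptions for this example and do not represent CloudApper’s actual configuration or API.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Criterion:
    name: str                       # short label shown to the recruiter
    question: str                   # what the candidate is asked
    check: Callable[[dict], bool]   # evaluates the candidate's answers
    required: bool = True           # optional criteria never fail a candidate


def screen(answers, criteria):
    """Evaluate answers and return (passed, trail).

    `trail` lists every criterion with its outcome so a recruiter can see
    which responses drove the result.
    """
    passed, trail = True, []
    for c in criteria:
        met = c.check(answers)
        trail.append((c.name, "met" if met else "not met"))
        if c.required and not met:
            passed = False
    return passed, trail


# Hypothetical criteria for a nursing assistant role.
CNA_CRITERIA = [
    Criterion("certification", "Do you hold an active CNA certification?",
              lambda a: a.get("cna_certified") is True),
    Criterion("availability", "Which shifts can you work?",
              lambda a: "night" in a.get("shifts", [])),
    Criterion("experience", "How many years have you worked in patient care?",
              lambda a: a.get("years_experience", 0) >= 1, required=False),
]

candidate = {"cna_certified": True, "shifts": ["night", "weekend"], "years_experience": 15}
passed, trail = screen(candidate, CNA_CRITERIA)
print(passed, trail)
```

Nothing in this flow looks at employer names, degrees, or employment gaps, and the trail gives the human reviewer the context needed to confirm or overturn the outcome.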

For organizations hiring across multiple locations, CloudApper ensures consistency in screening while allowing customization for local market realities. You can maintain standardized qualification assessment while adjusting specific criteria to reflect regional labor market conditions, preventing the one-size-fits-all approach that amplifies bias in traditional systems.

The Benefits of Bias-Aware AI Recruiting

When organizations actively address automation bias in their screening processes, the benefits extend across all aspects of talent acquisition:

Larger Qualified Talent Pools: By eliminating inappropriate filters and credential-based bias, you engage more candidates who can actually succeed in your roles. This expanded pool gives you more options, reduces time-to-fill, and improves your negotiating position.

Improved Quality of Hire: Focusing on job-relevant factors rather than resume proxies leads to better hiring decisions. You select candidates based on what predicts success in your specific environment, not what correlates with corporate success in general.

Enhanced Diversity: Reducing automation bias naturally increases diversity because you stop systematically filtering out candidates from underrepresented backgrounds who may have non-traditional profiles. This diversity strengthens teams and brings varied perspectives that improve problem-solving.

Faster Hiring: Eliminating unnecessarily restrictive screening criteria means more candidates advance quickly through your pipeline. You spend less time sourcing because qualification rates improve, and you fill positions faster because your effective candidate pool expands.

Better Recruiter Development: When humans remain actively involved in evaluation rather than deferring entirely to algorithms, recruiters continuously develop their judgment and assessment skills. This creates more capable teams that can adapt when technology changes or fails.

Reduced Legal Risk: Thoughtful management of AI screening reduces discriminatory outcomes and demonstrates commitment to fair hiring practices. As regulations around AI employment tools tighten, proactive bias mitigation can lower your legal exposure.

Stronger Employer Brand: Candidates notice when screening processes feel fair, inclusive, and focused on genuine qualifications rather than arbitrary credentials. This positive experience enhances your reputation in local labor markets where word-of-mouth significantly impacts application volume.

Frequently Asked Questions

Q: Isn’t some automation bias inevitable when using AI for screening? Why not just use human-only review?

A: While completely eliminating bias (human or automated) is impossible, the goal is managing it to acceptable levels. Pure human review at high volumes creates different problems: inconsistent evaluation, recruiter burnout, slow response times, and human biases that can be equally problematic. The solution isn’t abandoning AI but choosing systems designed to minimize bias and maintaining appropriate human oversight. Hybrid approaches that combine AI efficiency with human judgment offer the best outcomes.

Q: How do we know if our current AI screening system has automation bias problems?

A: Start by analyzing your data. Look at pass-through rates across different demographic groups—significant disparities suggest bias. Review samples of rejected candidates to see if qualified people are being filtered out. Interview hiring managers about whether screened candidates truly fit job requirements. Survey your recruiting team about how much they trust versus question AI recommendations. If recruiters rarely override the system or can’t explain why candidates were rejected, automation bias is likely present.
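Two of the checks above, how often recruiters override the AI and which rejected candidates get a second look, are easy to compute from a decision log. The following Python sketch assumes a hypothetical log format (dictionaries with `ai` and `recruiter` fields); the field names and sample size are illustrative only, and what counts as a worryingly low override rate is a judgment call for each team.

```python
import random


def override_rate(decisions):
    """Share of human-reviewed decisions where the recruiter disagreed with the AI.

    Returns None when no decision was reviewed by a human.
    """
    reviewed = [d for d in decisions if d.get("recruiter") is not None]
    if not reviewed:
        return None
    overrides = sum(1 for d in reviewed if d["recruiter"] != d["ai"])
    return overrides / len(reviewed)


def review_coverage(decisions):
    """Share of AI decisions that any human looked at."""
    if not decisions:
        return None
    reviewed = sum(1 for d in decisions if d.get("recruiter") is not None)
    return reviewed / len(decisions)


def sample_rejections(decisions, k, seed=0):
    """Pick up to k AI-rejected candidates at random for manual audit."""
    rejected = [d for d in decisions if d["ai"] == "reject"]
    return random.Random(seed).sample(rejected, min(k, len(rejected)))


# Hypothetical decision log.
log = [
    {"id": 1, "ai": "reject", "recruiter": "advance"},
    {"id": 2, "ai": "reject", "recruiter": "reject"},
    {"id": 3, "ai": "advance", "recruiter": "advance"},
    {"id": 4, "ai": "reject", "recruiter": None},
]
print(override_rate(log), review_coverage(log))
print([d["id"] for d in sample_rejections(log, k=2)])
```

An override rate near zero, or low review coverage, does not prove automation bias on its own, but either is a reasonable trigger for a closer manual audit of the sampled rejections.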

Q: Won’t reducing screening strictness just increase our workload by advancing too many candidates?

A: This is a common concern, but the reality is nuanced. Yes, overly permissive screening increases volume. However, overly restrictive screening causes you to miss strong candidates and extends time-to-fill, which has its own costs. The solution is finding the right balance: screen for truly predictive factors while eliminating credential-based proxies that don’t correlate with performance. Well-designed systems advance more qualified candidates without flooding recruiters with unqualified applicants.

Q: Our AI vendor says their algorithm is “unbiased.” Should we trust that claim?

A: Be skeptical of claims about “unbiased” AI. All algorithms encode the assumptions and priorities of their creators, and all systems trained on historical data risk perpetuating historical patterns. Instead of accepting vendor assurances, demand transparency: How was the algorithm trained? What factors does it prioritize? Has it been tested for disparate impact? Can you audit its decisions? Responsible vendors welcome these questions; evasive responses are red flags.

Q: How do we balance efficiency needs with bias concerns when processing hundreds of applications weekly?

A: This is the core challenge of high-volume frontline hiring. The answer lies in strategic automation: use AI for administrative tasks (scheduling, communication, qualification verification) while maintaining human judgment on evaluation and decision-making. Conversational AI that assesses candidates through relevant questions rather than credential parsing can achieve both efficiency and fairness. The key is choosing technology designed for your specific use case rather than adapting corporate-focused tools.

Q: What should we do if we discover our current system has been rejecting qualified candidates due to automation bias?

A: First, don’t panic—this is fixable. Start by auditing and adjusting your screening criteria to focus on job-relevant factors. Review recently rejected candidates to identify strong applicants who can be re-engaged. Train your recruiting team on recognizing and questioning automated recommendations. Consider whether your current technology is appropriate for frontline hiring or if you need a purpose-built solution. Document your corrective actions for compliance purposes. Most importantly, establish ongoing monitoring to catch bias issues before they compound.

Moving Beyond Resume-Centric Screening

The resume was never designed to predict success in frontline roles. It emerged as a tool for corporate hiring where credentials, career trajectory, and institutional affiliations serve as useful proxies for capability. But frontline work operates by different rules, rewards different attributes, and draws from different talent pools.

Automation bias in resume screening persists because we’ve tried to force AI built for one hiring paradigm into a completely different context. The solution isn’t abandoning recruitment automation—the volume demands are too great—but rather adopting approaches purpose-built for frontline hiring realities.

For talent acquisition professionals and HR leaders in healthcare, retail, and manufacturing, the imperative is clear: scrutinize your screening processes for automation bias, maintain human judgment at the center of hiring decisions, and choose technologies that evaluate candidates based on what truly matters for your roles.

The organizations that master this balance will build stronger frontline teams, reduce turnover, improve diversity, and gain a competitive advantage in increasingly tight labor markets. Those that blindly trust biased algorithms will continue losing strong candidates to competitors who see potential where automation sees only imperfect resumes.

To learn more about how CloudApper AI Recruiter’s conversational approach reduces automation bias while maintaining screening efficiency, visit https://www.cloudapper.ai/ai-recruiter-conversational-chatbot/

What is CloudApper AI Platform?

CloudApper AI is an advanced platform that enables organizations to integrate AI into their existing enterprise systems effortlessly, without the need for technical expertise, costly development, or upgrading the underlying infrastructure. By transforming legacy systems into AI-capable solutions, CloudApper allows companies to harness the power of Generative AI quickly and efficiently. This approach has been successfully implemented with leading systems like UKG, Workday, Oracle, Paradox, and Amazon AWS Bedrock, and it can be applied across various industries, helping businesses enhance productivity, automate processes, and gain deeper insights without the usual complexities. With CloudApper AI, you can start experiencing the transformative benefits of AI today.

CloudApper AI Solutions for HR

- Works with

- and more.

Similar Posts

How cNPS Reflects Your Recruitment and Employer Brand

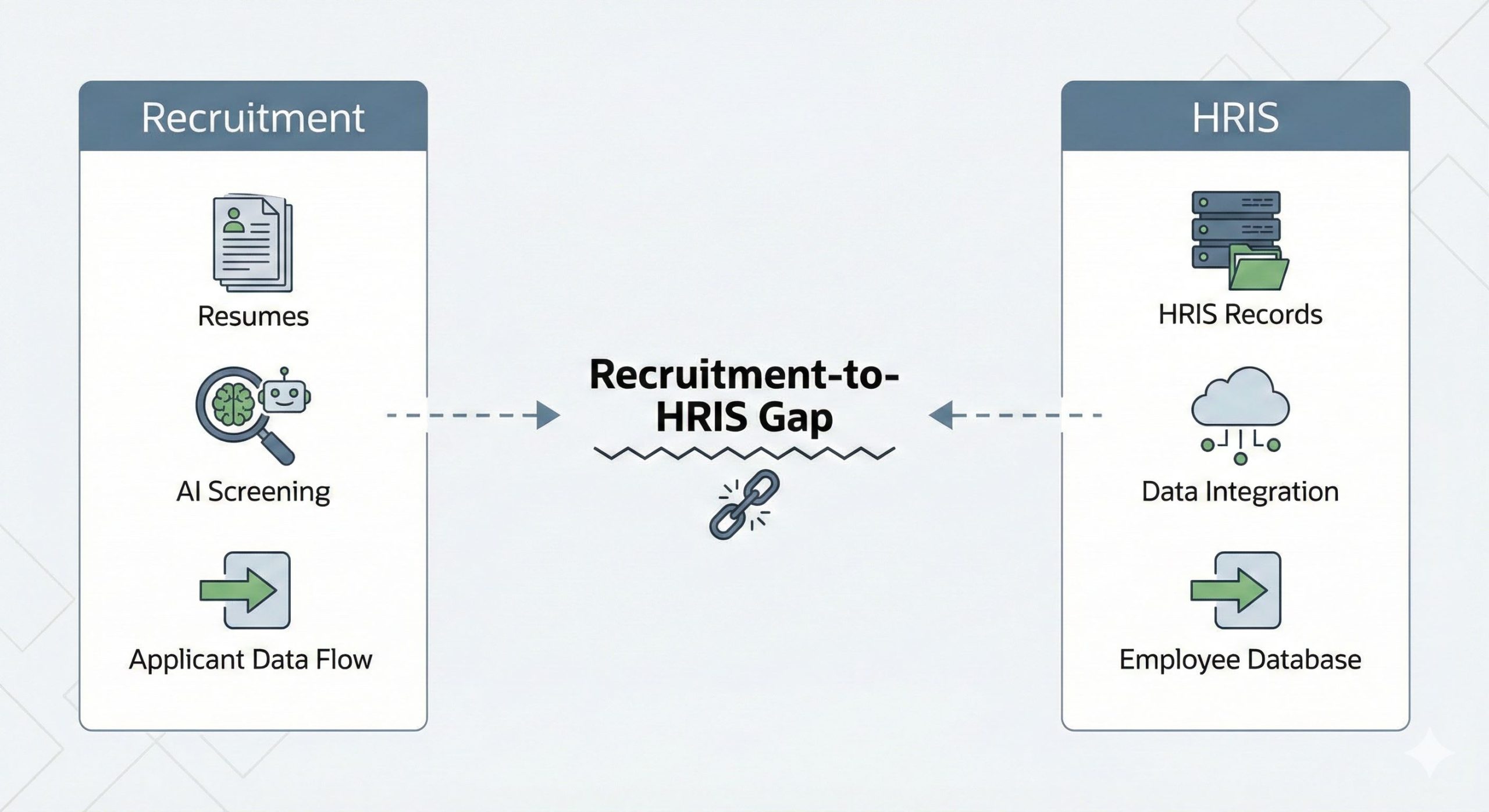

The Recruitment-to-HRIS Gap: Why Your “System of Record” Should Start…