AI recruitment audits are no longer optional as hiring decisions become increasingly automated. This guide explains how a HITL framework creates clear intervention points—data validation, bias overrides, and final decision gates—so organizations can scale AI hiring responsibly without losing human accountability.

AI is now deeply embedded in modern recruitment. From resume screening to candidate ranking, algorithms influence who moves forward and who does not. As adoption accelerates, so does scrutiny. Regulators, legal teams, and HR leaders are asking a more mature question: not whether AI is used in hiring, but how it is governed. This is where the HITL framework for AI recruitment audits becomes essential.

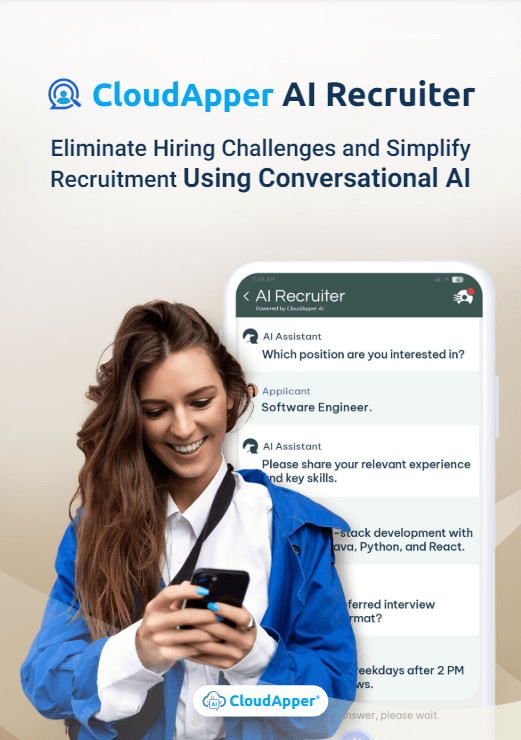

Recruitment decisions are high-impact by nature. They affect livelihoods, workplace diversity, and organizational performance. Pure automation introduces speed, but without oversight it also introduces risk. Forward-looking organizations are therefore shifting toward structured Human-in-the-Loop (HITL) models that embed accountability, transparency, and control directly into AI-driven hiring workflows.

Solutions like CloudApper AI Recruiter reflect this evolution. Instead of treating AI as a black box, they are designed to support auditable, human-governed decision-making at scale—especially in high-volume and compliance-sensitive hiring environments.

Why AI Recruitment Audits Are No Longer Optional

AI recruitment tools operate on data, logic, and learned patterns. If any of those inputs are flawed, biased, or outdated, the outputs can quietly propagate errors at scale. This creates multiple risks:

- Discriminatory outcomes that are difficult to detect after the fact

- Inability to explain or defend hiring decisions

- Exposure to regulatory scrutiny in jurisdictions adopting AI governance rules

- Loss of trust among candidates and internal stakeholders

Traditional audits focus on systems and controls. AI audits must go further. They must evaluate decision influence, human oversight, and intervention authority. The HITL framework for AI recruitment audits provides a structured way to do exactly that.

What Human-in-the-Loop Really Means in Recruitment

Human-in-the-Loop is often misunderstood as “humans approve AI decisions.” In reality, effective HITL design is far more granular and intentional. It defines where, when, and how humans intervene throughout the AI lifecycle.

In recruitment, HITL is not about slowing down hiring. It is about ensuring AI accelerates decisions without removing responsibility from people.

A strong HITL model answers three questions:

- Where can AI operate autonomously with low risk?

- Where must humans validate or contextualize AI outputs?

- Where should final authority always remain human?

The HITL framework for AI recruitment audits translates these questions into enforceable checkpoints.

Core Components of the HITL Framework for AI Recruitment Audits

1. Data Validation Intervention Point

Every AI hiring decision begins with data: resumes, job descriptions, historical hiring outcomes, and evaluation criteria.

Human oversight is critical at this stage to ensure:

- Input data reflects current role requirements

- Historical data does not encode past bias

- Input features (for example, postal code or graduation year) do not act as proxies for protected characteristics

Recruitment audits should document:

- Who approves training and screening data sources

- How often datasets are reviewed

- What criteria trigger retraining or exclusion

This is the first formal checkpoint in the HITL framework for AI recruitment audits, and it directly impacts downstream fairness and accuracy.
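
One way a reviewer might screen for proxy variables at this checkpoint is to compare how often a candidate feature appears in each demographic group. The sketch below is illustrative only: the field names (`group`, `zip_in_target_area`), the sample records, and the `max_gap` threshold of 0.2 are all assumptions, not values from any particular tool or regulation. A large gap does not prove a feature is a proxy; it only marks it for human review.

```python
from collections import defaultdict


def feature_rate_by_group(records, feature, group_key):
    """Return the share of records in each group where a binary feature is true."""
    totals = defaultdict(int)
    hits = defaultdict(int)
    for rec in records:
        group = rec[group_key]
        totals[group] += 1
        if rec[feature]:
            hits[group] += 1
    return {g: hits[g] / totals[g] for g in totals}


def flag_possible_proxy(records, feature, group_key, max_gap=0.2):
    """Flag a feature for human review when its prevalence differs widely by group.

    max_gap is a hypothetical review threshold chosen for illustration.
    """
    rates = feature_rate_by_group(records, feature, group_key)
    gap = max(rates.values()) - min(rates.values())
    return gap > max_gap, rates


# Hypothetical sample data: group labels and the feature name are made up.
records = [
    {"group": "A", "zip_in_target_area": True},
    {"group": "A", "zip_in_target_area": True},
    {"group": "A", "zip_in_target_area": False},
    {"group": "A", "zip_in_target_area": True},
    {"group": "B", "zip_in_target_area": False},
    {"group": "B", "zip_in_target_area": False},
    {"group": "B", "zip_in_target_area": True},
    {"group": "B", "zip_in_target_area": False},
]

needs_review, rates = flag_possible_proxy(records, "zip_in_target_area", "group")
print(needs_review, rates)
```

A check like this fits the "what criteria trigger retraining or exclusion" item: the threshold and the reviewer who signs off on it are exactly what the audit should record.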

2. Model Output Review and Explainability Check

AI screening and ranking systems generate scores, match percentages, or recommendations. Without explainability, these outputs cannot be examined or defended in an audit.

Human reviewers must be able to:

- Understand why candidates were ranked in a certain order

- Identify dominant factors influencing decisions

- Detect anomalies or inconsistent outcomes

This intervention point ensures AI is interpretable, not just performant. Recruiters and auditors should be able to trace outcomes back to logic, not intuition.
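
To make "dominant factors" concrete, here is a minimal sketch that assumes the ranking score is a simple weighted sum of candidate features. That is a strong assumption: many screening models are not linear, and the weights and feature names below are invented for illustration. Under that assumption, each factor's contribution is its value times its weight, and sorting the contributions shows a reviewer what drove the score.

```python
def explain_score(features, weights):
    """Break a weighted-sum score into per-factor contributions.

    Returns the total score and the factors sorted by absolute contribution,
    largest first. Features missing from the candidate count as 0.
    """
    contributions = {
        name: features.get(name, 0.0) * weight for name, weight in weights.items()
    }
    total = sum(contributions.values())
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return total, ranked


# Hypothetical weights and candidate values, for illustration only.
weights = {"years_experience": 0.5, "skills_match": 2.0, "certifications": 1.0}
candidate = {"years_experience": 4, "skills_match": 0.8, "certifications": 1}

score, ranked = explain_score(candidate, weights)
for name, contribution in ranked:
    print(f"{name}: {contribution:.2f}")
print(f"total: {score:.2f}")
```

For models that are not additive, a dedicated attribution method would be needed instead, but the audit question stays the same: can a reviewer see which inputs moved the ranking?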

3. Bias Detection and Override Authority

Bias mitigation cannot rely on automation alone. Even well-designed models can produce skewed outcomes under certain conditions.

A robust HITL structure defines:

- Thresholds that trigger bias review

- Who is authorized to pause, override, or recalibrate AI outputs

- How overrides are documented and justified

This ensures humans retain real authority—not symbolic approval—over AI behavior. Within the HITL framework for AI recruitment audits, this is one of the most critical safeguards.
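
A threshold that triggers bias review can be as simple as comparing selection rates across groups. The sketch below uses an impact ratio (each group's selection rate divided by the highest group's rate) and a default threshold of 0.8, which mirrors the "four-fifths" rule of thumb from the US EEOC Uniform Guidelines. Treat it as a screening heuristic under stated assumptions, not a legal determination: the group labels and counts are hypothetical, and an organization may choose different metrics or thresholds.

```python
def selection_rates(outcomes):
    """outcomes maps group -> (selected, applicants); groups with no applicants are skipped."""
    return {g: sel / total for g, (sel, total) in outcomes.items() if total > 0}


def impact_ratios(outcomes):
    """Each group's selection rate relative to the highest-rate group."""
    rates = selection_rates(outcomes)
    best = max(rates.values())
    if best == 0:
        # Nobody was selected in any group, so there is no disparity to measure.
        return {g: 1.0 for g in rates}
    return {g: rate / best for g, rate in rates.items()}


def groups_needing_review(outcomes, threshold=0.8):
    """Return groups whose impact ratio falls below the review threshold."""
    return sorted(g for g, ratio in impact_ratios(outcomes).items() if ratio < threshold)


# Hypothetical shortlist counts: (selected, applicants) per group.
outcomes = {"group_a": (48, 120), "group_b": (21, 90)}

flagged = groups_needing_review(outcomes)
print(impact_ratios(outcomes))
print(flagged)
```

When a group is flagged, the check should route the batch to the person authorized to pause or recalibrate, rather than adjusting outputs automatically.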

4. Human Review at Shortlisting and Ranking Stages

Shortlisting is where AI influence becomes most visible. This is also where accountability must be clearest.

Human reviewers should:

- Validate that AI-ranked candidates align with role context

- Adjust rankings when qualitative factors apply

- Confirm that exclusion decisions are defensible

AI can prioritize efficiently, but humans must retain discretion. Audit trails should show when human judgment altered or confirmed AI recommendations.
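
As a sketch of what such an audit trail entry could look like, the example below records whether a reviewer confirmed or adjusted the AI ranking and refuses an adjustment that has no written justification. The record layout, field names, and identifiers are assumptions made for illustration; they do not describe any specific product's log format.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class ReviewEvent:
    candidate_id: str
    reviewer_id: str
    ai_rank: int
    final_rank: int
    justification: str = ""
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    @property
    def action(self):
        """'confirmed' if the human kept the AI rank, otherwise 'adjusted'."""
        return "confirmed" if self.ai_rank == self.final_rank else "adjusted"


def record_review(log, event):
    """Append a review event to the log as a JSON line and return the entry."""
    if event.action == "adjusted" and not event.justification.strip():
        raise ValueError("An adjusted ranking requires a written justification")
    entry = asdict(event)
    entry["action"] = event.action
    log.append(json.dumps(entry, sort_keys=True))
    return entry


# Hypothetical identifiers and ranks.
audit_log = []
entry = record_review(
    audit_log,
    ReviewEvent("c-101", "r-7", ai_rank=3, final_rank=1,
                justification="Relevant contract work missing from parsed resume"),
)
print(entry["action"], len(audit_log))
```

Logging confirmations as well as adjustments matters: it lets an auditor distinguish active review from nominal sign-off.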

5. Final Decision Gate (Mandatory Human Ownership)

The final hiring decision must always belong to a human. No exceptions.

This gate ensures:

- Legal and ethical accountability is clearly assigned

- Decisions reflect organizational values and situational judgment

- AI remains advisory, not authoritative

In the HITL framework for AI recruitment audits, this final gate is non-negotiable. It defines the boundary between automation and responsibility.
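
In a workflow system, this gate can be enforced in code rather than left to policy. The sketch below is a minimal illustration under assumed names (`HiringDecision`, `finalize`, and the outcome labels are all hypothetical): the AI recommendation is stored as advice, and no outcome can be recorded without a named human approver.

```python
from dataclasses import dataclass
from typing import Optional

ALLOWED_OUTCOMES = {"hire", "reject", "hold"}


class HumanApprovalRequired(Exception):
    """Raised when a final decision is attempted without a named human approver."""


@dataclass
class HiringDecision:
    candidate_id: str
    ai_recommendation: str          # advisory only; never copied to outcome automatically
    approved_by: Optional[str] = None
    outcome: Optional[str] = None


def finalize(decision, approver_id, outcome):
    """Record the final outcome, which may differ from the AI recommendation."""
    if not approver_id:
        raise HumanApprovalRequired("A named human approver must own the final decision")
    if outcome not in ALLOWED_OUTCOMES:
        raise ValueError(f"Unknown outcome: {outcome!r}")
    decision.approved_by = approver_id
    decision.outcome = outcome
    return decision


# Hypothetical example: the hiring manager overrules the AI's "hire" suggestion.
decision = finalize(HiringDecision("c-101", ai_recommendation="hire"), "hm-42", "hold")
print(decision)
```

Keeping `ai_recommendation` and `outcome` as separate fields also gives auditors a direct way to count how often humans depart from the AI's advice.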

Common Audit Failures in AI Recruitment

Organizations that struggle with AI audits often share similar gaps:

- AI tools lack explainability

- Humans are “in the loop” only nominally

- Override mechanisms exist but are rarely used or documented

- Decision logs are fragmented or incomplete

These failures are not technology problems. They are governance design problems.

How CloudApper AI Recruiter Supports HITL-Based Audits

CloudApper AI Recruiter is built with Human-in-the-Loop governance as a core design principle—not an afterthought.

The solution supports the HITL framework for AI recruitment audits in several concrete ways:

Transparent Screening and Ranking Logic

Recruiters can see how candidates are evaluated and which factors influence outcomes, supporting explainability and review.

Built-In Human Override Controls

AI recommendations can be adjusted, overridden, or paused with clear documentation, ensuring real human authority.

Audit-Ready Decision Trails

Every AI-assisted action and human intervention is logged, creating defensible records for internal and external audits.

Scalable Governance for High-Volume Hiring

Even in large applicant pools, the solution preserves structured human checkpoints without slowing operations.

Compliance-Friendly Design

By keeping humans accountable at critical stages, CloudApper AI Recruiter helps organizations align with emerging AI governance expectations without rebuilding workflows.

Rather than forcing recruiters to choose between speed and control, the solution enables both.

Implementing the HITL Framework in Practice

To operationalize the HITL framework for AI recruitment audits, organizations should:

- Map AI influence across the recruitment lifecycle

- Define mandatory human intervention points

- Assign clear ownership for overrides and approvals

- Require explainability at every decision layer

- Regularly test and document audit readiness

Technology should support this structure—not obscure it.

Final Perspective

AI will continue to reshape recruitment, but governance will define whether that transformation builds trust or creates risk. The HITL framework for AI recruitment audits offers a practical, defensible way to balance innovation with accountability.

Human-in-the-Loop is not about resisting automation. It is about designing AI systems that respect the gravity of hiring decisions.

With solutions like CloudApper AI Recruiter, organizations can move beyond opaque algorithms and adopt AI recruitment strategies that are transparent, auditable, and responsibly human-led.

What is CloudApper AI Platform?

CloudApper AI is a platform that enables organizations to integrate AI into their existing enterprise systems without technical expertise, costly development, or upgrades to the underlying infrastructure. By transforming legacy systems into AI-capable solutions, CloudApper allows companies to adopt Generative AI quickly and efficiently. This approach has been implemented with leading systems such as UKG, Workday, Oracle, Paradox, and Amazon Bedrock, and can be applied across various industries, helping businesses enhance productivity, automate processes, and gain deeper insights without the usual complexities.
