Candidate fraud prevention is essential, but strict verification steps can unintentionally harm diversity and inclusion. Learn how to balance security with fair, inclusive hiring.


Candidate fraud has surged dramatically in 2026, reshaping how organizations recruit and evaluate talent. According to SHRM, 68% of employers reported a rise in misrepresentation, identity manipulation, AI-generated interview responses, or fabricated credentials over the past year. Gartner adds that by 2026, nearly 30% of candidates will rely on generative AI tools during interviews, either to craft responses or to support live interactions.

To combat this trend, employers have introduced stricter identity verification and fraud-mitigation steps. These range from requiring candidates to perform real-time movement checks on camera, to conducting on-site interviews, to enforcing ID verification processes once reserved for high-security roles.

While these measures may be effective at preventing fraud, they raise an important question that recruiters, HR leaders, and DEI professionals can no longer ignore:

What happens to diversity and inclusion when fraud prevention becomes too rigid?

This article explores the often overlooked implications of fraud prevention for DEI, the risk of unintentionally excluding marginalized groups, and how companies can strike a fair and ethical balance. Along the way, we’ll also look at how more inclusive assessment methods, such as the contextual reasoning approach used in CloudApper AI Recruiter, can help reduce bias without compromising security.

The State of Candidate Fraud in 2026

Fraud in the hiring process has evolved from simple résumé inflation to sophisticated AI-driven deception. Recruiters today face issues such as:

- Candidates using real-time AI-generated answers in video interviews
- Proxy interviewers impersonating applicants
- Deepfake video responses
- Fabricated credentials supported by AI-generated documents
- Perfectly worded résumés generated by language models

Fraud prevention isn’t optional — it’s essential. But the methods companies adopt can either support inclusion or undermine it.

How ID Verification Impacts Diversity and Inclusion in Hiring

Identity verification is one of the most common fraud-prevention approaches — and one of the most problematic for DEI.

1. Economically disadvantaged candidates face ID inequality

Many candidates cannot easily access:

- Updated photo IDs
- Passports
- Driver’s licenses
- Consistent addresses
- Reliable technology for scanning or uploading documents

This disproportionately affects low-income individuals, refugees, immigrants, and people experiencing housing instability — groups already underrepresented in hiring pipelines.

2. ID systems disadvantage women, transgender candidates, and LGBTQ+ communities

Common identity challenges include:

- Women whose surname changed after marriage or divorce
- Transgender candidates whose legal documents don’t match their lived gender
- Nonbinary individuals navigating inconsistent ID standards across states
- LGBTQ+ candidates facing bureaucratic or familial barriers to obtaining updated documents

Rigid fraud-prevention systems flag inconsistencies that reflect life realities — not dishonesty.

3. On-site requirements exclude disabled candidates

Mandatory on-site identity verification can disadvantage:

- People with mobility disabilities
- Candidates who rely on adaptive transport
- Individuals needing accessibility accommodations
- Those with chronic illness who cannot attend in-person screenings

Fraud prevention becomes an accessibility issue when systems assume physical attendance is universally possible.

4. Caregivers and parents face scheduling limitations

Requiring in-person or same-day verification creates barriers for:

- Primary caregivers (disproportionately women)
- Single parents
- Individuals who rely on public transportation
- Candidates working hourly jobs who cannot take sudden time off

Fraud prevention may unintentionally punish those balancing caregiving and career.

The Ethical Dilemma of AI Candidate Fraud Detection

Beyond ID verification, AI-driven fraud detection tools introduce new DEI concerns. Many of these tools analyze:

- Facial movements
- Vocal patterns
- Eye tracking
- Background environments
- Speech cadence
- Behavioral anomalies

These systems can struggle with cultural differences, neurodiversity, disability, and non-standard communication styles.

A more ethical approach is emerging: AI platforms that avoid biometric analysis altogether and aim to remove identifiable sources of bias. CloudApper AI Recruiter, for example, uses contextual and scenario-based reasoning rather than facial recognition or vocal analytics.

This shift matters. Traditional AI fraud detection can misinterpret:

- Accents of candidates from around the world
- Speech patterns of non-native English speakers
- Facial features underrepresented in training datasets
- Neurodivergent communication styles
- Cultural expressions that differ from Western norms

By evaluating how candidates think, rather than how they look, how they sound, or where they come from, contextual assessment methods can reduce DEI harm while still identifying fraud.

What Employers Can Do Now to Balance Fraud Prevention and Fair Hiring in 2026

Preventing fraud is important — but not at the cost of fairness. Here’s how organizations can maintain both integrity and inclusion.

1. Offer flexible verification options

Instead of rigid ID demands, provide alternatives:

- Accept multiple forms of ID
- Offer virtual verification using secure portals
- Allow provisional verification pending onboarding
- Provide community-center or government-office verification options

Flexibility is the cornerstone of inclusive fraud prevention.

2. Evaluate tools for algorithmic bias

Before investing in any fraud detection software, ask:

- Does it rely on biometrics?
- Was it tested on diverse demographic groups?
- Does it allow human oversight?
- Does it evaluate ability rather than identity?

Systems like CloudApper AI Recruiter — which analyze reasoning and consistency rather than facial features — show how fraud detection can be more inclusive by design.

3. Don’t treat AI flags as absolute truth

AI signals should prompt human review, not automatic disqualification.

Bias is often subtle, and without human oversight, false positives can disproportionately exclude marginalized candidates.

4. Provide alternative interview and screening formats

If fraud-prevention measures require certain formats, ensure accommodations exist:

- Remote alternatives for those who cannot travel
- Verification during onboarding instead of pre-screening
- Extended deadlines for those needing additional documentation

A one-size-fits-all verification process inevitably favors only one type of candidate.

5. Train hiring teams on inclusive fraud mitigation

Recruiters and hiring managers should learn:

- How verification impacts marginalized groups
- Signs of algorithmic bias
- Cultural communication differences
- Neurodiversity awareness
- How to use fraud detection ethically

Education is as important as technology.

Why Identity Verification Must Not Become Identity Profiling

Fraud prevention becomes harmful when it drifts into profiling — based on:

- Accents
- Neighborhoods
- Financial background
- Outdated IDs
- Transitional identity status
- Name changes
- Personal history

Instead of assuming a candidate is suspicious based on ID irregularities, organizations should shift to evaluation models that focus on capability. Tools that measure reasoning, problem solving, and real-world experience, such as the contextual assessment approach in CloudApper AI Recruiter, help prevent fraud without reinforcing systemic inequality.

The most inclusive fraud prevention is ability-based — not identity-based.

Conclusion

Candidate fraud prevention is now an unavoidable part of modern recruitment. But the rise of stricter identity checks, biometric tools, and AI-based fraud detection brings new risks for diversity and inclusion.

The challenge for 2026 is not choosing between security and equity — it is designing systems where both can coexist.

Fraud prevention must be flexible, accessible, and ethically implemented. It should measure authentic ability, not penalize identity-based differences. Emerging contextual assessment tools — such as those used in platforms like CloudApper AI Recruiter — demonstrate that technology can support fairness while still protecting employers.

Organizations that approach fraud prevention with care and inclusivity will build hiring systems that are secure, equitable, and human-centered — the future of ethical recruitment.

Frequently Asked Questions (FAQ)

Can candidate fraud prevention create bias in hiring?

Yes. Fraud-prevention tools that rely on facial recognition, voice analysis, or strict ID matching may misinterpret cultural, linguistic, or neurodivergent differences as suspicious, creating unintentional bias in hiring decisions.

How can companies maintain diversity while preventing candidate fraud?

Organizations can maintain diversity by offering flexible ID verification options, avoiding biometric-only systems, using inclusive AI tools, and ensuring human review for flagged cases.

Do strict ID verification requirements disadvantage certain groups?

Yes. Candidates experiencing financial hardship, those with outdated IDs, individuals who changed their names, and transgender or nonbinary applicants may face additional barriers under rigid verification systems.

What ethical issues arise when using AI to detect candidate fraud?

AI tools may misread accents, facial features, behavior, or communication styles. Without transparency and regular bias audits, fraud detection can unintentionally exclude marginalized candidates or reinforce systemic inequalities.

How does CloudApper AI Recruiter approach fraud prevention more inclusively?

CloudApper AI Recruiter uses contextual, scenario-based assessments instead of biometric or appearance-based analytics. This allows employers to verify authenticity by evaluating how candidates think and solve problems, reducing DEI risks while still addressing fraud concerns.

What is the CloudApper AI Platform?

CloudApper AI is an advanced platform that enables organizations to integrate AI into their existing enterprise systems effortlessly, without the need for technical expertise, costly development, or upgrades to the underlying infrastructure. By transforming legacy systems into AI-capable solutions, CloudApper allows companies to harness the power of generative AI quickly and efficiently. This approach has been successfully implemented with leading systems such as UKG, Workday, Oracle, Paradox, and Amazon AWS Bedrock, and it can be applied across various industries to help businesses enhance productivity, automate processes, and gain deeper insights without the usual complexities. With CloudApper AI, you can start experiencing the transformative benefits of AI today.


Similar Posts

How cNPS Reflects Your Recruitment and Employer Brand

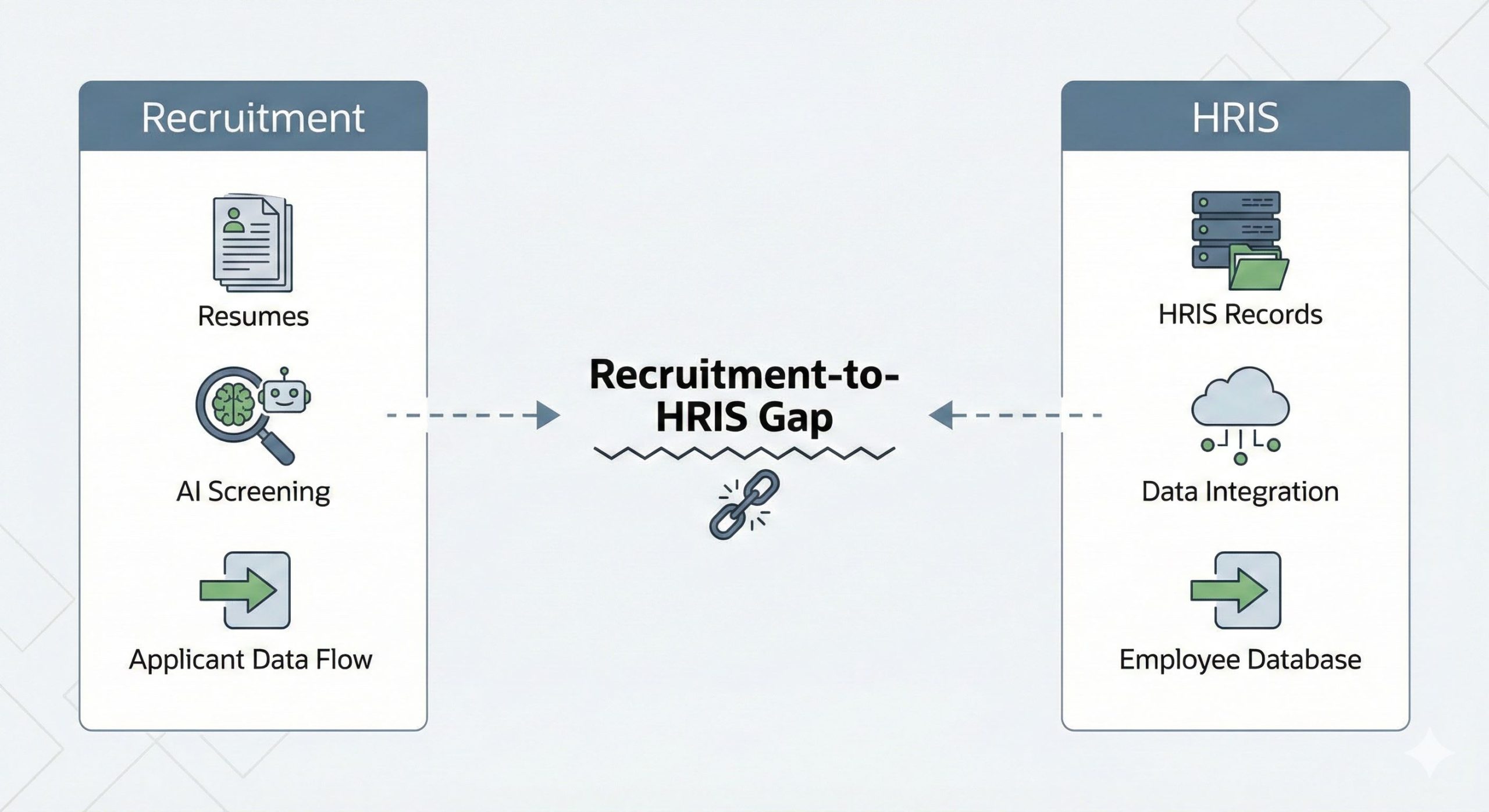

The Recruitment-to-HRIS Gap: Why Your “System of Record” Should Start…