AI-generated candidate fraud is no longer a fringe risk—it’s a daily reality for modern hiring teams. As synthetic resumes, proxy interviews, and deepfake identities flood applicant pipelines, recruiters must rethink trust, verification, and signal quality. This article explores why the problem is accelerating—and how trust-first, AI-assisted hiring workflows can fight back without slowing down.

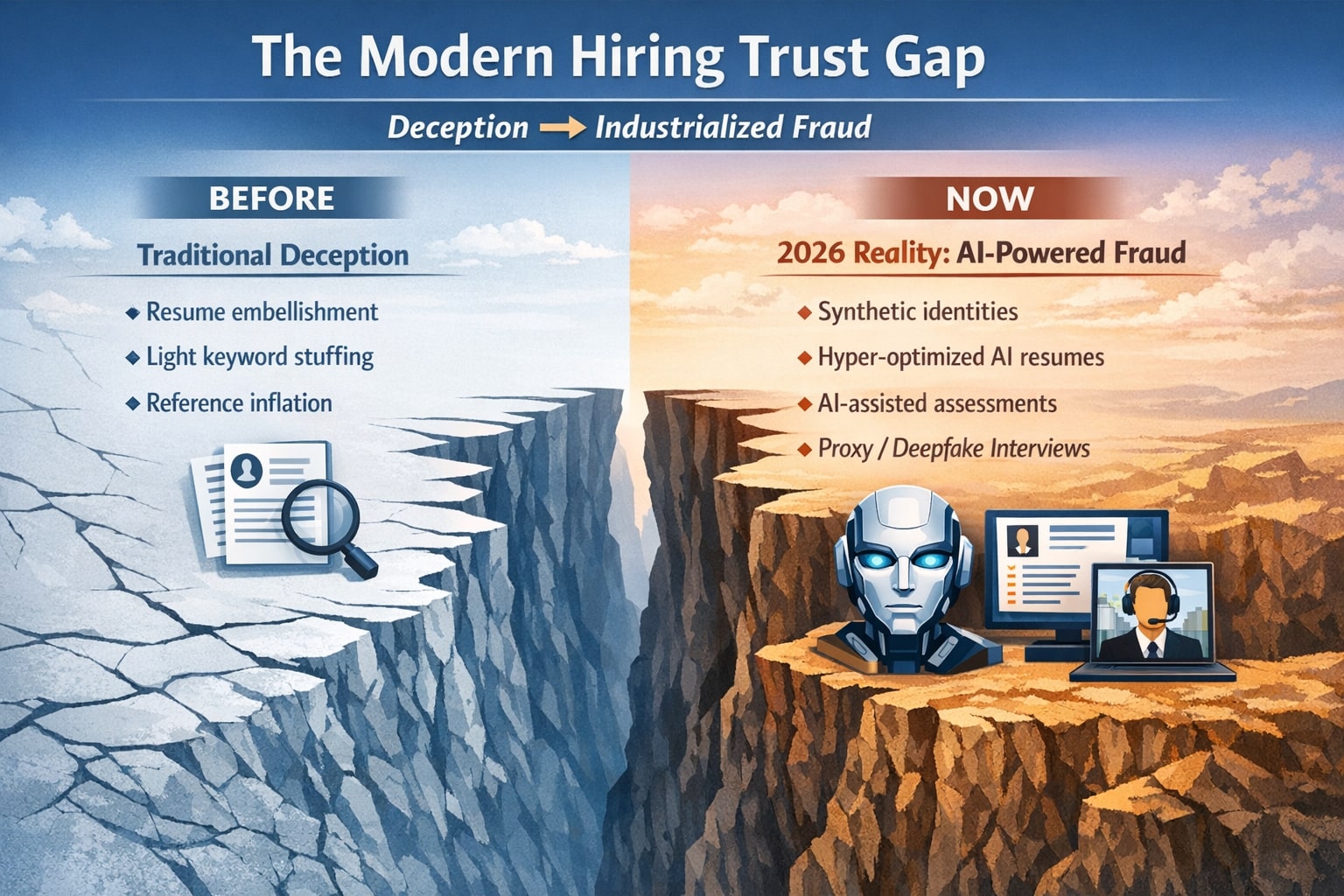

Talent acquisition has always had a deception problem. Candidates embellish. Recruiters filter. References confirm. But by early 2026, the scale and sophistication of candidate fraud have changed fast enough to create a real trust gap between employers and applicants.

Why? The same generative AI tools that help recruiters move faster are also helping threat actors manufacture “perfect” candidates: synthetic identities, hyper-optimized resumes, AI-assisted assessments, and even deepfake or proxy interviews designed to slip through automated screening. HR Dive’s recent coverage highlights a looming surge in AI-powered impersonation scams and Gartner’s prediction that by 2028, 1 in 4 job candidate profiles worldwide will be fake.

This is not a distant, niche risk. It’s becoming a day-to-day operational reality for remote and high-volume hiring teams.

Why AI-generated fraud is suddenly “working”

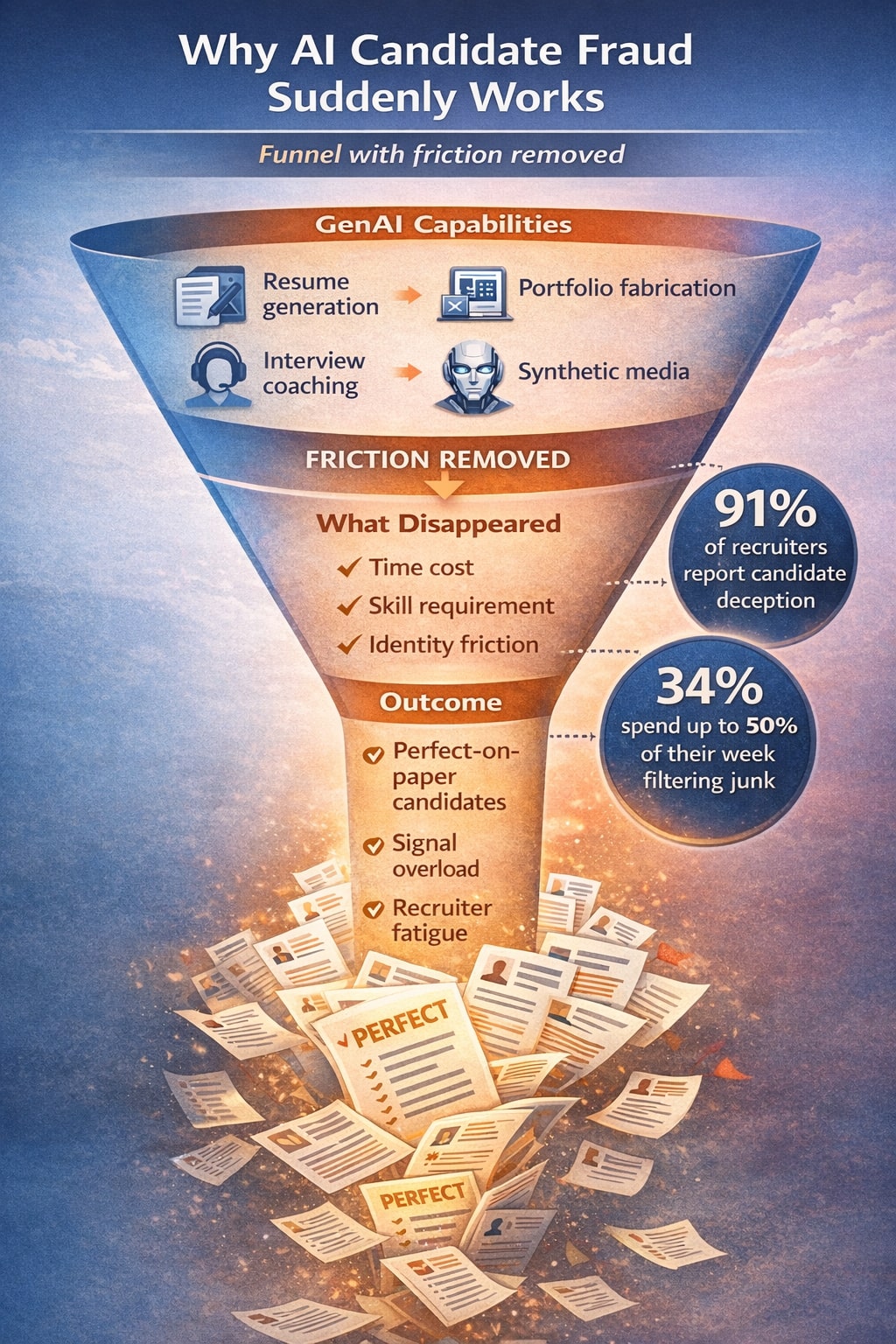

Fraudulent candidates used to fail because creating a believable profile took time and effort. GenAI removes that friction. A bad actor can now generate:

- A resume tuned to the exact job description (keywords, responsibilities, metrics)

- A polished portfolio, GitHub narrative, LinkedIn presence, and email cadence

- Interview scripts and “live” answers (including off-screen AI coaching)

- Synthetic headshots and, in extreme cases, deepfake video presence

The result is an “application pipeline” full of candidates who look immaculate on paper but collapse under verification.

This aligns with what many hiring teams are already reporting. A 2025 Greenhouse study found 91% of recruiters have spotted candidate deception, and 34% spend up to half their week filtering spam and junk applications. It also reported 65% of hiring managers have caught applicants using AI deceptively (including scripts, prompt injections, and deepfakes).

At the same time, volume is exploding. Employers describe themselves as “drowning” in AI-generated applications, and LinkedIn reportedly processes 11,000 submissions per minute amid a sharp increase in application volume.

When volume rises and signals get noisier, screening becomes easier to game.

Remote hiring is the highest-value target

Remote roles are disproportionately attractive to fraud rings because the upside is high and the verification barriers are lower. Recent reporting has linked remote-work hiring fraud to sophisticated operations, including attempts by suspected North Korean operatives to obtain IT jobs using identity deception and remote infrastructure (“laptop farms”). For example, Amazon’s chief security officer has said the company blocked more than 1,800 suspected North Korean agents from applying over a period of roughly 20 months.

This is where recruitment and cybersecurity fully collide: a fraudulent hire is not just a “bad hire.” It can become an access path to systems, data, and customer information—especially if onboarding and device provisioning occur before the person is fully verified.

The trust gap is widening on both sides

Candidates increasingly assume algorithms will reject them unfairly. Employers increasingly assume candidates may be “synthetic” or misrepresented. Gartner’s research captures both sides of the mistrust:

- Only 26% of job candidates trust AI will evaluate them fairly.

- Candidates are using AI too: Gartner found 39% of candidates reported using AI in the application process (often for resumes and cover letters).

- Gartner also noted that 6% of candidates admitted to interview fraud (impersonating someone else or having someone else interview on their behalf).

When both parties believe the other side is “gaming the system,” the hiring process stops feeling like selection and starts feeling like adversarial filtering.

Why some companies are adding “analog” steps back in

Some teams are reintroducing what Greenhouse calls “good friction.” In its data, 39% of hiring managers said they’re conducting more in-person interviews to verify authenticity.

That doesn’t mean going back to slow hiring. It means inserting verification checkpoints where identity and capability matter most.

Common 2026 “trust restoration” steps include:

- A verified-identity checkpoint before final interviews or offers (ID + face match, verified credentials, consistent contact details)

- Proctored assessments for technical roles (live coding, proctored exams, structured work simulations)

- In-person final rounds for high-risk roles (or at least a controlled environment session)

- Cross-validation of resume claims through structured follow-up questions (timelines, project specifics, tool choices, decision tradeoffs)

- Audit-ready documentation for why a candidate advanced or was rejected (helps compliance and internal QA)

Microsoft’s security guidance has also emphasized stronger identity verification patterns to combat fake-employee scams, reinforcing that identity assurance is becoming part of modern hiring security.

A practical framework: Detect, Verify, Document, Deter

If you want to tackle AI-generated recruitment fraud without slowing hiring to a crawl, use a layered approach:

Detect

Use automation to spot abnormal patterns early: near-duplicate resumes, inconsistent location signals, unnatural keyword density, unusual application velocity, mismatched communication style, or “too perfect” profiles that don’t show real-world nuance.
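To make the Detect step more concrete, here is a minimal Python sketch of two of the signals listed above: near-duplicate resumes and unusual keyword density. It assumes resumes are already available as plain text. The near-duplicate check uses word shingles compared with Jaccard similarity, which is one common technique among several; the 3-word shingle size, the 0.8 threshold, and all function names are illustrative assumptions, not CloudApper AI Recruiter’s method or any vendor’s API.

```python
import re
from itertools import combinations

# Illustrative defaults (assumptions, not recommended production values).
SHINGLE_SIZE = 3
DUPLICATE_THRESHOLD = 0.8


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def shingles(text: str, k: int = SHINGLE_SIZE) -> set[tuple[str, ...]]:
    """Return the set of overlapping k-word windows ("shingles") in the text."""
    words = tokenize(text)
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard(a: set, b: set) -> float:
    """Size of the intersection over size of the union; 0.0 if both sets are empty."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def near_duplicates(resumes: dict[str, str], threshold: float = DUPLICATE_THRESHOLD,
                    k: int = SHINGLE_SIZE) -> list[tuple[str, str, float]]:
    """Compare every pair of resumes (O(n^2)) and return pairs at or above threshold."""
    signatures = {rid: shingles(text, k) for rid, text in resumes.items()}
    flagged = []
    for (id_a, sig_a), (id_b, sig_b) in combinations(signatures.items(), 2):
        score = jaccard(sig_a, sig_b)
        if score >= threshold:
            flagged.append((id_a, id_b, round(score, 3)))
    return flagged


def keyword_density(resume: str, keywords: set[str]) -> float:
    """Fraction of resume tokens found in the keyword set (keywords assumed lowercase)."""
    words = tokenize(resume)
    if not words:
        return 0.0
    return sum(1 for w in words if w in keywords) / len(words)


if __name__ == "__main__":
    sample = {
        "A": "Led migration of payment services to Kubernetes and cut latency by 40 percent",
        "B": "Led migration of payment services to Kubernetes and cut latency by 40 percent",
        "C": "Taught high school chemistry for six years",
    }
    print(near_duplicates(sample))  # [('A', 'B', 1.0)]
    print(round(keyword_density(sample["A"], {"kubernetes", "latency"}), 3))  # 0.154
```

Pairwise comparison grows quadratically with the number of applications, so at high volume teams typically move to MinHash with locality-sensitive hashing to find candidate pairs. Either way, treat a flag as a prompt for human review rather than an automatic rejection: shared templates and job-description language can make legitimate resumes look similar.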

Verify

Move verification closer to decision points. Don’t spend human hours interviewing unverified candidates. Add a lightweight verification step before final interviews and a stronger one before the offer and onboarding.
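One way to keep verification at decision points is to make it a gate in the workflow itself. The Python sketch below assumes a hypothetical three-level verification scale and hypothetical stage names; it simply refuses to move a candidate into a stage until the required level has been recorded. The example checks attached to each level are drawn from the steps listed earlier and are illustrative only.

```python
from dataclasses import dataclass
from enum import IntEnum


class Verification(IntEnum):
    """Hypothetical verification levels, ordered from weakest to strongest."""
    NONE = 0
    LIGHT = 1   # for example: consistent contact details, ID + face match
    STRONG = 2  # for example: verified credentials plus a proctored or in-person check


# Hypothetical policy: minimum verification level required to ENTER each stage.
REQUIRED_BEFORE_STAGE = {
    "screening": Verification.NONE,
    "final_interview": Verification.LIGHT,
    "offer": Verification.STRONG,
    "onboarding": Verification.STRONG,
}


class VerificationRequired(Exception):
    """Raised when a candidate lacks the verification a stage requires."""


@dataclass
class Candidate:
    candidate_id: str
    verification: Verification = Verification.NONE
    stage: str = "screening"


def can_advance(candidate: Candidate, next_stage: str) -> bool:
    """True if the candidate's recorded verification meets the stage's minimum.

    Unknown stage names raise KeyError, so a typo fails closed instead of
    silently skipping verification.
    """
    return candidate.verification >= REQUIRED_BEFORE_STAGE[next_stage]


def advance(candidate: Candidate, next_stage: str) -> None:
    """Move the candidate to next_stage, or raise if verification is insufficient."""
    if not can_advance(candidate, next_stage):
        required = REQUIRED_BEFORE_STAGE[next_stage].name
        raise VerificationRequired(
            f"{candidate.candidate_id} needs {required} verification before {next_stage}"
        )
    candidate.stage = next_stage


if __name__ == "__main__":
    c = Candidate("cand-001", verification=Verification.LIGHT)
    advance(c, "final_interview")   # allowed: LIGHT meets LIGHT
    print(c.stage)                  # final_interview
    print(can_advance(c, "offer"))  # False: offer requires STRONG
```

Keeping the stage-to-level mapping in one table, rather than scattering checks across each step, means the policy can be tightened for high-risk roles without rewriting the workflow.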

Document

Keep transparent notes, scoring rationale, and workflow logs. This protects your team when hiring decisions are questioned and helps you improve the process over time.
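An audit trail is easier to defend when each decision is a structured record rather than a free-text note. The Python sketch below is one possible approach, not a compliance standard: it appends decision records that include a rationale and scores, and chains each record to the previous one with a SHA-256 hash so that later edits become detectable. The field names are hypothetical, and the in-memory list stands in for whatever durable storage your ATS provides.

```python
import hashlib
import json
from datetime import datetime, timezone


def _digest(body: dict) -> str:
    """SHA-256 over a canonical (key-sorted) JSON encoding of the record body."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


def append_decision(log: list[dict], candidate_id: str, stage: str, decision: str,
                    reviewer: str, rationale: str, scores: dict[str, float]) -> dict:
    """Append a hash-chained decision record to the log and return it."""
    entry = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "candidate_id": candidate_id,
        "stage": stage,
        "decision": decision,
        "reviewer": reviewer,
        "rationale": rationale,
        "scores": scores,
        # Link to the previous record; empty string for the first record.
        "prev_hash": log[-1]["hash"] if log else "",
    }
    entry["hash"] = _digest(entry)
    log.append(entry)
    return entry


def verify_chain(log: list[dict]) -> bool:
    """Recompute every hash and link; False if any record was altered or reordered."""
    prev_hash = ""
    for entry in log:
        body = {k: v for k, v in entry.items() if k != "hash"}
        if body.get("prev_hash") != prev_hash or entry.get("hash") != _digest(body):
            return False
        prev_hash = entry["hash"]
    return True


if __name__ == "__main__":
    log: list[dict] = []
    append_decision(log, "cand-001", "screening", "advance", "recruiter-a",
                    "Strong match on required skills", {"fit": 0.9})
    append_decision(log, "cand-001", "final_interview", "reject", "manager-b",
                    "Could not explain key project decisions", {"fit": 0.4})
    print(verify_chain(log))  # True
```

Hash chaining only makes tampering visible; it does not prevent it, so the log still needs access controls and retention rules that match your compliance requirements.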

Deter

Make expectations explicit: what’s acceptable AI assistance, what isn’t, and what verification steps will occur. Gartner specifically recommends setting clear expectations, using assessments to uncover fraud, and refining validation methods across the funnel.

Where CloudApper AI Recruiter fits (without turning hiring into “security theater”)

This is where the right recruitment automation can help—not by trusting AI blindly, but by designing a workflow where AI accelerates the right steps and supports verification.

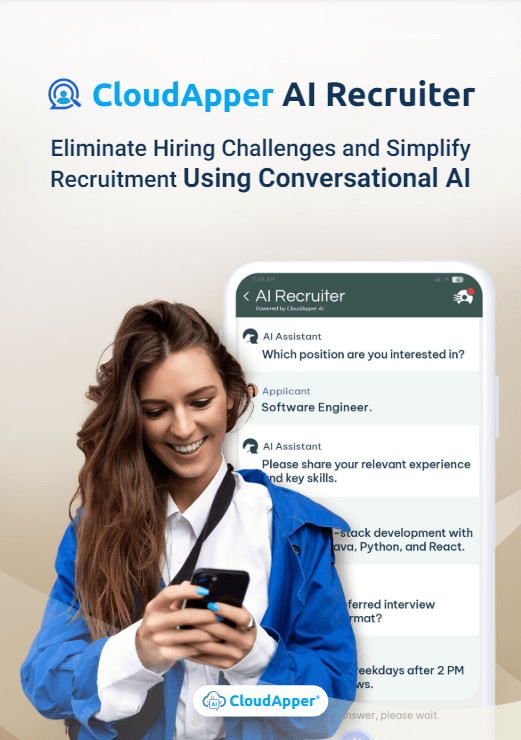

CloudApper AI Recruiter is positioned as a multi-agent recruitment solution that screens, scores, ranks, and schedules candidates while keeping recruiters focused on human judgment and relationship-building.

Here’s how it naturally supports trust-first hiring:

- Faster, structured screening with better signals

When resume volume spikes, recruiters need consistent scoring and comparison, not just keyword filters. CloudApper AI Recruiter’s screening and scoring approach helps prioritize top-fit candidates quickly, so humans spend their time where it matters most.

- Workflow checkpoints you can customize

Fraud mitigation works best when it’s part of the workflow, not a separate “security tool” nobody uses. CloudApper emphasizes customizable workflows and seamless integration with existing ATS/HRIS environments, which is exactly what teams need to insert verification steps (ID check, proctored assessment, structured live exercise) at the right stage.

- Candidate engagement that reduces drop-off without lowering standards

High friction creates drop-off. SHRM has reported that 92% of people who click “Apply” never finish online job applications, which is why simplifying the candidate experience matters even while adding “good friction” later.

CloudApper’s conversational application flow (including QR/text entry) and automated communication can reduce abandonment early; verification can then be applied at later, higher-value checkpoints.

- Audit-friendly hiring decisions

In a trust crisis, “why did we choose this person?” needs a clear answer. CloudApper’s emphasis on candidate comparisons, notes, and hiring metrics supports a decision trail that’s helpful for compliance and internal review.

The takeaway

AI didn’t “break” recruiting. It exposed a fragile part of the system: we relied too heavily on easy-to-forge signals (resumes, profiles, polished answers). In 2026, the teams that win will be the ones who rebuild trust with better signal quality, verification checkpoints, and transparent decision-making—without sacrificing speed.

If you want to modernize hiring for this new reality, look for platforms that help you move fast and build trust. A multi-agent approach, like CloudApper AI Recruiter, can help you automate repetitive tasks (screening, scoring, scheduling, communication) while adding smart verification steps to keep fraudulent candidates out and real candidates moving.

What is CloudApper AI Platform?

CloudApper AI is an advanced platform that enables organizations to integrate AI into their existing enterprise systems effortlessly, without the need for technical expertise, costly development, or upgrading the underlying infrastructure. By transforming legacy systems into AI-capable solutions, CloudApper allows companies to harness the power of Generative AI quickly and efficiently. This approach has been successfully implemented with leading systems like UKG, Workday, Oracle, Paradox, Amazon AWS Bedrock and can be applied across various industries, helping businesses enhance productivity, automate processes, and gain deeper insights without the usual complexities. With CloudApper AI, you can start experiencing the transformative benefits of AI today.
