AI recruiting tools can save time, but ROI often stalls when trust is low and results aren’t measured properly. This guide explains why that happens and gives a practical 7-step playbook to prove value fast—with clear metrics, better workflow fit, and a simple way to build candidate confidence.


Most hiring teams do not fail because “AI doesn’t work.” They fail at AI recruiting ROI: they cannot translate AI activity into measurable business outcomes.

That pattern is showing up across enterprise AI broadly. An MIT Project NANDA report (“The GenAI Divide”) found that 95% of organizations are getting zero measurable return from GenAI investments, while only a small minority are extracting significant value.

Recruiting is one of the first functions where that “no measurable return” problem becomes visible. Hiring touches brand, compliance, time-to-fill, and revenue impact. When results are unclear, skepticism spreads fast—especially among candidates. Gartner found only 26% of job candidates trust AI will fairly evaluate them, even as many believe AI is already part of screening.

This article provides a practical ROI and trust playbook for talent leaders who want AI to deliver outcomes they can defend to executives, recruiters, legal, and candidates.

The hype vs. reality gap in AI talent acquisition

AI adoption in recruiting is accelerating, but trust and measurement lag behind.

LinkedIn’s Future of Recruiting reports that among talent acquisition professionals already using generative AI, the average time saved is about 20% of their work week—roughly a full workday saved weekly. The same report notes AI literacy among TA pros grew 2.3x over the prior year.

At the same time, candidates remain skeptical. Gartner’s survey result—only 26% trust AI to evaluate them fairly—creates a practical conversion and employer-brand constraint if you do not communicate clearly and implement safeguards.

Voice-friendly question: Do AI hiring tools really work?

They work when they produce measurable outcomes that matter to the business (cycle time, throughput, quality proxies, and experience) and when candidates and recruiters accept how the tool is used. Productivity gains alone are not sufficient if trust collapses or compliance exposure grows.

Top 5 reasons recruiters (and candidates) distrust AI tools

- Low candidate trust becomes a drop-off risk.

  If only 26% of candidates trust AI to evaluate them fairly, you should expect skepticism unless you provide clear explanations, human review, and an appeal path.

- Fraud pressure makes everyone doubt the inputs.

  Gartner reports 6% of candidates admit to interview fraud and predicts 1 in 4 candidate profiles could be fake by 2028. That forces more verification, yet it also increases suspicion of AI-driven screening signals.

- Workflow mismatch leads to “quiet non-adoption.”

  The MIT NANDA research emphasizes that many pilots stall due to integration and learning gaps, not model quality. In recruiting, tools that add steps, require extra logins, or fail to fit recruiter motion get bypassed, then blamed for “no ROI.”

- Teams measure activity instead of outcomes.

  “We screened faster” is not ROI if it doesn’t translate into shorter cycle time, higher offer acceptance, better quality proxies, or lower cost per hire.

- Governance is missing or vague.

  In the U.S., existing Title VII frameworks around adverse impact and selection procedures still matter when AI is involved. The EEOC’s technical assistance outlines how adverse impact assessment concepts apply to software/algorithms/AI used in selection procedures.

Realistic ROI benchmarks for AI in recruiting

Use benchmarks to set expectations and avoid overpromising. These are not universal guarantees; they are signals you can use to calibrate targets and adoption plans.

| Use case | What “ROI” looks like in practice | Primary adoption risk | Source |
|---|---|---|---|
| Recruiter productivity (GenAI) | ~20% workload reduction (about 1 day/week saved) | Savings do not convert into faster hiring outcomes | LinkedIn Future of Recruiting |
| Candidate acceptance of screening | Higher completion rates, lower drop-off, fewer disputes | Only 26% trust AI fairness without transparency | Gartner |
| Fraud mitigation | Fewer wasted interviews; lower identity/security exposure | Fraud grows as synthetic identities and AI-assisted deception grow | Gartner |
| Enterprise ROI conversion (overall AI) | Measurable P&L outcomes (not just pilots and demos) | 95% report zero measurable return without integration and learning loops | MIT Project NANDA |

Voice-friendly question: What is the ROI of AI recruiting tools?

ROI is the measurable improvement in hiring outcomes (cycle time, throughput, quality proxy, and candidate experience) attributable to the tool, net of the tool’s full cost and the change-management effort required to drive adoption.

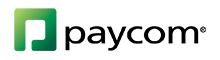

The ROI formula that survives executive scrutiny

Most AI ROI discussions fail because the numerator is vague and the denominator is incomplete. Use this operational model.

What belongs in the numerator:

- Time savings: recruiter hours saved (using a realistic adoption rate), hiring manager time saved, HR ops time saved

- Cost avoidance: reduced agency spend, fewer reopened requisitions, reduced downstream rework

- Improved outcomes: faster time-to-shortlist, improved interview-to-offer ratio, improved offer acceptance, quality proxies (e.g., 90-day retention)

What belongs in the denominator:

- Tool licensing/subscription

- Implementation and integration effort

- Ongoing governance: monitoring, audits, vendor management, security review
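To make the numerator and denominator concrete, here is a minimal Python sketch. It assumes the conventional definition ROI = (benefits - costs) / costs, which the article does not spell out, and every field name and dollar figure is a hypothetical placeholder rather than a benchmark. Note that time savings are discounted by a realistic adoption rate before they count as a benefit.

```python
from dataclasses import dataclass


@dataclass
class RoiInputs:
    """Hypothetical inputs for one measurement period (all names are illustrative)."""
    recruiter_hours_saved: float  # hours saved if every recruiter used the tool
    adoption_rate: float          # share of recruiters actually using it, 0..1
    loaded_hourly_cost: float     # fully loaded cost of one recruiter hour
    cost_avoidance: float         # e.g. reduced agency spend, fewer reopened reqs
    outcome_value: float          # monetized outcome gains, only if defensible
    licensing: float              # tool licensing/subscription
    implementation: float         # implementation and integration effort
    governance: float             # monitoring, audits, vendor management, security


def recruiting_roi(x: RoiInputs) -> float:
    """Return ROI as a fraction, assuming ROI = (benefits - costs) / costs."""
    time_savings = x.recruiter_hours_saved * x.adoption_rate * x.loaded_hourly_cost
    benefits = time_savings + x.cost_avoidance + x.outcome_value
    costs = x.licensing + x.implementation + x.governance
    if costs <= 0:
        raise ValueError("total cost must be positive")
    return (benefits - costs) / costs


# Made-up example values, not real benchmarks.
example = RoiInputs(
    recruiter_hours_saved=2000, adoption_rate=0.6, loaded_hourly_cost=50.0,
    cost_avoidance=30000.0, outcome_value=0.0,
    licensing=40000.0, implementation=15000.0, governance=5000.0,
)
print(f"ROI: {recruiting_roi(example):.0%}")
```

Leaving `outcome_value` at zero until you can defend a dollar figure keeps the estimate conservative. Rerunning the calculation with a lower `adoption_rate` shows how quickly the return shrinks when usage is low.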

If you hire in New York City (or hire NYC residents in scope), you also need to account for compliance requirements for automated employment decision tools (AEDTs), including bias-audit and notice expectations described by NYC’s Department of Consumer and Worker Protection and the NYC Admin Code requirements.

7-step playbook to maximize AI hiring ROI (without losing trust)

Step 1: Define three outcome metrics that matter

Pick three. Commit to them. Track weekly:

- Time-to-shortlist: requisition open to qualified slate

- Interview-to-offer ratio: a practical screening precision signal

- Quality proxy: 90-day retention, hiring manager satisfaction, early performance indicators
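The three metrics above can be computed from very simple records. The sketch below is one possible way to do it in Python. The record layout, function names, and sample numbers are assumptions for illustration, and the interview-to-offer ratio is interpreted here as interviews conducted per offer extended.

```python
from datetime import date
from statistics import median

# Hypothetical requisition records: (date opened, date qualified slate delivered).
requisitions = [
    (date(2025, 1, 6), date(2025, 1, 20)),
    (date(2025, 1, 13), date(2025, 1, 23)),
    (date(2025, 2, 3), date(2025, 2, 21)),
]


def time_to_shortlist_days(reqs):
    """Median days from requisition open to qualified slate."""
    return median([(slate - opened).days for opened, slate in reqs])


def interview_to_offer_ratio(interviews: int, offers: int) -> float:
    """Interviews per offer; a lower value suggests more precise screening."""
    if offers == 0:
        raise ValueError("no offers in this period")
    return interviews / offers


def retention_90_day(hires: int, still_employed_at_90_days: int) -> float:
    """Share of hires still employed 90 days after their start date."""
    if hires == 0:
        raise ValueError("no hires in this period")
    return still_employed_at_90_days / hires


print("Time-to-shortlist (median days):", time_to_shortlist_days(requisitions))
print("Interview-to-offer ratio:", interview_to_offer_ratio(interviews=24, offers=6))
print("90-day retention:", retention_90_day(hires=20, still_employed_at_90_days=18))
```

The median is used instead of the mean so that one unusually slow requisition does not distort the weekly number. That is a design choice, not a requirement.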

Step 2: Separate “assistive AI” from “decision AI”

Most trust breakdowns come from ambiguity. Document where AI:

- suggests candidates

- summarizes and highlights signals

- ranks applicants

- triggers workflow steps

- screens candidates out of a stage

With candidate trust already low, clarity is not optional.
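One lightweight way to document this is a small inventory of AI touchpoints. The following Python sketch is only an illustration: the stage names, the assist/decide classification of each item, and the `human_review` flags are assumptions you would replace with your own process.

```python
from dataclasses import dataclass
from enum import Enum


class Role(Enum):
    ASSIST = "assist"  # AI informs a human, who makes the call
    DECIDE = "decide"  # AI output changes a candidate's status directly


@dataclass(frozen=True)
class Touchpoint:
    stage: str
    action: str
    role: Role
    human_review: bool


# Hypothetical inventory; classify each item for your own workflow.
inventory = [
    Touchpoint("sourcing", "suggests candidates", Role.ASSIST, True),
    Touchpoint("screening", "summarizes and highlights signals", Role.ASSIST, True),
    Touchpoint("screening", "ranks applicants", Role.ASSIST, True),
    Touchpoint("scheduling", "triggers workflow steps", Role.ASSIST, False),
    Touchpoint("screening", "screens candidates out of a stage", Role.DECIDE, False),
]


def review_gaps(items):
    """Return decision touchpoints that currently have no human review."""
    return [t for t in items if t.role is Role.DECIDE and not t.human_review]


for t in review_gaps(inventory):
    print(f"Review gap: {t.stage} / {t.action}")
```

The same inventory can feed the candidate-facing explanation, since it already states what the AI does and where humans review outcomes.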

Step 3: Embed AI into the tools recruiters already use

MIT Project NANDA’s research highlights that pilots often stall because organizations do not close the learning and integration gap. Operationally, that means “one more dashboard” usually becomes “no adoption.”

Step 4: Use a candidate transparency script (simple, not legalese)

Write a short, consistent explanation:

- What data is considered

- What the AI does (assist vs decide)

- Where humans review outcomes

- How candidates can request accommodation or reconsideration

Step 5: Implement adverse-impact monitoring as an operating rhythm

The EEOC’s technical assistance discusses how existing Title VII requirements may apply when AI is used in employment selection procedures, including the concept of assessing adverse impact and job-relatedness/business necessity in disparate impact analysis.

Practical cadence:

- Monthly adverse-impact checks for high-volume roles

- Quarterly stage-by-stage analysis (application → screen → interview → offer)

- Document remediation steps and decision rationale
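A monthly check can start with selection-rate ratios per stage. The Python sketch below uses the four-fifths rule of thumb (a group's selection rate below 80% of the highest group's rate is flagged for review). Treat that threshold as an assumption: it is a common screening heuristic, not a legal test, and it is no substitute for statistical analysis or legal advice. Group labels and counts are made up.

```python
def selection_rates(counts):
    """counts maps group -> (applicants, selected) for one hiring stage."""
    return {g: sel / apps for g, (apps, sel) in counts.items() if apps > 0}


def impact_ratios(counts):
    """Each group's selection rate divided by the highest group's rate."""
    rates = selection_rates(counts)
    top = max(rates.values())
    if top == 0:
        raise ValueError("no selections recorded at this stage")
    return {g: rate / top for g, rate in rates.items()}


def flag_for_review(counts, threshold=0.8):
    """Groups whose impact ratio falls below the rule-of-thumb threshold."""
    return sorted(g for g, ratio in impact_ratios(counts).items() if ratio < threshold)


# Hypothetical screen-stage counts: group -> (applicants, passed screen).
screen_stage = {
    "group_a": (200, 60),
    "group_b": (150, 30),
    "group_c": (100, 28),
}

for group, ratio in impact_ratios(screen_stage).items():
    print(f"{group}: impact ratio {ratio:.2f}")
print("Flag for review:", flag_for_review(screen_stage))
```

Running the same function on each stage (application, screen, interview, offer) gives the quarterly stage-by-stage view. Small groups produce noisy ratios, so a flag is a prompt to investigate, not a conclusion.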

Step 6: Add fraud controls that don’t punish honest candidates

Gartner’s fraud signals are not theoretical. When 6% admit interview fraud and Gartner predicts 1 in 4 profiles could be fake by 2028, identity and integrity checks become part of operational ROI.

Use proportional controls based on role risk (e.g., higher scrutiny for privileged access roles) and focus on process integrity rather than surveillance.

Step 7: Prove ROI in 90 days with a controlled rollout

Run a real test:

- Choose 1–2 job families

- Establish a baseline period and a live period

- Track adoption alongside outcomes

- Publish before/after results internally (cycle time, throughput, quality proxy)

Then use the MIT insight as your governance principle: value comes from closing learning loops and integrating into real operations, not from “deploying AI” as a one-time event.
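The before/after comparison in this step can be sketched in a few lines of Python. The data layout and numbers below are hypothetical, and a simple baseline-versus-live comparison is not a randomized experiment, so seasonality or changes in role mix can explain part of any difference.

```python
from statistics import mean

# Hypothetical per-requisition records for one job family.
baseline = [16, 14, 18, 12]  # days to shortlist before the tool
live = [(10, True), (12, True), (15, False), (11, True)]  # (days, tool_used)


def rollout_summary(baseline_days, live_records):
    """Compare average time-to-shortlist across periods and report adoption."""
    base_avg = mean(baseline_days)
    live_avg = mean([days for days, _ in live_records])
    used = [days for days, tool_used in live_records if tool_used]
    return {
        "baseline_avg_days": base_avg,
        "live_avg_days": live_avg,
        "relative_change": (live_avg - base_avg) / base_avg,
        "adoption_rate": len(used) / len(live_records),
        # Average for requisitions where the tool was actually used.
        "adopted_avg_days": mean(used) if used else None,
    }


for key, value in rollout_summary(baseline, live).items():
    print(f"{key}: {value}")
```

Reporting `adoption_rate` next to the outcome change makes it visible when a weak result comes from low usage and not from the tool itself.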

Where CloudApper AI Recruiter Fits (Without Adding More Complexity)

If your biggest issue is volume, the fastest path to ROI is not “more AI.” It’s AI that fits your existing workflow and produces decisions you can explain.

CloudApper AI Recruiter is designed for exactly that. It helps hiring teams screen and score applicants more consistently, surface the top-fit candidates faster, and reduce time spent on repetitive steps like shortlisting and scheduling—without forcing you to replace your ATS or rebuild your process. The goal is practical: fewer wasted reviews, faster movement from application to interview, and clearer metrics you can report back to leadership.

When you use a tool like AI Recruiter, focus on outcomes you can measure in 30–90 days: time-to-shortlist, interview-to-offer ratio, and a quality proxy like 90-day retention or hiring manager satisfaction. If those move in the right direction, trust and adoption usually follow.

Quick summary

- Enterprise GenAI often fails to produce measurable return because tools are not operationally integrated and organizations do not close learning loops.

- Candidate trust is a hard constraint: only 26% trust AI will fairly evaluate them.

- Recruiters are seeing productivity upside: LinkedIn reports about 20% time saved weekly among TA pros already using genAI, and 2.3x growth in AI literacy skills.

- Fraud is rising: Gartner reports 6% admit interview fraud and predicts 1 in 4 candidate profiles could be fake by 2028.

- ROI improves when you measure outcomes, embed AI into workflow, communicate transparently, and monitor adverse impact in line with Title VII concepts.


FAQ

How do I calculate ROI for AI recruiting tools?

Use an ROI model that includes (1) time savings, (2) cost avoidance, and (3) outcome improvements—then subtract full implementation and governance costs. Track adoption alongside outcomes because low usage produces “zero return” even if the tool is strong.

Why do so many AI pilots fail to show ROI?

MIT Project NANDA reports most organizations struggle to turn pilots into measurable business impact, pointing to learning and integration gaps rather than model quality as a key driver of “no measurable P&L impact.”

What are common AI bias issues in talent acquisition?

Common issues include disparate impact across protected groups, lack of job-related validation, and insufficient monitoring. The EEOC’s technical assistance discusses how adverse impact assessment concepts under Title VII apply when software/algorithms/AI are used as selection procedures.

Which AI tools deliver real ROI for recruiters in 2026?

Tools that reduce repetitive work and are embedded into daily recruiter workflows tend to show the fastest measurable productivity impact. LinkedIn reports that TA pros already using generative AI cite about a 20% workload reduction on average.

How can employers build trust in AI screening with candidates?

Be explicit about how AI is used (assist vs decide), keep humans in the loop for consequential decisions, provide an appeal/accommodation path, and publish your monitoring approach. Candidate trust is a measurable constraint, with Gartner finding only 26% trust AI to fairly evaluate them.

You might also be interested in:

Weekly/Bi-Weekly Time Clock Calculator: Hours, Breaks, Overtime & Pay Tracker

What is CloudApper AI Platform?

CloudApper AI is an advanced platform that enables organizations to integrate AI into their existing enterprise systems effortlessly, without the need for technical expertise, costly development, or upgrading the underlying infrastructure. By transforming legacy systems into AI-capable solutions, CloudApper allows companies to harness the power of Generative AI quickly and efficiently. This approach has been successfully implemented with leading systems like UKG, Workday, Oracle, Paradox, Amazon AWS Bedrock and can be applied across various industries, helping businesses enhance productivity, automate processes, and gain deeper insights without the usual complexities. With CloudApper AI, you can start experiencing the transformative benefits of AI today. Learn More

CloudApper AI Solutions for HR

- Works with

- and more.

Similar Posts

How cNPS Reflects Your Recruitment and Employer Brand

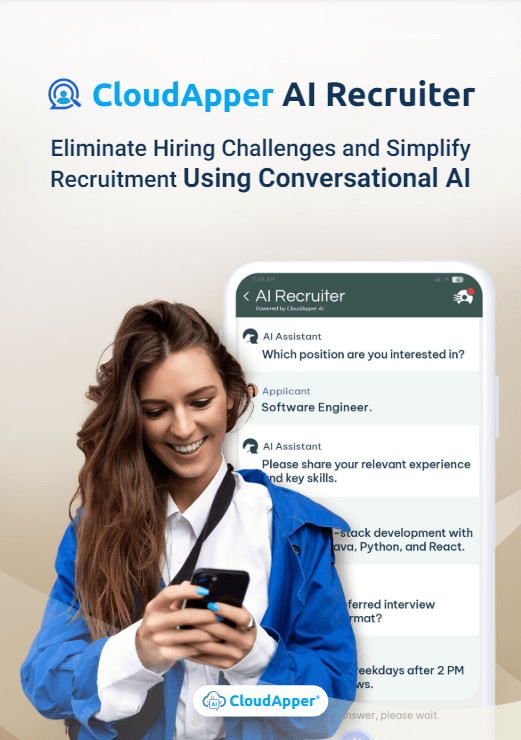

The Recruitment-to-HRIS Gap: Why Your “System of Record” Should Start…