Stay compliant with automated screening for hourly jobs using CloudApper's conversational AI: Assess candidates via relevant scenarios instead of resumes, monitor for disparate impact, document decisions transparently, incorporate human review, and validate criteria for EEOC alignment. Minimize bias, avoid discrimination claims, and hire faster with defensible, fair processes.

The compliance officer’s email lands in your inbox at 4:47 PM on a Friday: “We need to discuss our automated screening system. The EEOC has questions about disparate impact in our hourly hiring.” Your stomach drops. You implemented AI screening six months ago specifically to improve efficiency and reduce bias—not create compliance nightmares. Now you’re facing potential investigations, audits, and the possibility that the technology you adopted to solve problems has created bigger ones.

This scenario is becoming alarmingly common as organizations rush to adopt automated screening for high-volume hourly hiring without fully understanding the compliance implications. The promise is compelling: process hundreds of applications instantly, eliminate human bias, standardize evaluation, and accelerate hiring. The reality is more complex: new regulations, potential disparate impact, transparency requirements, and legal exposure that many talent acquisition teams aren’t prepared to navigate.

Learning how to stay compliant with automated screening isn’t optional anymore—it’s the price of admission for using these powerful tools. For TA professionals managing continuous hiring across healthcare, retail, and manufacturing, compliance isn’t about checking boxes or avoiding lawsuits, though those matter. It’s about building screening systems that are simultaneously efficient, fair, legally defensible, and actually effective at identifying strong hourly workers.

TL;DR

CloudApper AI Recruiter enables compliant automated screening for hourly jobs by using conversational AI assessments focused on job-relevant skills, eliminating biased criteria, providing disparate impact monitoring, full documentation, human oversight, transparency, and validation support — reducing legal risks while speeding up frontline hiring in retail, healthcare, and manufacturing.

The Compliance Landscape for Automated Screening

The regulatory environment surrounding automated hiring tools has transformed dramatically over the past three years, catching many organizations off guard. What was once a largely unregulated space now involves multiple layers of federal, state, and local requirements that create substantial compliance obligations.

At the federal level, the Equal Employment Opportunity Commission has made algorithmic hiring a priority enforcement area. Its position is clear: automated systems are subject to the same anti-discrimination laws as human decision-makers. If your screening tool produces disparate impact, meaning protected groups are filtered out at significantly different rates, you must demonstrate that the criteria are job-related and consistent with business necessity. This is a high bar that many screening systems can’t clear.

State and local regulations add complexity. New York City’s Local Law 144 requires annual bias audits of automated employment decision tools and mandates disclosure to candidates. Illinois requires notice and consent before AI is used to analyze video interviews. Maryland requires applicant consent before facial recognition is used during interviews. California’s privacy laws affect how candidate data can be collected and used. The patchwork of requirements means organizations operating across multiple states face compliance obligations that vary by jurisdiction.

Beyond explicit AI hiring laws, traditional employment regulations apply with renewed force. The Americans with Disabilities Act, Title VII of the Civil Rights Act, the Age Discrimination in Employment Act—all govern how automated screening must operate. Your AI system can’t ask questions or use criteria that would be illegal if a human recruiter asked them. It can’t discriminate based on protected characteristics, even unintentionally. It must provide reasonable accommodations for candidates with disabilities.

The enforcement landscape is also evolving. We’re seeing class-action lawsuits targeting algorithmic hiring systems, EEOC investigations specifically examining automated tools, and state attorneys general scrutinizing AI employment practices. The legal precedents being established today will shape compliance requirements for years to come.

For organizations processing thousands of hourly applications annually, this regulatory complexity creates genuine challenges. You need automated screening to manage volume efficiently, but every screening decision carries compliance risk. The solution isn’t abandoning automation—the business case is too compelling—but implementing it thoughtfully with compliance built in from the start.

Common Compliance Failures in Automated Screening

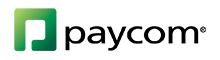

Understanding where automated screening goes wrong helps prevent these failures in your own implementation. The most frequent compliance problems share common patterns:

Using Inappropriate Screening Criteria: Many automated systems filter candidates based on factors that seem reasonable but don’t actually predict job performance—educational requirements for roles that don’t need them, employment gap penalties that disadvantage caregivers, credit checks that disproportionately impact certain populations, or ZIP code filtering that creates geographic bias. When these criteria produce disparate impact without business justification, they create legal liability.

Lack of Validation: Compliance requires demonstrating that your screening criteria actually predict job success. Many organizations implement automated screening without conducting validation studies to show that the criteria relate to on-the-job performance. Without this evidence, you can’t defend against a disparate impact claim when one arises.

Insufficient Transparency: Candidates increasingly have rights to know when AI is screening them and how decisions are made. Organizations that operate “black box” screening systems without disclosure violate emerging transparency requirements and undermine candidate trust.

Inadequate Human Oversight: Purely automated screening decisions without human review create compliance risks. Regulations increasingly require meaningful human involvement in hiring decisions, not just rubber-stamping algorithmic recommendations.

Poor Documentation: When auditors or litigants request evidence about how your screening operates, incomplete documentation is devastating. Organizations that can’t produce records of screening criteria, candidate evaluations, or decision rationale face adverse presumptions in legal proceedings.

Failure to Monitor Disparate Impact: Many organizations implement automated screening and never analyze whether it produces different outcomes across demographic groups. This blind spot prevents early detection of compliance problems before they escalate.

Inconsistent Application: When screening criteria vary by location, recruiter, or time period without justification, you create consistency problems that undermine compliance. If candidates in similar circumstances receive different treatment, disparate treatment claims become viable.

Building Compliance into Automated Screening

The good news is that compliance and effectiveness aren’t opposing goals. Well-designed automated screening systems achieve both simultaneously by incorporating compliance considerations from initial design through ongoing operations.

Start with Job Analysis: Before configuring any screening criteria, conduct thorough job analysis to identify what actually predicts success in your hourly roles. Involve frontline managers, high performers, and HR professionals in defining requirements. Document this process meticulously—it becomes your evidence that screening criteria are job-related.

Focus on Job-Relevant Factors: Screen exclusively for factors that genuinely predict performance in your specific roles. For retail positions, this might include customer service orientation, schedule availability, communication skills, and reliability indicators. For warehouse roles: physical capabilities, safety awareness, attention to detail, and procedure-following abilities. Eliminate credentials that don’t correlate with success.

Implement Validation Processes: Track whether your screening criteria actually predict job performance, retention, and other success metrics. Statistical validation demonstrates that your system identifies better candidates, providing the business necessity defense for any disparate impact. Make this an ongoing practice, not a one-time activity.

Ensure Transparency: Be open with candidates about automated screening. Inform them that AI is part of your process, explain generally what factors you evaluate, and provide avenues for questions or concerns. Transparency builds trust and satisfies emerging disclosure requirements.

Maintain Human Oversight: Design workflows where humans review screening recommendations and make final decisions. The AI handles initial assessment and provides recommendations, but recruiters evaluate context, apply judgment, and determine outcomes. This hybrid approach captures efficiency while maintaining human accountability.

Monitor for Disparate Impact: Regularly analyze whether your screening produces different pass-through rates across protected groups. If certain populations advance at significantly different rates, investigate immediately. You need to understand whether criteria inadvertently discriminate and whether they can be modified to reduce impact while maintaining predictive validity.

Document Everything: Maintain comprehensive records of screening criteria, candidate evaluations, decision rationale, validation studies, disparate impact analyses, and any adjustments made over time. Treat documentation as insurance—you hope to never need it, but it’s invaluable when compliance questions arise.

Provide Accommodation Pathways: Ensure candidates with disabilities can request reasonable accommodations in your screening process. Alternative formats, extended time, or modified assessments may be necessary to comply with ADA requirements.

Regular Compliance Reviews: Conduct periodic audits of your screening system with legal counsel and compliance professionals. Proactively identify risks and address them before they become enforcement actions or litigation.
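The documentation and human-oversight practices above can be illustrated with a small record structure. This is a minimal sketch, not CloudApper's data model: the `ScreeningRecord` class, its field names, and the sample values are all hypothetical, chosen only to show how an AI recommendation, its rationale, and the human reviewer's final decision might be kept together in one auditable record.

```python
import json
from dataclasses import dataclass, field, asdict
from datetime import datetime, timezone
from typing import Dict, Optional


@dataclass
class ScreeningRecord:
    """Hypothetical audit record for one screening decision."""
    candidate_id: str
    role: str
    criteria_version: str            # which documented criteria set was applied
    factor_scores: Dict[str, float]  # job-relevant factors only
    ai_recommendation: str           # e.g. "advance" or "hold"
    rationale: str                   # plain-language explanation of the scoring
    reviewer: Optional[str] = None
    final_decision: Optional[str] = None
    created_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def record_human_decision(self, reviewer: str, decision: str) -> None:
        """Attach the human reviewer and their decision to the record."""
        if not reviewer:
            raise ValueError("a named human reviewer is required")
        self.reviewer = reviewer
        self.final_decision = decision

    def is_final(self) -> bool:
        """A record counts as final only after a human has decided."""
        return self.reviewer is not None and self.final_decision is not None

    def to_json(self) -> str:
        """Serialize the record for retention alongside other hiring records."""
        return json.dumps(asdict(self), sort_keys=True)


# Illustrative values only.
record = ScreeningRecord(
    candidate_id="c-001",
    role="retail associate",
    criteria_version="2024-01",
    factor_scores={"availability": 0.9, "customer_service": 0.8},
    ai_recommendation="advance",
    rationale="Strong availability match and customer service scenario answers.",
)
pending = record.is_final()          # no human decision yet
record.record_human_decision("recruiter-17", "advance")
```

Keeping the criteria version and rationale on every record is one way to answer later questions about what was evaluated and why, without reconstructing the configuration after the fact.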

The Benefits of Compliant Automated Screening

Organizations that invest in building compliance into automated screening discover benefits extending well beyond risk mitigation:

Reduced Legal Exposure: Properly designed systems with built-in compliance safeguards dramatically reduce the risk of discrimination claims, regulatory investigations, and class-action lawsuits. This risk reduction has quantifiable financial value.

Better Quality of Hire: Focusing screening on genuinely predictive factors rather than credential proxies leads to better hiring decisions. You select candidates who will actually succeed rather than those who look good on paper.

Improved Diversity: Compliant screening that eliminates inappropriate criteria naturally increases diversity. You stop systematically filtering out candidates from underrepresented backgrounds who lack traditional credentials but possess relevant capabilities.

Enhanced Employer Brand: Candidates notice when screening processes feel fair and transparent. Positive candidate experience, even for rejected applicants, strengthens your reputation in local labor markets.

Operational Efficiency: Compliant systems are often more efficient because they focus on relevant factors rather than processing unnecessary information. Streamlined screening that evaluates what matters accelerates hiring without sacrificing quality.

Competitive Advantage: While competitors struggle with compliance failures or avoid automation entirely due to compliance fears, you operate efficient, defensible screening that fills positions faster with better candidates.

Peace of Mind: Perhaps most valuable, knowing your screening system can withstand regulatory scrutiny lets you focus on strategic talent acquisition rather than worrying about compliance landmines.

How CloudApper AI Recruiter Supports Compliant Screening

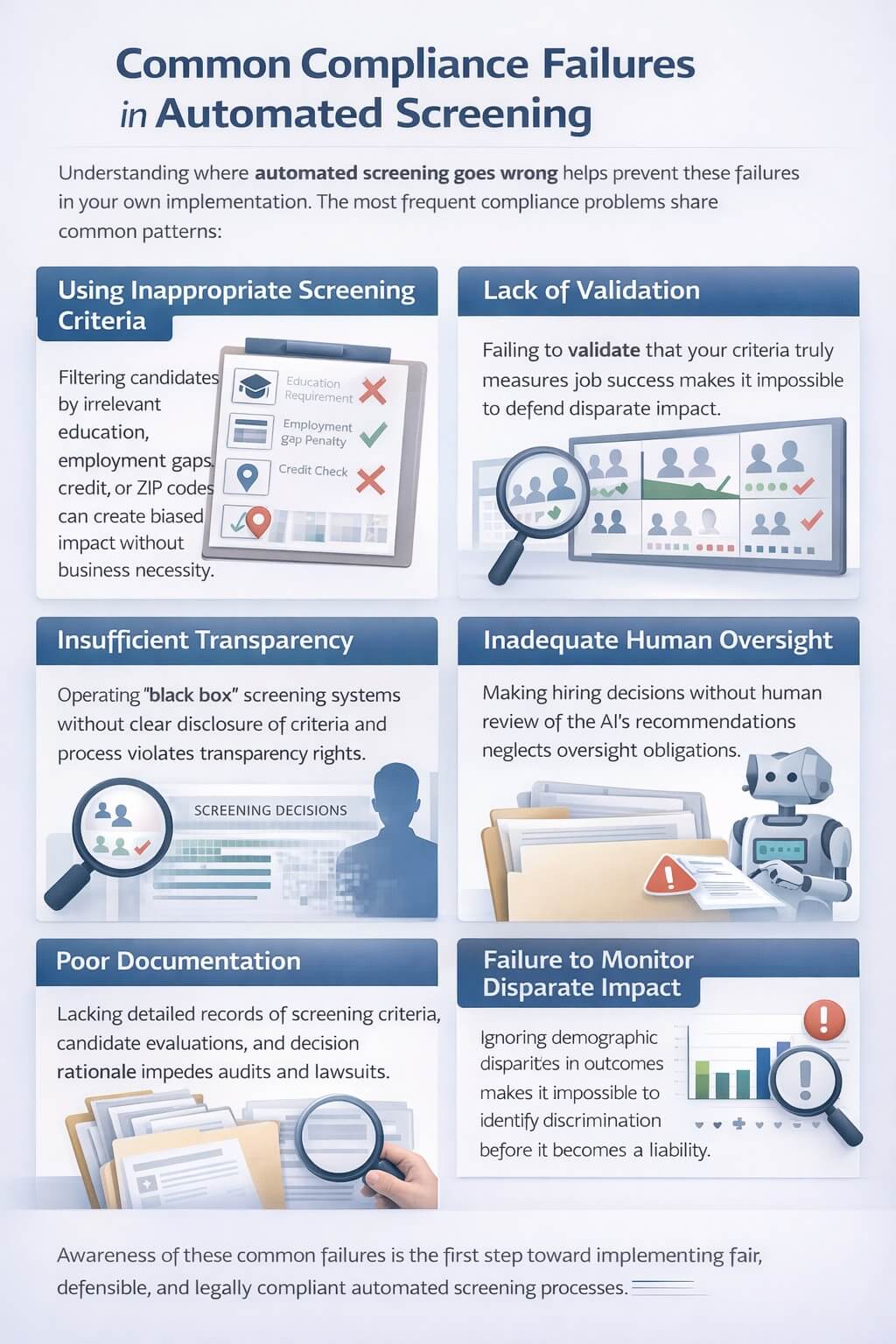

CloudApper AI Recruiter was designed with compliance as a foundational requirement, not an afterthought. The platform enables high-volume hourly screening that meets the most stringent regulatory standards while maintaining operational efficiency.

The system’s conversational AI approach inherently supports compliance by evaluating candidates through job-relevant questions rather than credential parsing. Instead of filtering based on educational background or employment history—factors prone to disparate impact—CloudApper assesses actual capabilities through scenario-based conversations.

For retail positions, the platform asks candidates about customer service approaches, communication style, problem-solving methods, and availability. For warehouse roles, it evaluates safety awareness, physical capabilities, teamwork orientation, and reliability factors. These assessments focus squarely on job-relevant skills, reducing the risk of inappropriate screening criteria.

CloudApper maintains comprehensive documentation of every candidate interaction automatically. Each screening conversation is recorded, every response is captured, and all scoring is explained. When compliance questions arise, you can produce complete records showing how candidates were evaluated, what criteria were applied, and why specific decisions were made. This documentation transparency satisfies regulatory requirements and supports legal defense if needed.

The platform includes built-in disparate impact monitoring. Talent acquisition teams can analyze pass-through rates across demographic groups, identify whether any screening criteria produce differential outcomes, and investigate potential compliance issues proactively. This continuous monitoring enables early detection and correction before problems escalate.

Human oversight is central to CloudApper’s workflow design. The AI handles initial candidate engagement and skills assessment, but recruiters review comprehensive candidate profiles and make hiring decisions. This hybrid approach captures automation efficiency while preserving the human judgment that regulations increasingly require.

CloudApper also supports transparency requirements by providing candidates clear information about the screening process. The conversational format naturally explains what’s being evaluated and why, creating positive candidate experience while satisfying disclosure obligations.

For organizations operating across multiple states with varying regulations, CloudApper’s flexibility enables customization to meet jurisdiction-specific requirements. You can adjust screening approaches, disclosure language, and documentation practices to align with local laws while maintaining operational consistency.

Perhaps most importantly, CloudApper enables the validation studies necessary for compliance. The platform tracks which assessed factors correlate with job performance and retention, providing the statistical evidence needed to demonstrate that your screening criteria are predictive and job-related. This validation capability is essential for defending against disparate impact allegations.

Practical Compliance Checklist

To help talent acquisition teams stay compliant with automated screening, use this comprehensive checklist:

Before Implementation:

- Conduct job analysis documenting essential functions and success factors

- Identify job-relevant screening criteria with business justification

- Review criteria with legal counsel for potential compliance issues

- Establish validation methodology to prove predictive validity

- Design human oversight workflows

- Create candidate disclosure and transparency protocols

During Implementation:

- Configure screening to focus exclusively on job-relevant factors

- Test system with diverse candidate samples

- Train recruiters on compliance requirements and oversight responsibilities

- Establish documentation and record retention procedures

- Implement disparate impact monitoring processes

- Create accommodation request pathways

Ongoing Operations:

- Conduct quarterly disparate impact analysis

- Perform annual validation studies

- Review and update screening criteria based on performance data

- Maintain comprehensive documentation of all screening activities

- Provide regular compliance training to recruiting teams

- Stay informed about evolving regulations in your jurisdictions

- Conduct periodic compliance audits with legal counsel

Frequently Asked Questions

Q: Do we need to disclose to candidates that we use automated screening?

A: Disclosure requirements vary by jurisdiction. New York City requires notice when automated tools make or substantially assist employment decisions. Other states have similar or emerging requirements. Best practice is proactive disclosure regardless of legal requirements—transparency builds trust and demonstrates good faith. Inform candidates generally that AI assists in screening without revealing proprietary details about your system.

Q: What constitutes “disparate impact” that creates compliance risk?

A: Disparate impact occurs when neutral screening criteria produce significantly different pass-through rates across protected groups. The EEOC uses the “four-fifths rule” as an initial indicator: if the selection rate for one group is less than 80% of the rate for the group with the highest selection rate, disparate impact may exist. However, statistical significance matters too. Work with counsel to analyze your specific data rather than relying on rules of thumb.
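The four-fifths check described in this answer can be sketched in a few lines. The example below is illustrative only: the group labels and counts are made up, the function names are hypothetical, and the pooled two-proportion z-test is just one common way to look at statistical significance, not a method the EEOC or any particular vendor prescribes.

```python
from math import erf, sqrt


def selection_rates(counts):
    """counts maps group -> (applicants, advanced); returns group -> rate."""
    return {g: adv / apps for g, (apps, adv) in counts.items() if apps > 0}


def impact_ratios(counts):
    """Each group's selection rate divided by the highest group's rate."""
    rates = selection_rates(counts)
    top = max(rates.values(), default=0.0)
    if top == 0:
        raise ValueError("no group has a positive selection rate")
    return {g: r / top for g, r in rates.items()}


def flag_four_fifths(counts, threshold=0.8):
    """Groups whose impact ratio falls below the four-fifths threshold."""
    return sorted(g for g, ratio in impact_ratios(counts).items() if ratio < threshold)


def two_proportion_p_value(apps_a, adv_a, apps_b, adv_b):
    """Two-sided p-value for a difference in selection rates (pooled z-test)."""
    p_a, p_b = adv_a / apps_a, adv_b / apps_b
    pooled = (adv_a + adv_b) / (apps_a + apps_b)
    se = sqrt(pooled * (1 - pooled) * (1 / apps_a + 1 / apps_b))
    if se == 0:
        return 1.0
    z = abs(p_a - p_b) / se
    normal_cdf = 0.5 * (1 + erf(z / sqrt(2)))
    return 2 * (1 - normal_cdf)


# Made-up counts: (applicants, advanced past screening) per group.
counts = {"group_a": (200, 120), "group_b": (150, 60)}

ratios = impact_ratios(counts)        # group_b ratio is about 0.67, below 0.8
flagged = flag_four_fifths(counts)
p_value = two_proportion_p_value(200, 120, 150, 60)
```

A flagged group is a prompt for investigation, not a legal conclusion. Small samples can trip the 80% threshold by chance, which is why the ratio and a significance test are usually read together.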

Q: Can we use automated screening for all hiring decisions, or must humans be involved?

A: Current best practice and emerging regulations favor human involvement in final decisions. AI can handle initial screening and recommendations, but humans should review candidates and make hiring determinations. This hybrid approach satisfies regulatory preferences for meaningful human oversight while capturing automation efficiency. Purely automated decisions without human review create significant compliance risk.

Q: How often should we validate that our screening criteria predict job success?

A: Initial validation should occur before deployment. Ongoing validation should happen at least annually, with continuous monitoring of basic metrics. If you make significant changes to screening criteria, validate again. Regular validation provides the business necessity evidence needed to defend against disparate impact claims and ensures your system continues predicting success as job requirements evolve.
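As a rough illustration of what "validating that criteria predict success" can look like, the sketch below computes a Pearson correlation between screening scores and a later outcome such as 90-day retention. The scores and outcomes are invented, the `pearson` helper is hypothetical, and a real validation study would need much larger samples and a design reviewed by counsel or an industrial-organizational psychologist.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation between two equal-length numeric sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined when a sequence is constant")
    return cov / sqrt(var_x * var_y)


# Invented data: screening score at hire, and whether the hire
# was still employed after 90 days (1 = retained, 0 = left).
scores = [55, 60, 72, 80, 85, 90]
retained = [0, 0, 1, 0, 1, 1]

r = pearson(scores, retained)  # positive here: higher scores go with retention
```

A correlation near zero for a given factor would suggest that factor is not doing predictive work and is a candidate for removal, especially if it also contributes to differing pass-through rates.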

Q: What records should we maintain for compliance purposes?

A: Retain comprehensive documentation including: job analysis supporting screening criteria, system configuration and scoring logic, individual candidate evaluations and screening results, validation studies demonstrating predictive validity, disparate impact analyses, training materials for recruiting teams, and any changes to criteria over time with rationale. Generally retain hiring records for at least two years, though longer retention is advisable. Consult counsel about specific retention requirements in your jurisdictions.

Q: If we discover our screening produces disparate impact, are we automatically in violation?

A: Not necessarily. Disparate impact alone doesn’t prove discrimination if your criteria are job-related and consistent with business necessity, and no alternative criteria could achieve similar results with less impact. However, disparate impact requires immediate investigation. Document your analysis, consult legal counsel, and be prepared to demonstrate business justification or modify criteria. Ignoring known disparate impact creates significant legal exposure.

FAQ

What does it mean to stay compliant with automated screening for hourly jobs?

It involves using AI tools that avoid disparate impact under EEOC guidelines, focus on job-related factors, provide transparency, enable bias monitoring, ensure human oversight, and maintain documentation to defend against discrimination claims in high-volume hiring.

How does CloudApper help stay compliant with automated screening?

CloudApper AI Recruiter uses conversational assessments on job-relevant skills/scenarios (not resumes), automatically monitors pass rates across groups for disparate impact, documents all interactions/scores, supports human review, explains decisions to candidates, and aids validation studies.

What compliance risks does automated screening pose in hourly hiring?

Risks include disparate impact from irrelevant criteria (e.g., education, gaps, ZIP codes), lack of validation showing job-relatedness/business necessity, insufficient transparency, no human oversight, and failure to accommodate disabilities — potentially leading to EEOC investigations or lawsuits.

Does CloudApper support EEOC and disparate impact compliance?

Yes — it eliminates inappropriate filters, applies the four-fifths rule for monitoring, tracks demographic pass-through rates, allows proactive adjustments, and supports validation to prove predictive validity and business necessity.

What steps should HR take to stay compliant with automated screening?

Conduct job analysis; select only relevant factors; implement monitoring/audits; ensure transparency/human oversight; document processes; provide accommodations; perform regular reviews; and validate criteria — CloudApper streamlines these with built-in tools.

Building Sustainable Compliant Screening

The regulatory landscape for automated hiring will continue evolving as technology advances and enforcement priorities shift. Organizations that view compliance as a one-time implementation task will face ongoing challenges. Those that build compliance into their operational DNA—with continuous monitoring, regular validation, proactive adjustments, and commitment to transparency—will navigate regulatory changes successfully.

For talent acquisition professionals and HR leaders managing high-volume hourly hiring, staying compliant with automated screening requires balancing multiple priorities: operational efficiency, legal defensibility, candidate experience, and hiring effectiveness. The organizations succeeding at this balance recognize that compliance and quality aren’t opposing goals but complementary aspects of excellent recruiting.

Automated screening for hourly jobs isn’t going away—the volume demands are too great and efficiency gains too valuable. The question is whether your implementation can withstand regulatory scrutiny while delivering the hiring outcomes your organization needs. With thoughtful design, proper oversight, continuous monitoring, and the right technology partners, the answer can be yes.

To learn more about how CloudApper AI Recruiter delivers compliant automated screening designed specifically for high-volume hourly hiring, visit https://www.cloudapper.ai/ai-recruiter-conversational-chatbot/

What is CloudApper AI Platform?

CloudApper AI is an advanced platform that enables organizations to integrate AI into their existing enterprise systems effortlessly, without the need for technical expertise, costly development, or upgrading the underlying infrastructure. By transforming legacy systems into AI-capable solutions, CloudApper allows companies to harness the power of Generative AI quickly and efficiently. This approach has been successfully implemented with leading systems like UKG, Workday, Oracle, Paradox, Amazon AWS Bedrock and can be applied across various industries, helping businesses enhance productivity, automate processes, and gain deeper insights without the usual complexities. With CloudApper AI, you can start experiencing the transformative benefits of AI today.

CloudApper AI Solutions for HR

- Works with

- and more.

Similar Posts

How cNPS Reflects Your Recruitment and Employer Brand

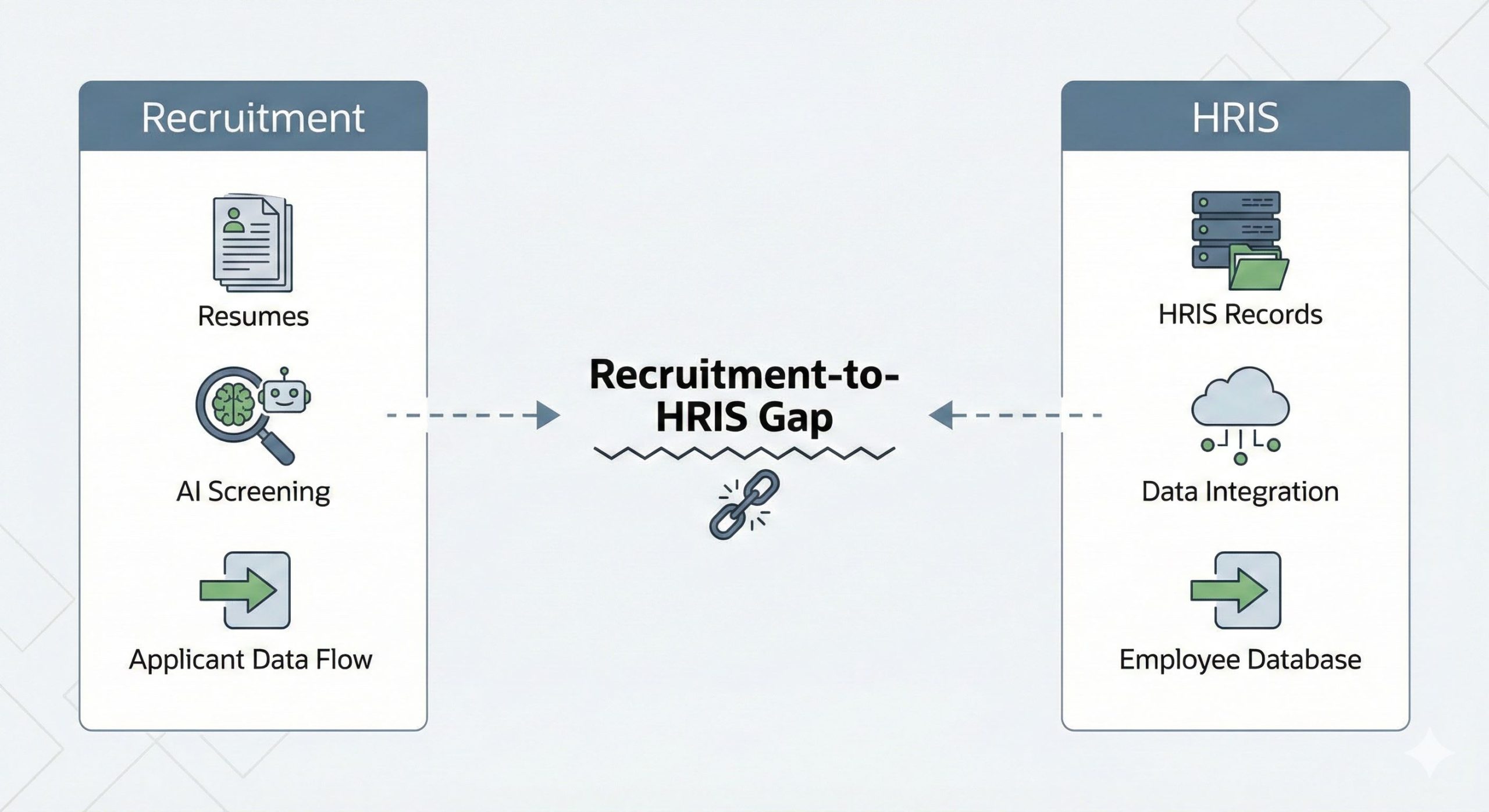

The Recruitment-to-HRIS Gap: Why Your “System of Record” Should Start…